'People will forgive you for being wrong, but they will never forgive you for being right - especially if events prove you right while proving them wrong.' Thomas Sowell

Showing posts with label brain. Show all posts

Monday, 17 June 2024

Monday, 29 January 2024

Monday, 22 May 2023

Wednesday, 11 January 2023

Friday, 31 December 2021

Monday, 6 December 2021

Friday, 10 April 2020

Information can make you sick

Trader turned neuroscientist John Coates in The FT on why economic crises are also medical ones.

As coronavirus infection rates peak in many countries, the markets rally. There is a nagging worry that a second wave of infections might occur once lockdowns are lifted or summer passes. But for anyone immersed in the financial markets there should be a further concern. Volatility created by the pandemic could itself cause a second wave of health problems. Volatility can make you sick, just as a virus can.

To get an inkling of what this other second wave might look like, it helps to recall what happened after the credit crisis. That event was both a financial and medical disaster. Various epidemiological studies suggest it may be responsible for 260,000 cancer deaths in OECD countries; a 17.8 per cent increase in the Greek mortality rate between 2010 and 2016; and a spike in cardiovascular disease in London for the years 2008-09, with an additional 2,000 deaths due to heart attacks. The current economic crisis may be far worse than 2008-09, so the medical fallout could be as well.

Why do financial and medical crises go hand in hand? Many of the above studies focused on unemployment and reduced access to healthcare as causes of the adverse health outcomes. But research my colleagues and I have conducted on trading floors for the past 12 years suggests to me that uncertainty itself, regardless of outcome, can have independent and profound effects on physiology and health.

Our studies were designed initially to test a hunch I had while running a trading desk for Deutsche Bank, that the rollercoaster of physical sensations a person experiences while immersed in the markets alters their risk-taking. After retraining in neuroscience and physiology at Cambridge University, I set up shop on various hedge fund and asset manager trading floors, along with colleagues, mostly medical researchers. Using wearable tech and sampling biochemistry, we tracked the traders’ cardiovascular, endocrine and immune systems.

My goal was to demonstrate how these physiological changes altered trader performance. Increasingly, though, I came to see that a trading floor provides an elegant model for studying occupational health.

One remarkable thing we found was that traders’ bodies calibrated sensitively to market volatility. For humans, apparently, information is physical. You do not process information dispassionately, as a computer does; rather your brain quietly figures out what movement might ensue from the information, and prepares your body, altering heart rate, adrenaline levels, immune activation and so on.

Your brain did not evolve to support Platonic thought; it evolved to process movement. Our larger brain controls a more sophisticated set of muscles, giving us an ability to learn new movements unmatched by any other animal — or robot — on the planet. If you want to understand yourself, fellow humans, even the markets, put movement at the very core of what we are.

Essential to our exquisite motor control is an equally advanced system of fuel injection, one that has been misleadingly termed “the stress response”. Stress connotes something nasty but the stress response is nothing more sinister than a metabolic preparation for movement. Cortisol, the main stress molecule, inhibits bodily systems not needed during movement, such as digestion and reproduction, and marshals glucose and free fatty acids as fuel for our cells.

The stress response evolved to be short-lived, acutely activated for only a few hours or days. Yet during a crisis such as the current one, you can activate the stress response for weeks and months at a time. Then an acute stress response morphs into a chronic one. Your digestive system is inhibited so you become susceptible to gastrointestinal disorders; blood pressure increases so you are prone to hypertension; fatty acids and glucose circulate in your blood but are not used, because you are stuck at home, so your risk of cardiovascular disease rises. Finally, by inhibiting parts of the immune system, stress impairs your ability to recover from diseases such as cancer and Covid-19.

So why the connection with uncertainty? The stress response is largely predictive rather than reactive. Just as we try to predict the future location of a tennis ball, so too we predict our metabolic needs. When we encounter situations of novelty and uncertainty, we do not know what to expect, so we marshal a preparatory stress response. The stress response is comparable to revving your engine at a yellow light. Situations of novelty can be described, following Claude Shannon, inventor of information theory, as “information rich”. Conveniently, informational load in the financial markets can be measured by the level of volatility: the more Shannon information flowing into the markets, the higher the volatility.
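Coates's claim that volatility measures the Shannon information flowing into a market can be made concrete with a toy calculation. The sketch below is an illustration under stated assumptions, not the method used in his studies: it computes realised volatility as the sample standard deviation of returns, and a crude entropy proxy by sorting returns into fixed-width bins. The function names and the 0.005 bin width are hypothetical choices. Wider swings spread returns across more bins, so both numbers rise together.

```python
import math
from collections import Counter


def log_returns(prices):
    """Log returns ln(p_t / p_{t-1}) for a sequence of positive prices."""
    return [math.log(b / a) for a, b in zip(prices, prices[1:])]


def realised_volatility(returns):
    """Sample standard deviation of returns (per period, not annualised)."""
    n = len(returns)
    if n < 2:
        raise ValueError("need at least two returns")
    mean = sum(returns) / n
    return math.sqrt(sum((r - mean) ** 2 for r in returns) / (n - 1))


def entropy_bits(returns, bin_width=0.005):
    """Shannon entropy H = -sum(p * log2 p) of returns bucketed into fixed-width bins.

    The bin width is an arbitrary (hypothetical) choice; H changes if it changes.
    """
    n = len(returns)
    counts = Counter(math.floor(r / bin_width) for r in returns)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())
```

With made-up return series, `entropy_bits([0.001, -0.001] * 4)` gives 1.0 bit (two equally filled bins), while eight larger returns that each land in a different bin give 3.0 bits and a correspondingly higher `realised_volatility`. Because the entropy figure depends on the chosen bin width, only comparisons made at a fixed width are meaningful.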

In two of our studies we found that traders’ cortisol levels did in fact track bond volatility almost tick for tick. It did not even matter if the traders were making or losing money; just put a human in the presence of information and their metabolism calibrates to it. Take a moment to contemplate that curious result — there are molecules in your blood that track the amount of information you process.
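Saying that cortisol "tracked" volatility is, statistically, a claim about correlation over time. The article does not describe the statistics the researchers used, so the following is only a generic sketch: a Pearson correlation between two equally spaced series (for instance, hypothetical cortisol samples and bond volatility measured at the same times), plus a lagged variant that asks whether the response follows its driver after a delay. The names `pearson_r` and `lagged_r` are illustrative, not from any study.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("series must have equal length >= 2")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant series has no defined correlation")
    return sxy / math.sqrt(sxx * syy)


def lagged_r(driver, response, lag):
    """Correlate driver[t] with response[t + lag], for lag >= 0 sampling intervals."""
    if lag < 0:
        raise ValueError("lag must be non-negative")
    if lag == 0:
        return pearson_r(driver, response)
    # Drop the last `lag` driver values and the first `lag` response values
    # so that each driver sample is paired with the response `lag` steps later.
    return pearson_r(driver[:-lag], response[lag:])
```

A lag of 0 corresponds to "tick for tick" tracking; computing `lagged_r` over a few small lags and comparing the values is one simple way to see whether a response trails its driver. Correlation alone says nothing about which way causation runs.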

Today, with historically elevated volatility, there is a good chance cortisol levels are trending higher. Immune systems could also be affected. When your body is attacked by a pathogen, your immune system coordinates a suite of changes known as “sickness behaviour”. You develop a fever, lose your appetite and withdraw socially. You also experience increased risk aversion.

Central to the immune response is inflammation, the process of eliminating pathogens and initiating tissue repair. However, inflammation can also occur in stressful situations, because cytokines, the molecules triggering inflammation, assist in the recruitment of metabolic reserves. If inflammation becomes systemic and chronic, it contributes to a wide range of health problems. We found that interleukin-1-beta, the first responder of inflammation, tracked volatility as closely as cortisol.

Recently we have focused on the cardiovascular system. Working with a large and sophisticated fund manager, we have used cutting-edge wearable tech that permits portfolio managers to track their cardiovascular data, physical activity and sleep. The cardiovascular system similarly tracks volatility and risk appetite.

In short, here we may have a mechanism connecting financial and health crises. On the one hand, fluctuating levels of stress and inflammation affect risk-taking. In a lab-based study, we found that chronically elevated cortisol caused a large decrease in risk appetite. Shifting risk appetite presents tricky problems for risk management, and for central banks: physiology-induced risk aversion can feed a bear market, morphing it into a crash so dangerous that the state has to step in with asset purchases. On the other hand, chronically elevated stress and inflammation are known to contribute to a wide range of health problems.

We are not accustomed to combining financial and medical data in this way. But corporate and state health programs should start.

The markets today are living through a period of volatility the likes of which I have never encountered. March was, to put it mildly, information rich. As a result, there is now the very real possibility of a second wave of disease. Viruses can make you sick, but so too can information.

Thursday, 27 February 2020

Why your brain is not a computer

For decades it has been the dominant metaphor in neuroscience. But could this idea have been leading us astray all along? By Matthew Cobb in The Guardian

We are living through one of the greatest of scientific endeavours – the attempt to understand the most complex object in the universe, the brain. Scientists are accumulating vast amounts of data about structure and function in a huge array of brains, from the tiniest to our own. Tens of thousands of researchers are devoting massive amounts of time and energy to thinking about what brains do, and astonishing new technology is enabling us to both describe and manipulate that activity.

There are many alternative scenarios about how the future of our understanding of the brain could play out: perhaps the various computational projects will come good and theoreticians will crack the functioning of all brains, or the connectomes will reveal principles of brain function that are currently hidden from us. Or a theory will somehow pop out of the vast amounts of imaging data we are generating. Or we will slowly piece together a theory (or theories) out of a series of separate but satisfactory explanations. Or by focusing on simple neural network principles we will understand higher-level organisation. Or some radical new approach integrating physiology and biochemistry and anatomy will shed decisive light on what is going on. Or new comparative evolutionary studies will show how other animals are conscious and provide insight into the functioning of our own brains. Or unimagined new technology will change all our views by providing a radical new metaphor for the brain. Or our computer systems will provide us with alarming new insight by becoming conscious. Or a new framework will emerge from cybernetics, control theory, complexity and dynamical systems theory, semantics and semiotics. Or we will accept that there is no theory to be found because brains have no overall logic, just adequate explanations of each tiny part, and we will have to be satisfied with that.

We can now make a mouse remember something about a smell it has never encountered, turn a bad mouse memory into a good one, and even use a surge of electricity to change how people perceive faces. We are drawing up increasingly detailed and complex functional maps of the brain, human and otherwise. In some species, we can change the brain’s very structure at will, altering the animal’s behaviour as a result. Some of the most profound consequences of our growing mastery can be seen in our ability to enable a paralysed person to control a robotic arm with the power of their mind.

Every day, we hear about new discoveries that shed light on how brains work, along with the promise – or threat – of new technology that will enable us to do such far-fetched things as read minds, or detect criminals, or even be uploaded into a computer. Books are repeatedly produced that each claim to explain the brain in different ways.

And yet there is a growing conviction among some neuroscientists that our future path is not clear. It is hard to see where we should be going, apart from simply collecting more data or counting on the latest exciting experimental approach. As the German neuroscientist Olaf Sporns has put it: “Neuroscience still largely lacks organising principles or a theoretical framework for converting brain data into fundamental knowledge and understanding.” Despite the vast number of facts being accumulated, our understanding of the brain appears to be approaching an impasse.

In 2017, the French neuroscientist Yves Frégnac focused on the current fashion of collecting massive amounts of data in expensive, large-scale projects and argued that the tsunami of data they are producing is leading to major bottlenecks in progress, partly because, as he put it pithily, “big data is not knowledge”.

“Only 20 to 30 years ago, neuroanatomical and neurophysiological information was relatively scarce, while understanding mind-related processes seemed within reach,” Frégnac wrote. “Nowadays, we are drowning in a flood of information. Paradoxically, all sense of global understanding is in acute danger of getting washed away. Each overcoming of technological barriers opens a Pandora’s box by revealing hidden variables, mechanisms and nonlinearities, adding new levels of complexity.”

The neuroscientists Anne Churchland and Larry Abbott have also emphasised our difficulties in interpreting the massive amount of data that is being produced by laboratories all over the world: “Obtaining deep understanding from this onslaught will require, in addition to the skilful and creative application of experimental technologies, substantial advances in data analysis methods and intense application of theoretic concepts and models.”

There are indeed theoretical approaches to brain function, including to the most mysterious thing the human brain can do – produce consciousness. But none of these frameworks are widely accepted, for none has yet passed the decisive test of experimental investigation. It is possible that repeated calls for more theory may be a pious hope. It can be argued that there is no possible single theory of brain function, not even in a worm, because a brain is not a single thing. (Scientists even find it difficult to come up with a precise definition of what a brain is.)

As observed by Francis Crick, the co-discoverer of the DNA double helix, the brain is an integrated, evolved structure with different bits of it appearing at different moments in evolution and adapted to solve different problems. Our current comprehension of how it all works is extremely partial – for example, most neuroscience sensory research has been focused on sight, not smell; smell is conceptually and technically more challenging. But olfaction and vision work differently, both computationally and structurally. By focusing on vision, we have developed a very limited understanding of what the brain does and how it does it.

The nature of the brain – simultaneously integrated and composite – may mean that our future understanding will inevitably be fragmented and composed of different explanations for different parts. Churchland and Abbott spelled out the implication: “Global understanding, when it comes, will likely take the form of highly diverse panels loosely stitched together into a patchwork quilt.”

For more than half a century, all those highly diverse panels of patchwork we have been working on have been framed by thinking that brain processes involve something like those carried out in a computer. But that does not mean this metaphor will continue to be useful in the future. At the very beginning of the digital age, in 1951, the pioneer neuroscientist Karl Lashley argued against the use of any machine-based metaphor.

“Descartes was impressed by the hydraulic figures in the royal gardens, and developed a hydraulic theory of the action of the brain,” Lashley wrote. “We have since had telephone theories, electrical field theories and now theories based on computing machines and automatic rudders. I suggest we are more likely to find out about how the brain works by studying the brain itself, and the phenomena of behaviour, than by indulging in far-fetched physical analogies.”

This dismissal of metaphor has recently been taken even further by the French neuroscientist Romain Brette, who has challenged the most fundamental metaphor of brain function: coding. Since its inception in the 1920s, the idea of a neural code has come to dominate neuroscientific thinking – more than 11,000 papers on the topic have been published in the past 10 years. Brette’s fundamental criticism was that, in thinking about “code”, researchers inadvertently drift from a technical sense, in which there is a link between a stimulus and the activity of the neuron, to a representational sense, according to which neuronal codes represent that stimulus.

The unstated implication in most descriptions of neural coding is that the activity of neural networks is presented to an ideal observer or reader within the brain, often described as “downstream structures” that have access to the optimal way to decode the signals. But the ways in which such structures actually process those signals are unknown, and are rarely explicitly hypothesised, even in simple models of neural network function.
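Brette's distinction between the technical and representational senses of "code", and the ideal observer described above, can be made concrete with a textbook toy model. The sketch below assumes Gaussian tuning curves and independent Poisson spike counts; all parameters and function names are hypothetical. `tuning_curve` is the technical sense: a link between a stimulus and a neuron's activity. `ideal_observer_decode` is the idealised downstream reader, which knows every neuron's tuning curve exactly and picks the candidate stimulus that makes the observed counts most likely.

```python
import math


def tuning_curve(stimulus, preferred, peak_rate=20.0, width=1.0, baseline=1.0):
    """Expected spike count of a model neuron: a Gaussian bump around its preferred stimulus."""
    return baseline + peak_rate * math.exp(-((stimulus - preferred) ** 2) / (2 * width ** 2))


def poisson_log_likelihood(counts, stimulus, preferreds):
    """log P(counts | stimulus), assuming each neuron fires independently with Poisson noise."""
    total = 0.0
    for k, pref in zip(counts, preferreds):
        lam = tuning_curve(stimulus, pref)
        total += k * math.log(lam) - lam - math.lgamma(k + 1)
    return total


def ideal_observer_decode(counts, preferreds, candidates):
    """Maximum-likelihood readout: the candidate stimulus that best explains the counts."""
    return max(candidates, key=lambda s: poisson_log_likelihood(counts, s, preferreds))
```

For example, with five neurons preferring stimuli 0 through 4 and counts set to the rounded expected values for a stimulus of 2.0, the decoder picks 2.0 from a grid of candidates in steps of 0.5. Nothing in the model says which real brain structure, if any, would carry out that maximisation, which is the gap the critique points to.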

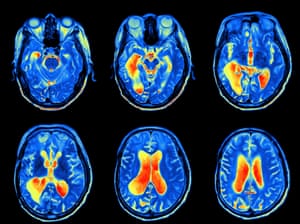

MRI scan of a brain. Photograph: Getty/iStockphoto

The processing of neural codes is generally seen as a series of linear steps – like a line of dominoes falling one after another. The brain, however, consists of highly complex neural networks that are interconnected, and which are linked to the outside world to effect action. Focusing on sets of sensory and processing neurons without linking these networks to the behaviour of the animal misses the point of all that processing.

By viewing the brain as a computer that passively responds to inputs and processes data, we forget that it is an active organ, part of a body that is intervening in the world, and which has an evolutionary past that has shaped its structure and function. This view of the brain has been outlined by the Hungarian neuroscientist György Buzsáki in his recent book The Brain from Inside Out. According to Buzsáki, the brain is not simply passively absorbing stimuli and representing them through a neural code, but rather is actively searching through alternative possibilities to test various options. His conclusion – following scientists going back to the 19th century – is that the brain does not represent information: it constructs it.

The metaphors of neuroscience – computers, coding, wiring diagrams and so on – are inevitably partial. That is the nature of metaphors, which have been intensely studied by philosophers of science and by scientists, as they seem to be so central to the way scientists think. But metaphors are also rich and allow insight and discovery. There will come a point when the understanding they allow will be outweighed by the limits they impose, but in the case of computational and representational metaphors of the brain, there is no agreement that such a moment has arrived. From a historical point of view, the very fact that this debate is taking place suggests that we may indeed be approaching the end of the computational metaphor. What is not clear, however, is what would replace it.

Scientists often get excited when they realise how their views have been shaped by the use of metaphor, and grasp that new analogies could alter how they understand their work, or even enable them to devise new experiments. Coming up with those new metaphors is challenging – most of those used in the past with regard to the brain have been related to new kinds of technology. This could imply that the appearance of new and insightful metaphors for the brain and how it functions hinges on future technological breakthroughs, on a par with hydraulic power, the telephone exchange or the computer. There is no sign of such a development; despite the latest buzzwords that zip about – blockchain, quantum supremacy (or quantum anything), nanotech and so on – it is unlikely that these fields will transform either technology or our view of what brains do.

One sign that our metaphors may be losing their explanatory power is the widespread assumption that much of what nervous systems do, from simple systems right up to the appearance of consciousness in humans, can only be explained as emergent properties – things that you cannot predict from an analysis of the components, but which emerge as the system functions.

In 1981, the British psychologist Richard Gregory argued that the reliance on emergence as a way of explaining brain function indicated a problem with the theoretical framework: “The appearance of ‘emergence’ may well be a sign that a more general (or at least different) conceptual scheme is needed … It is the role of good theories to remove the appearance of emergence. (So explanations in terms of emergence are bogus.)”

This overlooks the fact that there are different kinds of emergence: weak and strong. Weak emergent features, such as the movement of a shoal of tiny fish in response to a shark, can be understood in terms of the rules that govern the behaviour of their component parts. In such cases, apparently mysterious group behaviours are based on the behaviour of individuals, each of which is responding to factors such as the movement of a neighbour, or external stimuli such as the approach of a predator.
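
The shoal example can be made concrete with a small sketch. Under simplifying assumptions made here purely for illustration (fish on a line, a fixed predator, and two made-up distance thresholds), only the fish nearest the predator sees it, yet a wave of flight sweeps the whole shoal because each fish obeys one local rule: start fleeing if a close neighbour is fleeing. The function name and its parameters are hypothetical.

```python
from typing import List


def propagate_alarm(positions: List[float], predator: float,
                    sight: float = 1.5, neighbour: float = 1.0) -> List[List[bool]]:
    """Return the fleeing state of every fish after each round of local signalling.

    Hypothetical model: `sight` and `neighbour` are illustrative distances.
    """
    # Only fish within `sight` of the predator react to it directly.
    fleeing = [abs(p - predator) <= sight for p in positions]
    history = [fleeing]
    while True:
        # Each fish follows one local rule: flee if any fish within
        # `neighbour` distance is already fleeing.
        nxt = [
            fleeing[i] or any(
                fleeing[j] and abs(p - q) <= neighbour
                for j, q in enumerate(positions)
            )
            for i, p in enumerate(positions)
        ]
        if nxt == fleeing:  # no fish changed state, so the wave has stopped
            return history
        fleeing = nxt
        history.append(fleeing)


if __name__ == "__main__":
    # Six fish spaced one unit apart; the predator sits just left of the first.
    for wave in propagate_alarm([0, 1, 2, 3, 4, 5], predator=-1):
        print("".join("F" if f else "." for f in wave))
    # prints F....., FF...., FFF..., FFFF.., FFFFF., FFFFFF (one per line)
```

Nothing in the group-level wave goes beyond the individual rule, which is what makes it weak rather than strong emergence. A fish placed too far from any neighbour (say at position 10) never joins the flight.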

This kind of weak emergence cannot explain the activity of even the simplest nervous systems, never mind the working of your brain, so we fall back on strong emergence, where the phenomenon that emerges cannot be explained by the activity of the individual components. You and the page you are reading this on are both made of atoms, but your ability to read and understand comes from features that emerge through atoms in your body forming higher-level structures, such as neurons and their patterns of firing – not simply from atoms interacting.

Strong emergence has recently been criticised by some neuroscientists as risking “metaphysical implausibility”, because there is no evident causal mechanism, nor any single explanation, of how emergence occurs. Like Gregory, these critics claim that the reliance on emergence to explain complex phenomena suggests that neuroscience is at a key historical juncture, similar to that which saw the slow transformation of alchemy into chemistry. But faced with the mysteries of neuroscience, emergence is often our only resort. And it is not so daft – the amazing properties of deep-learning programmes, which at root cannot be explained by the people who design them, are essentially emergent properties.

Interestingly, while some neuroscientists are discombobulated by the metaphysics of emergence, researchers in artificial intelligence revel in the idea, believing that the sheer complexity of modern computers, or of their interconnectedness through the internet, will lead to what is dramatically known as the singularity. Machines will become conscious.

There are plenty of fictional explorations of this possibility (in which things often end badly for all concerned), and the subject certainly excites the public’s imagination, but there is no reason, beyond our ignorance of how consciousness works, to suppose that it will happen in the near future. In principle, it must be possible, because the working hypothesis is that mind is a product of matter, which we should therefore be able to mimic in a device. But the scale of complexity of even the simplest brains dwarfs any machine we can currently envisage. For decades – centuries – to come, the singularity will be the stuff of science fiction, not science.

A related view of the nature of consciousness turns the brain-as-computer metaphor into a strict analogy. Some researchers view the mind as a kind of operating system that is implemented on neural hardware, with the implication that our minds, seen as a particular computational state, could be uploaded on to some device or into another brain. In the way this is generally presented, this is wrong, or at best hopelessly naive.

The materialist working hypothesis is that brains and minds, in humans and maggots and everything else, are identical. Neurons and the processes they support – including consciousness – are the same thing. In a computer, software and hardware are separate; however, our brains and our minds consist of what can best be described as wetware, in which what is happening and where it is happening are completely intertwined.

Imagining that we can repurpose our nervous system to run different programmes, or upload our mind to a server, might sound scientific, but lurking behind this idea is a non-materialist view going back to Descartes and beyond. It implies that our minds are somehow floating about in our brains, and could be transferred into a different head or replaced by another mind. It would be possible to give this idea a veneer of scientific respectability by posing it in terms of reading the state of a set of neurons and writing that to a new substrate, organic or artificial.

But to even begin to imagine how that might work in practice, we would need an understanding of neuronal function far beyond anything we can currently envisage, unimaginably vast computational power, and a simulation that precisely mimicked the structure of the brain in question. For this to be possible even in principle, we would first need to be able to fully model the activity of a nervous system capable of holding a single state, never mind a thought. We are so far away from taking this first step that the possibility of uploading your mind can be dismissed as a fantasy, at least until the far future.

For the moment, the brain-as-computer metaphor retains its dominance, although there is disagreement about how strong a metaphor it is. In 2015, the roboticist Rodney Brooks chose the computational metaphor of the brain as his pet hate in his contribution to a collection of essays entitled This Idea Must Die. Less dramatically, but drawing similar conclusions, two decades earlier the historian S Ryan Johansson argued that “endlessly debating the truth or falsity of a metaphor like ‘the brain is a computer’ is a waste of time. The relationship proposed is metaphorical, and it is ordering us to do something, not trying to tell us the truth.”

On the other hand, the US expert in artificial intelligence, Gary Marcus, has made a robust defence of the computer metaphor: “Computers are, in a nutshell, systematic architectures that take inputs, encode and manipulate information, and transform their inputs into outputs. Brains are, so far as we can tell, exactly that. The real question isn’t whether the brain is an information processor, per se, but rather how do brains store and encode information, and what operations do they perform over that information, once it is encoded.”

Marcus went on to argue that the task of neuroscience is to “reverse engineer” the brain, much as one might study a computer, examining its components and their interconnections to decipher how it works. This suggestion has been around for some time. In 1989, Crick recognised its attractiveness, but felt it would fail, because of the brain’s complex and messy evolutionary history – he dramatically claimed it would be like trying to reverse engineer a piece of “alien technology”. Attempts to find an overall explanation of how the brain works that flow logically from its structure would be doomed to failure, he argued, because the starting point is almost certainly wrong – there is no overall logic.

Reverse engineering a computer is often used as a thought experiment to show how, in principle, we might understand the brain. Inevitably, these thought experiments are successful, encouraging us to pursue this way of understanding the squishy organs in our heads. But in 2017, a pair of neuroscientists decided to actually do the experiment on a real computer chip, which had a real logic and real components with clearly designed functions. Things did not go as expected.

The duo – Eric Jonas and Konrad Paul Kording – employed the very techniques they normally used to analyse the brain and applied them to the MOS 6507 processor found in computers from the late 70s and early 80s that enabled those machines to run video games such as Donkey Kong and Space Invaders.

First, they obtained the connectome of the chip by scanning the 3510 enhancement-mode transistors it contained and simulating the device on a modern computer (including running the games programmes for 10 seconds). They then used the full range of neuroscientific techniques, such as “lesions” (removing transistors from the simulation), analysing the “spiking” activity of the virtual transistors and studying their connectivity, observing the effect of various manipulations on the behaviour of the system, as measured by its ability to launch each of the games.
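
The lesion technique can be illustrated on a far smaller circuit. The sketch below is hypothetical and is not Jonas and Kording’s code. Instead of the 3,510 transistors of a MOS 6507, it wires a half adder from five NAND gates. It treats “correct sum” and “correct carry” as the circuit’s two behaviours and lesions each gate in turn by forcing its output to 0.

```python
from itertools import product

# Hypothetical toy "chip": a half adder built only from NAND gates.
# Each gate lists the two signals it reads; gates appear in evaluation order.
CIRCUIT = {
    "n1": ("a", "b"),
    "n2": ("a", "n1"),
    "n3": ("b", "n1"),
    "sum": ("n2", "n3"),     # n1..sum together form XOR(a, b)
    "carry": ("n1", "n1"),   # NAND of a signal with itself acts as NOT
}


def run(a: int, b: int, lesioned=frozenset()):
    """Simulate the circuit; a lesioned gate is stuck at 0, like a removed part."""
    signals = {"a": a, "b": b}
    for gate, (x, y) in CIRCUIT.items():
        signals[gate] = 0 if gate in lesioned else 1 - (signals[x] & signals[y])
    return signals["sum"], signals["carry"]


def behaviour_ok(name: str, lesioned) -> bool:
    """Does the behaviour ('sum' or 'carry') stay correct on every input?"""
    for a, b in product((0, 1), repeat=2):
        s, c = run(a, b, lesioned)
        expected = (a ^ b) if name == "sum" else (a & b)
        if (s if name == "sum" else c) != expected:
            return False
    return True


def lesion_study():
    """Map each lesioned gate to the set of behaviours it abolishes."""
    return {
        gate: {name for name in ("sum", "carry") if not behaviour_ok(name, {gate})}
        for gate in CIRCUIT
    }


if __name__ == "__main__":
    for gate, broken in lesion_study().items():
        print(f"lesion {gate:>5}: breaks {sorted(broken) or 'nothing'}")
```

Lesioning `n1` abolishes both behaviours, although `n1` is only a shared intermediate signal rather than a dedicated “adding” or “carrying” component. Even in a five-gate circuit with full ground truth, a lesion map can invite the wrong functional story.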

Despite deploying this powerful analytical armoury, and despite the fact that there is a clear explanation for how the chip works (it has “ground truth”, in technospeak), the study failed to detect the hierarchy of information processing that occurs inside the chip. As Jonas and Kording put it, the techniques fell short of producing “a meaningful understanding”. Their conclusion was bleak: “Ultimately, the problem is not that neuroscientists could not understand a microprocessor, the problem is that they would not understand it given the approaches they are currently taking.”

This sobering outcome suggests that, despite the attractiveness of the computer metaphor and the fact that brains do indeed process information and somehow represent the external world, we still need to make significant theoretical breakthroughs in order to make progress. Even if our brains were designed along logical lines, which they are not, our present conceptual and analytical tools would be completely inadequate for the task of explaining them. This does not mean that simulation projects are pointless – by modelling (or simulating) we can test hypotheses and, by linking the model with well-established systems that can be precisely manipulated, we can gain insight into how real brains function. This is an extremely powerful tool, but a degree of modesty is required when it comes to the claims that are made for such studies, and realism is needed with regard to the difficulties of drawing parallels between brains and artificial systems.

Current ‘reverse engineering’ techniques cannot deliver a proper understanding of an Atari console chip, let alone of a human brain. Photograph: Radharc Images/Alamy

Even something as apparently straightforward as working out the storage capacity of a brain falls apart when it is attempted. Such calculations are fraught with conceptual and practical difficulties. Brains are natural, evolved phenomena, not digital devices. Although it is often argued that particular functions are tightly localised in the brain, as they are in a machine, this certainty has been repeatedly challenged by new neuroanatomical discoveries of unsuspected connections between brain regions, or amazing examples of plasticity, in which people can function normally without bits of the brain that are supposedly devoted to particular behaviours.

In reality, the very structures of a brain and a computer are completely different. In 2006, Larry Abbott wrote an essay titled “Where are the switches on this thing?”, in which he explored the potential biophysical bases of that most elementary component of an electronic device – a switch. Although inhibitory synapses can change the flow of activity by rendering a downstream neuron unresponsive, such interactions are relatively rare in the brain.

A neuron is not like a binary switch that can be turned on or off, forming a wiring diagram. Instead, neurons respond in an analogue way, changing their activity in response to changes in stimulation. The nervous system alters its working by changes in the patterns of activation in networks of cells composed of large numbers of units; it is these networks that channel, shift and shunt activity. Unlike any device we have yet envisaged, the nodes of these networks are not stable points like transistors or valves, but sets of neurons – hundreds, thousands, tens of thousands strong – that can respond consistently as a network over time, even if the component cells show inconsistent behaviour.
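
The contrast between a switch and a graded, population-level response can be sketched numerically. In this hypothetical model, the sigmoid response curve, the spread of thresholds and the noise level are all assumptions rather than measurements. Each unit’s output varies smoothly with stimulus strength and is noisy from trial to trial, yet the average over a thousand units barely moves between trials.

```python
import math
import random
import statistics


def rate(stimulus: float, threshold: float, gain: float = 1.0) -> float:
    """Graded (analogue) response between 0 and 1, not an on/off switch."""
    return 1.0 / (1.0 + math.exp(-gain * (stimulus - threshold)))


def make_population(n: int, rng: random.Random) -> list:
    """Hypothetical population: each unit gets its own response threshold."""
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def trial(population, stimulus, rng, noise=0.3):
    """One trial: each unit's graded response plus independent noise."""
    return [rate(stimulus, t) + rng.gauss(0.0, noise) for t in population]


if __name__ == "__main__":
    rng = random.Random(42)
    pop = make_population(1000, rng)

    # Graded: the population's mean response rises smoothly with the stimulus.
    for s in (-2, -1, 0, 1, 2):
        print(s, round(statistics.fmean(trial(pop, s, rng)), 2))

    # Consistent: one cell is erratic across trials; the population mean is not.
    single, pooled = [], []
    for _ in range(50):
        responses = trial(pop, 0.5, rng)
        single.append(responses[0])
        pooled.append(statistics.fmean(responses))
    print("single-cell spread:", round(statistics.stdev(single), 3))
    print("population spread: ", round(statistics.stdev(pooled), 3))
```

This only shows why a set of noisy units can behave reliably as a group. It says nothing about how real networks “channel, shift and shunt activity”, which is the harder problem the next paragraph describes.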

Understanding even the simplest of such networks is currently beyond our grasp. Eve Marder, a neuroscientist at Brandeis University, has spent much of her career trying to understand how a few dozen neurons in the lobster’s stomach produce a rhythmic grinding. Despite vast amounts of effort and ingenuity, we still cannot predict the effect of changing one component in this tiny network that is not even a simple brain.

This is the great problem we have to solve. On the one hand, brains are made of neurons and other cells, which interact together in networks, the activity of which is influenced not only by synaptic activity, but also by various factors such as neuromodulators. On the other hand, it is clear that brain function involves complex dynamic patterns of neuronal activity at a population level. Finding the link between these two levels of analysis will be a challenge for much of the rest of the century, I suspect. And the prospect of properly understanding what is happening in cases of mental illness is even further away.

Not all neuroscientists are pessimistic – some confidently claim that the application of new mathematical methods will enable us to understand the myriad interconnections in the human brain. Others – like myself – favour studying animals at the other end of the scale, focusing our attention on the tiny brains of worms or maggots and employing the well-established approach of seeking to understand how a simple system works and then applying those lessons to more complex cases. Many neuroscientists, if they think about the problem at all, simply consider that progress will inevitably be piecemeal and slow, because there is no grand unified theory of the brain lurking around the corner.

There are many alternative scenarios about how the future of our understanding of the brain could play out: perhaps the various computational projects will come good and theoreticians will crack the functioning of all brains, or the connectomes will reveal principles of brain function that are currently hidden from us. Or a theory will somehow pop out of the vast amounts of imaging data we are generating. Or we will slowly piece together a theory (or theories) out of a series of separate but satisfactory explanations. Or by focusing on simple neural network principles we will understand higher-level organisation. Or some radical new approach integrating physiology and biochemistry and anatomy will shed decisive light on what is going on. Or new comparative evolutionary studies will show how other animals are conscious and provide insight into the functioning of our own brains. Or unimagined new technology will change all our views by providing a radical new metaphor for the brain. Or our computer systems will provide us with alarming new insight by becoming conscious. Or a new framework will emerge from cybernetics, control theory, complexity and dynamical systems theory, semantics and semiotics. Or we will accept that there is no theory to be found because brains have no overall logic, just adequate explanations of each tiny part, and we will have to be satisfied with that.

Wednesday, 24 August 2016

How tricksters make you see what they want you to see

By David Robson in the BBC

Could you be fooled into “seeing” something that doesn’t exist?

Matthew Tompkins, a magician-turned-psychologist at the University of Oxford, has been investigating the ways that tricksters implant thoughts in people’s minds. With a masterful sleight of hand, he can make a poker chip disappear right in front of your eyes, or conjure a crayon out of thin air.

And finally, there is the “phantom vanish trick”, which was the focus of his latest experiment.

Although interesting in themselves, the first three videos are really a warm-up for this more ambitious illusion, in which Tompkins tries to plant an image in the participants’ minds using the power of suggestion alone.

Around a third of his participants believed they had seen Tompkins take an object from the pot and tuck it into his hand – only to make it disappear later on. In fact, his fingers were always empty, but his clever pantomiming created an illusion of a real, visible object.

How is that possible? Psychologists have long known that the brain acts like an expert art restorer, touching up the rough images hitting our retina according to context and expectation. This “top-down processing” allows us to build a clear picture from the barest of details (as in the famous “Dalmatian in the snow” picture). It’s the reason we can make out a face in the dark, for instance. But occasionally, the brain may fill in too many of the gaps, allowing expectation to warp a picture so that it no longer reflects reality. In some ways, we really do see what we want to see.

This “top-down processing” is reflected in measures of brain activity, and it could easily explain the phantom vanish trick. The warm-up videos, the direction of his gaze, and his deft hand gestures all primed the participants’ brains to see the object between his fingers, and for some participants, this expectation overrode the reality in front of their eyes.

Sunday, 1 March 2015

14 Things To Know Before You Start Meditating

Sasha Bronner in The Huffington Post

New to meditating? It can be confusing. Not new to meditating? It can still be confusing.

The practice of meditation is said to have been around for thousands of years -- and yet, in the last few years, especially in America, it seems that everyone knows at least one person who has taken on the ancient art of de-stressing.

Because it has been around for so long and because there are many different types of meditation, there are some essential truths you should know before you too take the dive into meditation or mindfulness (or both). Take a look at the suggestions below.

1. You don't need a mantra (but you can have one if you want).

It has become common for people to confuse mantra with the idea of an intention or specific words to live by. A motto. But the actual word "mantra" means something quite different. Man means mind and tra means vehicle. A mantra is a mind-vehicle. Mantras can be used in meditation as a tool to help your mind enter (or stay in) your meditation practice.

Other types of meditation use things like sound, counting breaths or even just the breath itself as a similar tool. Another way to think about a mantra is like an anchor. It anchors your mind as you meditate and can be what you come back to when your thoughts (inevitably) wander.

2. Don’t expect your brain to go blank.

One of the biggest misconceptions about meditation is that your mind is supposed to go blank and that you reach a super-Zen state of consciousness. This is typically not true. It's important to keep in mind that you don’t have to try to clear thoughts from your brain during meditation.

The "nature of the mind to move from one thought to another is in fact the very basis of meditation," says Deepak Chopra, a meditation expert and founder of the Chopra Center for Wellbeing. "We don’t eliminate the tendency of the mind to jump from one thought to another. That’s not possible anyway." Depending on the type of meditation you learn, there are tools for gently bringing your focus back to your meditation practice. Alternatively, some types of meditation actually emphasize being present and mindful to thoughts as they arise as part of the practice.

3. You do not have to sit cross-legged or hold your hands in any position.

You can sit in any position that is comfortable to you. Most people sit upright in a chair or on a cushion. Your hands can fall gently in your lap or at your sides. It is best not to lie down unless you’re doing a body scan meditation or meditation for sleep.

4. Having said that, it’s also okay if you do fall asleep.

It’s very common to doze off during meditation and some believe that the brief sleep you get is actually very restorative. It’s not the goal, but if it’s a byproduct of your meditation, that is OK. Other practices might give tricks on how to stay more alert if you fall asleep (check out No. 19 on these tips from Headspace), like sitting upright in a chair. In our experience, the relaxation that can come from meditation is a wonderful thing -- and if that means a mini-snooze, so be it.

5. There are many ways to learn.

With meditation becoming so available to the masses, you can learn how to meditate alone, in a group, on a retreat, with your phone or even by listening to guided meditations online. Everyone has a different learning style and there are plenty of options out there to fit individual needs. Read our suggestions for how to get started.

6. You can meditate for a distinct purpose or for general wellness.

Some meditation exercises are aimed at one goal, like helping to ease anxiety or helping people who have trouble sleeping. One popular mindfulness meditation technique, loving-kindness meditation, promotes the positive act of wishing ourselves or others happiness. However, if you don't have a specific goal in mind, you can still reap the benefits of the practice.

7. Meditation has so many health perks.

Meditation can help boost the immune system, reduce stress and anxiety, improve concentration, decrease blood pressure, improve your sleep, increase your happiness, and has even helped people deal with alcohol or smoking addictions.

8. It can also physically change your brain.

Researchers have not only looked at the brains of meditators and non-meditators to study the differences, but they have also started looking at a group of brains before and after eight weeks of mindfulness meditation. The results are remarkable. Scientists noted everything from "changes in grey matter volume to reduced activity in the 'me' centers of the brain to enhanced connectivity between brain regions," Forbes reported earlier this year.

Those who participated in an eight-week mindfulness program also showed signs of a shrinking of the amygdala (the brain’s "fight or flight" center) as well as a thickening of the prefrontal cortex, which handles brain functions like concentration and awareness.

Researchers also looked at brain imaging of long-term, experienced meditators. Many, when not in a state of meditation, had brain image results that looked more like the images of a regular person's brain while meditating. In other words, the experienced meditator's brain is remarkably different from the non-meditator's brain.

9. Oprah meditates.

So do Paul McCartney, Jerry Seinfeld, Howard Stern, Lena Dunham, Barbara Walters, Arianna Huffington and Kobe Bryant. Oprah teams up with Deepak Chopra for 21-day online meditation experiences that anyone can join, anywhere. The program is free and the next one begins in March 2015.

10. It’s more mainstream than you might think.

Think meditation is still a new-age concept? Think again. GQ magazine wrote its own guide to Transcendental Meditation. Time’s February 2014 cover story was devoted to "the mindful revolution" and many big companies, such as Google, Apple, Nike and HBO, have started promoting meditation at work with free classes and new meditation rooms.

11. Mindfulness and meditation are not the same thing.

The two are often talked about together because one form of meditation is called mindfulness meditation. Mindfulness is defined most loosely as cultivating a present awareness in your everyday life. One way to do this is through meditation -- but not all meditation practices necessarily focus on mindfulness.

Mindfulness meditation is referred to most often when experts talk about the health benefits of meditation. Anderson Cooper recently did a special on his experience practicing mindfulness with expert Jon Kabat-Zinn for "60 Minutes."

12. Don’t believe yourself when you say you don’t have time to meditate.

While some formal meditation practices call for 20 minutes, twice a day, many other meditation exercises can be as short as five or 10 minutes. We easily spend that amount of time flipping through Netflix or liking things on Instagram. For some, it’s setting the morning alarm 10 minutes earlier or getting off email a few minutes before dinner to practice.

Another way to think about incorporating meditation into your daily routine is likening it to brushing your teeth. You might not do it at the exact same time each morning, but you always make sure you brush your teeth before you leave the house for the day. For those who start to see the benefits of daily meditation, it becomes a non-negotiable part of their routine.

13. You may not think you’re “doing it right” the first time you meditate.

Or the second or the third. That’s OK. It’s an exercise that you practice just like sit-ups or push-ups at the gym. You don’t expect a six-pack after one day of exercise, so think of meditation the same way.

14. Take a step back.

Many meditation teachers encourage you to assess your progress by noticing how you feel in between meditations -- not while you’re sitting down practicing one. It’s not uncommon to feel bored, distracted, frustrated or even discouraged some days while meditating. Hopefully you also have days of feeling energized, calm, happy and at peace. Instead of judging each meditation, try to think about how you feel throughout the week. Less stressed, less road rage, sleeping a little bit better? Sounds like it's working.

Friday, 27 December 2013

Brainwashed by the cult of the super-rich