Tim Harford in The Financial Times

In the middle of a crisis, it is not always easy to work out what has changed forever, and what will soon fade into history. Has the coronavirus pandemic ushered in the end of the office, the end of the city, the end of air travel, the end of retail and the end of theatre? Or has it merely ruined a lovely spring?

Stretch a rubber band, and you can expect it to snap back when released. Stretch a sheet of plastic wrapping and it will stay stretched. In economics, we borrow the term “hysteresis” to refer to systems that, like the plastic wrap, do not automatically return to the status quo.

The effects can be grim. A recession can leave scars that last, even once growth resumes. Good businesses disappear; people who lose jobs can then lose skills, contacts and confidence. But it is surprising how often, for better or worse, things snap back to normal, like the rubber band.

The murderous destruction of the World Trade Center in 2001, for example, had a lasting impact on airport security screening, but Manhattan is widely regarded as having bounced back quickly. There was a fear, at the time, that people would shun dense cities and tall buildings, but little evidence that they really did.

What, then, will the virus change permanently? Start with the most obvious impact: the people who have died will not be coming back. Most were elderly but not necessarily at death’s door, and some were young. More than one study has estimated that, on average, victims of Covid-19 could have expected to live for more than a decade.

But some of the economic damage will also be irreversible. The safest prediction is that activities which were already marginal will struggle to return.

After the devastating Kobe earthquake in Japan in 1995, economic recovery was impressive but partial. For a cluster of businesses making plastic shoes, already under pressure from Chinese competition, the earthquake turned a slow decline into an abrupt one.

Ask, “If we were starting from scratch, would we do it like this again?” If the answer is No, do not expect a post-coronavirus rebound. Drab high streets are in trouble.

But there is not necessarily a correlation between the hardest blow and the most lingering bruise.

Consider live music: it is devastated right now — it is hard to conceive of a packed concert hall or dance floor any time soon.

Yet live music is much loved and hard to replace. When Covid-19 has been tamed — whether by a vaccine, better treatments or familiarity breeding indifference — the demand will be back. Musicians and music businesses will have suffered hardship, but many of the venues will be untouched. The live experience has survived decades of competition from vinyl to Spotify. It will return.

Air travel is another example. We’ve had phone calls for a very long time, and they have always been much easier than getting on an aeroplane. They can replace face-to-face meetings, but they can also spark demand for further meetings. Alas for the planet, much of the travel that felt indispensable before the pandemic will feel indispensable again.

And for all the costs and indignities of a modern aeroplane, tourism depends on travel. It is hard to imagine people submitting to a swab test in order to go to the cinema, but if that becomes part of the rigmarole of flying, many people will comply.

No, the lingering changes may be more subtle. Richard Baldwin, author of The Globotics Upheaval, argues that the world has just run a massive set of experiments in telecommuting. Some have been failures, but the landscape of possibilities has changed.

If people can successfully work from home in the suburbs, how long before companies decide they can work from low-wage economies in another timezone?

The crisis will also spur automation. Robots do not catch coronavirus and are unlikely to spread it; the pandemic will not conjure robot barbers from thin air, but it has pushed companies into automating where they can. Once automated, those jobs will not be coming back.

Some changes will be welcome — a shock can jolt us out of a rut. I hope that we will strive to retain the pleasures of quiet streets, clean air and communities looking out for each other.

But there will be scars that last, especially for the young. People who graduate during a recession are at a measurable disadvantage relative to those who are slightly older or younger. The harm is larger for those in disadvantaged groups, such as racial minorities, and it persists for many years.

And children can suffer long-term harm when they miss school. Those who lack computers, books, quiet space and parents with the time and confidence to help them study are most vulnerable. Good-quality schooling is supposed to last a lifetime; its absence may be felt for a lifetime, too.

This crisis will not last for decades, but some of its effects will.

Friday, 5 June 2020

Thursday, 27 February 2020

Why your brain is not a computer

For decades it has been the dominant metaphor in neuroscience. But could this idea have been leading us astray all along? By Matthew Cobb in The Guardian

We are living through one of the greatest of scientific endeavours – the attempt to understand the most complex object in the universe, the brain. Scientists are accumulating vast amounts of data about structure and function in a huge array of brains, from the tiniest to our own. Tens of thousands of researchers are devoting massive amounts of time and energy to thinking about what brains do, and astonishing new technology is enabling us to both describe and manipulate that activity.

There are many alternative scenarios about how the future of our understanding of the brain could play out: perhaps the various computational projects will come good and theoreticians will crack the functioning of all brains, or the connectomes will reveal principles of brain function that are currently hidden from us. Or a theory will somehow pop out of the vast amounts of imaging data we are generating. Or we will slowly piece together a theory (or theories) out of a series of separate but satisfactory explanations. Or by focusing on simple neural network principles we will understand higher-level organisation. Or some radical new approach integrating physiology and biochemistry and anatomy will shed decisive light on what is going on. Or new comparative evolutionary studies will show how other animals are conscious and provide insight into the functioning of our own brains. Or unimagined new technology will change all our views by providing a radical new metaphor for the brain. Or our computer systems will provide us with alarming new insight by becoming conscious. Or a new framework will emerge from cybernetics, control theory, complexity and dynamical systems theory, semantics and semiotics. Or we will accept that there is no theory to be found because brains have no overall logic, just adequate explanations of each tiny part, and we will have to be satisfied with that.

We can now make a mouse remember something about a smell it has never encountered, turn a bad mouse memory into a good one, and even use a surge of electricity to change how people perceive faces. We are drawing up increasingly detailed and complex functional maps of the brain, human and otherwise. In some species, we can change the brain’s very structure at will, altering the animal’s behaviour as a result. Some of the most profound consequences of our growing mastery can be seen in our ability to enable a paralysed person to control a robotic arm with the power of their mind.

Every day, we hear about new discoveries that shed light on how brains work, along with the promise – or threat – of new technology that will enable us to do such far-fetched things as read minds, or detect criminals, or even be uploaded into a computer. Books are repeatedly produced that each claim to explain the brain in different ways.

And yet there is a growing conviction among some neuroscientists that our future path is not clear. It is hard to see where we should be going, apart from simply collecting more data or counting on the latest exciting experimental approach. As the German neuroscientist Olaf Sporns has put it: “Neuroscience still largely lacks organising principles or a theoretical framework for converting brain data into fundamental knowledge and understanding.” Despite the vast number of facts being accumulated, our understanding of the brain appears to be approaching an impasse.

In 2017, the French neuroscientist Yves Frégnac focused on the current fashion of collecting massive amounts of data in expensive, large-scale projects and argued that the tsunami of data they are producing is leading to major bottlenecks in progress, partly because, as he put it pithily, “big data is not knowledge”.

“Only 20 to 30 years ago, neuroanatomical and neurophysiological information was relatively scarce, while understanding mind-related processes seemed within reach,” Frégnac wrote. “Nowadays, we are drowning in a flood of information. Paradoxically, all sense of global understanding is in acute danger of getting washed away. Each overcoming of technological barriers opens a Pandora’s box by revealing hidden variables, mechanisms and nonlinearities, adding new levels of complexity.”

The neuroscientists Anne Churchland and Larry Abbott have also emphasised our difficulties in interpreting the massive amount of data that is being produced by laboratories all over the world: “Obtaining deep understanding from this onslaught will require, in addition to the skilful and creative application of experimental technologies, substantial advances in data analysis methods and intense application of theoretic concepts and models.”

There are indeed theoretical approaches to brain function, including to the most mysterious thing the human brain can do – produce consciousness. But none of these frameworks are widely accepted, for none has yet passed the decisive test of experimental investigation. It is possible that repeated calls for more theory may be a pious hope. It can be argued that there is no possible single theory of brain function, not even in a worm, because a brain is not a single thing. (Scientists even find it difficult to come up with a precise definition of what a brain is.)

As observed by Francis Crick, the co-discoverer of the DNA double helix, the brain is an integrated, evolved structure with different bits of it appearing at different moments in evolution and adapted to solve different problems. Our current comprehension of how it all works is extremely partial – for example, most neuroscience sensory research has been focused on sight, not smell; smell is conceptually and technically more challenging. But the ways that olfaction and vision work are different, both computationally and structurally. By focusing on vision, we have developed a very limited understanding of what the brain does and how it does it.

The nature of the brain – simultaneously integrated and composite – may mean that our future understanding will inevitably be fragmented and composed of different explanations for different parts. Churchland and Abbott spelled out the implication: “Global understanding, when it comes, will likely take the form of highly diverse panels loosely stitched together into a patchwork quilt.”

For more than half a century, all those highly diverse panels of patchwork we have been working on have been framed by thinking that brain processes involve something like those carried out in a computer. But that does not mean this metaphor will continue to be useful in the future. At the very beginning of the digital age, in 1951, the pioneer neuroscientist Karl Lashley argued against the use of any machine-based metaphor.

“Descartes was impressed by the hydraulic figures in the royal gardens, and developed a hydraulic theory of the action of the brain,” Lashley wrote. “We have since had telephone theories, electrical field theories and now theories based on computing machines and automatic rudders. I suggest we are more likely to find out about how the brain works by studying the brain itself, and the phenomena of behaviour, than by indulging in far-fetched physical analogies.”

This dismissal of metaphor has recently been taken even further by the French neuroscientist Romain Brette, who has challenged the most fundamental metaphor of brain function: coding. Since its inception in the 1920s, the idea of a neural code has come to dominate neuroscientific thinking – more than 11,000 papers on the topic have been published in the past 10 years. Brette’s fundamental criticism was that, in thinking about “code”, researchers inadvertently drift from a technical sense, in which there is a link between a stimulus and the activity of the neuron, to a representational sense, according to which neuronal codes represent that stimulus.

The unstated implication in most descriptions of neural coding is that the activity of neural networks is presented to an ideal observer or reader within the brain, often described as “downstream structures” that have access to the optimal way to decode the signals. But the ways in which such structures actually process those signals are unknown, and are rarely explicitly hypothesised, even in simple models of neural network function.

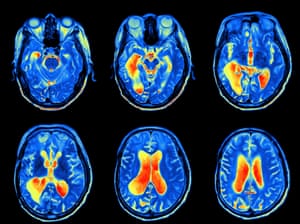

MRI scan of a brain. Photograph: Getty/iStockphoto

The processing of neural codes is generally seen as a series of linear steps – like a line of dominoes falling one after another. The brain, however, consists of highly complex neural networks that are interconnected, and which are linked to the outside world to effect action. Focusing on sets of sensory and processing neurons without linking these networks to the behaviour of the animal misses the point of all that processing.

By viewing the brain as a computer that passively responds to inputs and processes data, we forget that it is an active organ, part of a body that is intervening in the world, and which has an evolutionary past that has shaped its structure and function. This view of the brain has been outlined by the Hungarian neuroscientist György Buzsáki in his recent book The Brain from Inside Out. According to Buzsáki, the brain is not simply passively absorbing stimuli and representing them through a neural code, but rather is actively searching through alternative possibilities to test various options. His conclusion – following scientists going back to the 19th century – is that the brain does not represent information: it constructs it.

The metaphors of neuroscience – computers, coding, wiring diagrams and so on – are inevitably partial. That is the nature of metaphors, which have been intensely studied by philosophers of science and by scientists, as they seem to be so central to the way scientists think. But metaphors are also rich and allow insight and discovery. There will come a point when the understanding they allow will be outweighed by the limits they impose, but in the case of computational and representational metaphors of the brain, there is no agreement that such a moment has arrived. From a historical point of view, the very fact that this debate is taking place suggests that we may indeed be approaching the end of the computational metaphor. What is not clear, however, is what would replace it.

Scientists often get excited when they realise how their views have been shaped by the use of metaphor, and grasp that new analogies could alter how they understand their work, or even enable them to devise new experiments. Coming up with those new metaphors is challenging – most of those used in the past with regard to the brain have been related to new kinds of technology. This could imply that the appearance of new and insightful metaphors for the brain and how it functions hinges on future technological breakthroughs, on a par with hydraulic power, the telephone exchange or the computer. There is no sign of such a development; despite the latest buzzwords that zip about – blockchain, quantum supremacy (or quantum anything), nanotech and so on – it is unlikely that these fields will transform either technology or our view of what brains do.

One sign that our metaphors may be losing their explanatory power is the widespread assumption that much of what nervous systems do, from simple systems right up to the appearance of consciousness in humans, can only be explained as emergent properties – things that you cannot predict from an analysis of the components, but which emerge as the system functions.

In 1981, the British psychologist Richard Gregory argued that the reliance on emergence as a way of explaining brain function indicated a problem with the theoretical framework: “The appearance of ‘emergence’ may well be a sign that a more general (or at least different) conceptual scheme is needed … It is the role of good theories to remove the appearance of emergence. (So explanations in terms of emergence are bogus.)”

This overlooks the fact that there are different kinds of emergence: weak and strong. Weak emergent features, such as the movement of a shoal of tiny fish in response to a shark, can be understood in terms of the rules that govern the behaviour of their component parts. In such cases, apparently mysterious group behaviours are based on the behaviour of individuals, each of which is responding to factors such as the movement of a neighbour, or external stimuli such as the approach of a predator.
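The shoal example can be made concrete with a minimal sketch. Everything in it is an illustrative assumption rather than a model of real fish: the shoal is a single row, each fish sees only one position either side, and the only rule is "turn if you see the shark or a neighbour that has already turned". The names `step` and `simulate` are hypothetical.

```python
def step(headings, shark_pos, sight=1):
    """Apply the local rule once to every fish, using the previous state."""
    new = list(headings)
    for i in range(len(headings)):
        sees_shark = abs(shark_pos - i) <= sight
        # Neighbours within sight (the slice includes the fish itself).
        neighbours = headings[max(0, i - sight): i + sight + 1]
        if sees_shark or -1 in neighbours:
            new[i] = -1  # turn away
    return new


def simulate(n_fish, shark_pos, steps):
    """Start with every fish heading +1 and record each generation."""
    headings = [1] * n_fish
    history = [headings]
    for _ in range(steps):
        headings = step(headings, shark_pos)
        history.append(headings)
    return history


history = simulate(n_fish=6, shark_pos=6, steps=6)
for generation in history:
    print(generation)
```

Only the fish nearest the shark ever sees it, yet the whole shoal ends up turned: the group behaviour is fully accounted for by the rule each individual follows, which is what makes this emergence weak.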

This kind of weak emergence cannot explain the activity of even the simplest nervous systems, never mind the working of your brain, so we fall back on strong emergence, where the phenomenon that emerges cannot be explained by the activity of the individual components. You and the page you are reading this on are both made of atoms, but your ability to read and understand comes from features that emerge through atoms in your body forming higher-level structures, such as neurons and their patterns of firing – not simply from atoms interacting.

Strong emergence has recently been criticised by some neuroscientists as risking “metaphysical implausibility”, because there is no evident causal mechanism, nor any single explanation, of how emergence occurs. Like Gregory, these critics claim that the reliance on emergence to explain complex phenomena suggests that neuroscience is at a key historical juncture, similar to that which saw the slow transformation of alchemy into chemistry. But faced with the mysteries of neuroscience, emergence is often our only resort. And it is not so daft – the amazing properties of deep-learning programmes, which at root cannot be explained by the people who design them, are essentially emergent properties.

Interestingly, while some neuroscientists are discombobulated by the metaphysics of emergence, researchers in artificial intelligence revel in the idea, believing that the sheer complexity of modern computers, or of their interconnectedness through the internet, will lead to what is dramatically known as the singularity. Machines will become conscious.

There are plenty of fictional explorations of this possibility (in which things often end badly for all concerned), and the subject certainly excites the public’s imagination, but there is no reason, beyond our ignorance of how consciousness works, to suppose that it will happen in the near future. In principle, it must be possible, because the working hypothesis is that mind is a product of matter, which we should therefore be able to mimic in a device. But the scale of complexity of even the simplest brains dwarfs any machine we can currently envisage. For decades – centuries – to come, the singularity will be the stuff of science fiction, not science.

A related view of the nature of consciousness turns the brain-as-computer metaphor into a strict analogy. Some researchers view the mind as a kind of operating system that is implemented on neural hardware, with the implication that our minds, seen as a particular computational state, could be uploaded on to some device or into another brain. In the way this is generally presented, this is wrong, or at best hopelessly naive.

The materialist working hypothesis is that brains and minds, in humans and maggots and everything else, are identical. Neurons and the processes they support – including consciousness – are the same thing. In a computer, software and hardware are separate; however, our brains and our minds consist of what can best be described as wetware, in which what is happening and where it is happening are completely intertwined.

Imagining that we can repurpose our nervous system to run different programmes, or upload our mind to a server, might sound scientific, but lurking behind this idea is a non-materialist view going back to Descartes and beyond. It implies that our minds are somehow floating about in our brains, and could be transferred into a different head or replaced by another mind. It would be possible to give this idea a veneer of scientific respectability by posing it in terms of reading the state of a set of neurons and writing that to a new substrate, organic or artificial.

But to even begin to imagine how that might work in practice, we would need an understanding of neuronal function that is far beyond anything we can currently envisage, along with unimaginably vast computational power and a simulation that precisely mimicked the structure of the brain in question. For this to be possible even in principle, we would first need to be able to fully model the activity of a nervous system capable of holding a single state, never mind a thought. We are so far away from taking this first step that the possibility of uploading your mind can be dismissed as a fantasy, at least until the far future.

For the moment, the brain-as-computer metaphor retains its dominance, although there is disagreement about how strong a metaphor it is. In 2015, the roboticist Rodney Brooks chose the computational metaphor of the brain as his pet hate in his contribution to a collection of essays entitled This Idea Must Die. Less dramatically, but drawing similar conclusions, two decades earlier the historian S Ryan Johansson argued that “endlessly debating the truth or falsity of a metaphor like ‘the brain is a computer’ is a waste of time. The relationship proposed is metaphorical, and it is ordering us to do something, not trying to tell us the truth.”

On the other hand, the US expert in artificial intelligence, Gary Marcus, has made a robust defence of the computer metaphor: “Computers are, in a nutshell, systematic architectures that take inputs, encode and manipulate information, and transform their inputs into outputs. Brains are, so far as we can tell, exactly that. The real question isn’t whether the brain is an information processor, per se, but rather how do brains store and encode information, and what operations do they perform over that information, once it is encoded.”

Marcus went on to argue that the task of neuroscience is to “reverse engineer” the brain, much as one might study a computer, examining its components and their interconnections to decipher how it works. This suggestion has been around for some time. In 1989, Crick recognised its attractiveness, but felt it would fail, because of the brain’s complex and messy evolutionary history – he dramatically claimed it would be like trying to reverse engineer a piece of “alien technology”. Attempts to find an overall explanation of how the brain works that flow logically from its structure would be doomed to failure, he argued, because the starting point is almost certainly wrong – there is no overall logic.

Reverse engineering a computer is often used as a thought experiment to show how, in principle, we might understand the brain. Inevitably, these thought experiments are successful, encouraging us to pursue this way of understanding the squishy organs in our heads. But in 2017, a pair of neuroscientists decided to actually do the experiment on a real computer chip, which had a real logic and real components with clearly designed functions. Things did not go as expected.

The duo – Eric Jonas and Konrad Paul Kording – employed the very techniques they normally used to analyse the brain and applied them to the MOS 6507 processor found in computers from the late 70s and early 80s that enabled those machines to run video games such as Donkey Kong and Space Invaders.

First, they obtained the connectome of the chip by scanning the 3510 enhancement-mode transistors it contained and simulating the device on a modern computer (including running the games programmes for 10 seconds). They then used the full range of neuroscientific techniques, such as “lesions” (removing transistors from the simulation), analysing the “spiking” activity of the virtual transistors and studying their connectivity, observing the effect of various manipulations on the behaviour of the system, as measured by its ability to launch each of the games.
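To give a feel for what a simulated “lesion” involves, here is a minimal sketch on a toy circuit. It is not the chip or the code used in the study: the circuit is a one-bit full adder chosen for illustration, and the gate names and the functions `run` and `behaviours_broken` are hypothetical. Each gate is silenced in turn and the outputs are compared with those of the intact circuit.

```python
from itertools import product

# Toy circuit (an assumption for illustration): a one-bit full adder.
# Each gate name maps to (operation, input names).
CIRCUIT = {
    "x1": ("xor", ("a", "b")),
    "a1": ("and", ("a", "b")),
    "sum": ("xor", ("x1", "cin")),
    "a2": ("and", ("x1", "cin")),
    "carry": ("or", ("a1", "a2")),
}
OPS = {
    "xor": lambda p, q: p ^ q,
    "and": lambda p, q: p & q,
    "or": lambda p, q: p | q,
}
ORDER = ["x1", "a1", "sum", "a2", "carry"]  # evaluation order


def run(inputs, lesioned=None):
    """Evaluate the circuit; a lesioned gate is forced to output 0."""
    values = dict(inputs)
    for name in ORDER:
        op, (p, q) = CIRCUIT[name]
        values[name] = 0 if name == lesioned else OPS[op](values[p], values[q])
    return values["sum"], values["carry"]


def behaviours_broken(lesioned):
    """Return the outputs that differ from the intact circuit on any input."""
    broken = set()
    for a, b, cin in product((0, 1), repeat=3):
        inputs = {"a": a, "b": b, "cin": cin}
        healthy = run(inputs)
        damaged = run(inputs, lesioned)
        if healthy[0] != damaged[0]:
            broken.add("sum")
        if healthy[1] != damaged[1]:
            broken.add("carry")
    return broken


for gate in ORDER:
    print(gate, sorted(behaviours_broken(gate)))
```

The resulting table says which gates each output depends on, but nothing about how the addition is carried out. That gap between a map of necessity and an explanation of function is the kind of limitation the experiment was designed to probe.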

Despite deploying this powerful analytical armoury, and despite the fact that there is a clear explanation for how the chip works (it has “ground truth”, in technospeak), the study failed to detect the hierarchy of information processing that occurs inside the chip. As Jonas and Kording put it, the techniques fell short of producing “a meaningful understanding”. Their conclusion was bleak: “Ultimately, the problem is not that neuroscientists could not understand a microprocessor, the problem is that they would not understand it given the approaches they are currently taking.”

This sobering outcome suggests that, despite the attractiveness of the computer metaphor and the fact that brains do indeed process information and somehow represent the external world, we still need to make significant theoretical breakthroughs in order to make progress. Even if our brains were designed along logical lines, which they are not, our present conceptual and analytical tools would be completely inadequate for the task of explaining them. This does not mean that simulation projects are pointless – by modelling (or simulating) we can test hypotheses and, by linking the model with well-established systems that can be precisely manipulated, we can gain insight into how real brains function. This is an extremely powerful tool, but a degree of modesty is required when it comes to the claims that are made for such studies, and realism is needed with regard to the difficulties of drawing parallels between brains and artificial systems.

The processing of neural codes is generally seen as a series of linear steps – like a line of dominoes falling one after another. The brain, however, consists of highly complex neural networks that are interconnected, and which are linked to the outside world to effect action. Focusing on sets of sensory and processing neurons without linking these networks to the behaviour of the animal misses the point of all that processing.

By viewing the brain as a computer that passively responds to inputs and processes data, we forget that it is an active organ, part of a body that is intervening in the world, and which has an evolutionary past that has shaped its structure and function. This view of the brain has been outlined by the Hungarian neuroscientist György Buzsáki in his recent book The Brain from Inside Out. According to Buzsáki, the brain is not simply passively absorbing stimuli and representing them through a neural code, but rather is actively searching through alternative possibilities to test various options. His conclusion – following scientists going back to the 19th century – is that the brain does not represent information: it constructs it.

The metaphors of neuroscience – computers, coding, wiring diagrams and so on – are inevitably partial. That is the nature of metaphors, which have been intensely studied by philosophers of science and by scientists, as they seem to be so central to the way scientists think. But metaphors are also rich and allow insight and discovery. There will come a point when the understanding they allow will be outweighed by the limits they impose, but in the case of computational and representational metaphors of the brain, there is no agreement that such a moment has arrived. From a historical point of view, the very fact that this debate is taking place suggests that we may indeed be approaching the end of the computational metaphor. What is not clear, however, is what would replace it.

Scientists often get excited when they realise how their views have been shaped by the use of metaphor, and grasp that new analogies could alter how they understand their work, or even enable them to devise new experiments. Coming up with those new metaphors is challenging – most of those used in the past with regard to the brain have been related to new kinds of technology. This could imply that the appearance of new and insightful metaphors for the brain and how it functions hinges on future technological breakthroughs, on a par with hydraulic power, the telephone exchange or the computer. There is no sign of such a development; despite the latest buzzwords that zip about – blockchain, quantum supremacy (or quantum anything), nanotech and so on – it is unlikely that these fields will transform either technology or our view of what brains do.

One sign that our metaphors may be losing their explanatory power is the widespread assumption that much of what nervous systems do, from simple systems right up to the appearance of consciousness in humans, can only be explained as emergent properties – things that you cannot predict from an analysis of the components, but which emerge as the system functions.

In 1981, the British psychologist Richard Gregory argued that the reliance on emergence as a way of explaining brain function indicated a problem with the theoretical framework: “The appearance of ‘emergence’ may well be a sign that a more general (or at least different) conceptual scheme is needed … It is the role of good theories to remove the appearance of emergence. (So explanations in terms of emergence are bogus.)”

This overlooks the fact that there are different kinds of emergence: weak and strong. Weak emergent features, such as the movement of a shoal of tiny fish in response to a shark, can be understood in terms of the rules that govern the behaviour of their component parts. In such cases, apparently mysterious group behaviours are based on the behaviour of individuals, each of which is responding to factors such as the movement of a neighbour, or external stimuli such as the approach of a predator.
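A toy model shows what weak emergence looks like in practice. The sketch below is an illustration only, with invented parameters: fish sit in a line, and each obeys two local rules, turning away if the predator is within its detection radius or if an adjacent fish had already turned on the previous step. No rule mentions the shoal as a whole.

```python
def startle_wave(n_fish=10, predator_pos=-1.0, detect_radius=2.5, steps=12):
    """Return, for each fish, the time step at which it first turns away.

    Fish sit at positions 0, 1, ..., n_fish - 1 on a line. A fish that never
    turns within the simulated steps is reported as None.
    """
    turned_at = [None] * n_fish
    for t in range(steps):
        # Snapshot of the previous step, so a turn spreads one fish per step.
        previously_turned = [s is not None for s in turned_at]
        for i in range(n_fish):
            if turned_at[i] is not None:
                continue
            sees_predator = abs(i - predator_pos) <= detect_radius
            neighbour_turned = any(
                previously_turned[j] for j in (i - 1, i + 1) if 0 <= j < n_fish
            )
            if sees_predator or neighbour_turned:
                turned_at[i] = t
    return turned_at
```

With the default values, only the two fish nearest the predator detect it, yet `startle_wave()` returns `[0, 0, 1, 2, 3, 4, 5, 6, 7, 8]`: a wave of turning sweeps through the whole group, and every step of it can be traced back to the rules followed by individuals.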

This kind of weak emergence cannot explain the activity of even the simplest nervous systems, never mind the working of your brain, so we fall back on strong emergence, where the phenomenon that emerges cannot be explained by the activity of the individual components. You and the page you are reading this on are both made of atoms, but your ability to read and understand comes from features that emerge through atoms in your body forming higher-level structures, such as neurons and their patterns of firing – not simply from atoms interacting.

Strong emergence has recently been criticised by some neuroscientists as risking “metaphysical implausibility”, because there is no evident causal mechanism, nor any single explanation, of how emergence occurs. Like Gregory, these critics claim that the reliance on emergence to explain complex phenomena suggests that neuroscience is at a key historical juncture, similar to that which saw the slow transformation of alchemy into chemistry. But faced with the mysteries of neuroscience, emergence is often our only resort. And it is not so daft – the amazing properties of deep-learning programmes, which at root cannot be explained by the people who design them, are essentially emergent properties.

Interestingly, while some neuroscientists are discombobulated by the metaphysics of emergence, researchers in artificial intelligence revel in the idea, believing that the sheer complexity of modern computers, or of their interconnectedness through the internet, will lead to what is dramatically known as the singularity. Machines will become conscious.

There are plenty of fictional explorations of this possibility (in which things often end badly for all concerned), and the subject certainly excites the public’s imagination, but there is no reason, beyond our ignorance of how consciousness works, to suppose that it will happen in the near future. In principle, it must be possible, because the working hypothesis is that mind is a product of matter, which we should therefore be able to mimic in a device. But the scale of complexity of even the simplest brains dwarfs any machine we can currently envisage. For decades – centuries – to come, the singularity will be the stuff of science fiction, not science.

A related view of the nature of consciousness turns the brain-as-computer metaphor into a strict analogy. Some researchers view the mind as a kind of operating system that is implemented on neural hardware, with the implication that our minds, seen as a particular computational state, could be uploaded on to some device or into another brain. In the way this is generally presented, this is wrong, or at best hopelessly naive.

The materialist working hypothesis is that brains and minds, in humans and maggots and everything else, are identical. Neurons and the processes they support – including consciousness – are the same thing. In a computer, software and hardware are separate; however, our brains and our minds consist of what can best be described as wetware, in which what is happening and where it is happening are completely intertwined.

Imagining that we can repurpose our nervous system to run different programmes, or upload our mind to a server, might sound scientific, but lurking behind this idea is a non-materialist view going back to Descartes and beyond. It implies that our minds are somehow floating about in our brains, and could be transferred into a different head or replaced by another mind. It would be possible to give this idea a veneer of scientific respectability by posing it in terms of reading the state of a set of neurons and writing that to a new substrate, organic or artificial.

But to even begin to imagine how that might work in practice, we would need an understanding of neuronal function that is far beyond anything we can currently envisage, along with unimaginably vast computational power and a simulation that precisely mimicked the structure of the brain in question. For this to be possible even in principle, we would first need to be able to fully model the activity of a nervous system capable of holding a single state, never mind a thought. We are so far away from taking this first step that the possibility of uploading your mind can be dismissed as a fantasy, at least until the far future.

For the moment, the brain-as-computer metaphor retains its dominance, although there is disagreement about how strong a metaphor it is. In 2015, the roboticist Rodney Brooks chose the computational metaphor of the brain as his pet hate in his contribution to a collection of essays entitled This Idea Must Die. Less dramatically, but drawing similar conclusions, two decades earlier the historian S Ryan Johansson argued that “endlessly debating the truth or falsity of a metaphor like ‘the brain is a computer’ is a waste of time. The relationship proposed is metaphorical, and it is ordering us to do something, not trying to tell us the truth.”

On the other hand, the US expert in artificial intelligence, Gary Marcus, has made a robust defence of the computer metaphor: “Computers are, in a nutshell, systematic architectures that take inputs, encode and manipulate information, and transform their inputs into outputs. Brains are, so far as we can tell, exactly that. The real question isn’t whether the brain is an information processor, per se, but rather how do brains store and encode information, and what operations do they perform over that information, once it is encoded.”

Current ‘reverse engineering’ techniques cannot deliver a proper understanding of an Atari console chip, let alone of a human brain. Photograph: Radharc Images/Alamy

Even something as apparently straightforward as working out the storage capacity of a brain falls apart when it is attempted. Such calculations are fraught with conceptual and practical difficulties. Brains are natural, evolved phenomena, not digital devices. Although it is often argued that particular functions are tightly localised in the brain, as they are in a machine, this certainty has been repeatedly challenged by new neuroanatomical discoveries of unsuspected connections between brain regions, or amazing examples of plasticity, in which people can function normally without bits of the brain that are supposedly devoted to particular behaviours.

In reality, the very structures of a brain and a computer are completely different. In 2006, Larry Abbott wrote an essay titled “Where are the switches on this thing?”, in which he explored the potential biophysical bases of that most elementary component of an electronic device – a switch. Although inhibitory synapses can change the flow of activity by rendering a downstream neuron unresponsive, such interactions are relatively rare in the brain.

A neuron is not like a binary switch that can be turned on or off, forming a wiring diagram. Instead, neurons respond in an analogue way, changing their activity in response to changes in stimulation. The nervous system alters its working by changes in the patterns of activation in networks of cells composed of large numbers of units; it is these networks that channel, shift and shunt activity. Unlike any device we have yet envisaged, the nodes of these networks are not stable points like transistors or valves, but sets of neurons – hundreds, thousands, tens of thousands strong – that can respond consistently as a network over time, even if the component cells show inconsistent behaviour.
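The contrast between unreliable cells and a reliable network can be sketched numerically. The following is a deliberately crude illustration, not a model of real neurons: each unit is assumed to fire with a probability that rises smoothly with the stimulus (a logistic curve chosen purely for convenience), and the population size and seeds are arbitrary.

```python
import math
import random


def unit_fires(stimulus, rng):
    """One unreliable unit: fires (1) or stays silent (0) on a given trial."""
    p_fire = 1.0 / (1.0 + math.exp(-stimulus))  # graded, not simply on or off
    return 1 if rng.random() < p_fire else 0


def population_rate(stimulus, n_units=5000, seed=0):
    """Fraction of units that fire in one trial of the whole population."""
    rng = random.Random(seed)
    return sum(unit_fires(stimulus, rng) for _ in range(n_units)) / n_units
```

Any single unit gives a different answer from trial to trial, but `population_rate` barely moves when the seed changes, and it rises steadily as the stimulus grows. The consistency belongs to the set of units, not to any one of them.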

Understanding even the simplest of such networks is currently beyond our grasp. Eve Marder, a neuroscientist at Brandeis University, has spent much of her career trying to understand how a few dozen neurons in the lobster’s stomach produce a rhythmic grinding. Despite vast amounts of effort and ingenuity, we still cannot predict the effect of changing one component in this tiny network that is not even a simple brain.

This is the great problem we have to solve. On the one hand, brains are made of neurons and other cells, which interact together in networks, the activity of which is influenced not only by synaptic activity, but also by various factors such as neuromodulators. On the other hand, it is clear that brain function involves complex dynamic patterns of neuronal activity at a population level. Finding the link between these two levels of analysis will be a challenge for much of the rest of the century, I suspect. And the prospect of properly understanding what is happening in cases of mental illness is even further away.

Not all neuroscientists are pessimistic – some confidently claim that the application of new mathematical methods will enable us to understand the myriad interconnections in the human brain. Others – like myself – favour studying animals at the other end of the scale, focusing our attention on the tiny brains of worms or maggots and employing the well-established approach of seeking to understand how a simple system works and then applying those lessons to more complex cases. Many neuroscientists, if they think about the problem at all, simply consider that progress will inevitably be piecemeal and slow, because there is no grand unified theory of the brain lurking around the corner.

There are many alternative scenarios about how the future of our understanding of the brain could play out: perhaps the various computational projects will come good and theoreticians will crack the functioning of all brains, or the connectomes will reveal principles of brain function that are currently hidden from us. Or a theory will somehow pop out of the vast amounts of imaging data we are generating. Or we will slowly piece together a theory (or theories) out of a series of separate but satisfactory explanations. Or by focusing on simple neural network principles we will understand higher-level organisation. Or some radical new approach integrating physiology and biochemistry and anatomy will shed decisive light on what is going on. Or new comparative evolutionary studies will show how other animals are conscious and provide insight into the functioning of our own brains. Or unimagined new technology will change all our views by providing a radical new metaphor for the brain. Or our computer systems will provide us with alarming new insight by becoming conscious. Or a new framework will emerge from cybernetics, control theory, complexity and dynamical systems theory, semantics and semiotics. Or we will accept that there is no theory to be found because brains have no overall logic, just adequate explanations of each tiny part, and we will have to be satisfied with that.

Friday, 29 June 2018

Would basic incomes or basic jobs be better when robots take over?

Tim Harford in The Financial Times

We all seem to be worried about the robots taking over these days — and they don’t need to take all the jobs to be horrendously disruptive. A situation where 30 to 40 per cent of the working age population was economically useless would be tough enough. They might be taxi drivers replaced by a self-driving car, hedge fund managers replaced by an algorithm, or financial journalists replaced by a chatbot on Instagram.

By “economically useless” I mean people unable to secure work at anything approaching a living wage. For all their value as citizens, friends, parents, and their intrinsic worth as human beings, they would simply have no role in the economic system.

I’m not sure how likely this is — I would bet against it happening soon — but it is never too early to prepare for what might be a utopia, or a catastrophe. And an intriguing debate has broken out over how to look after disadvantaged workers both now and in this robot future.

Should everyone be given free money? Or should everyone receive the guarantee of a decently-paid job? Various non-profits, polemicists and even Silicon Valley types have thrown their weight behind the “free money” idea in the form of a universal basic income, while US senators including Bernie Sanders, Elizabeth Warren, Cory Booker and Kirsten Gillibrand have been pushing for trials of a jobs guarantee.

Basic income or basic jobs? There are countless details for the policy wonks to argue over, but what interests me at the moment is the psychology. In a world of mass technological unemployment, would either of these two remedies make us happy?

Author Rutger Bregman describes a basic income in glowing terms, as “venture capital for everyone”. He sees the cash as liberation from abusive working conditions, and a potential launch pad to creative and fulfilling projects.

Yet the economist Edward Glaeser views a basic income as a “horror” for the recipients. “You’re telling them their lives are not going to be ones of contribution,” he remarked in a recent interview with the EconTalk podcast. “Their lives aren’t going to be producing a product that anyone values.”

Surely both of them have a point. A similar disagreement exists regarding the psychological effect of a basic jobs guarantee, with advocates emphasising the dignity of work, while sceptics fear a Sisyphean exercise in punching the clock to do a fake job.

So what does the evidence suggest? Neither a jobs guarantee nor a basic income has been tried at scale in a modern economy, so we are forced to make educated guesses.

We know that joblessness makes us miserable. In the words of Warwick university economist Andrew Oswald: “There is overwhelming statistical evidence that involuntary unemployment produces extreme unhappiness.”

What’s more, adds Prof Oswald, most of this unhappiness seems to be because of a loss of prestige, identity or self-worth. Money is only a small part of it. This suggests that the advocates of a jobs guarantee may be on to something.

In this context, it’s worth noting two recent studies of lottery winners in the Netherlands and Sweden, both of which find that big winners tend to scale back their hours rather than quitting their jobs. We seem to find something in our jobs worth holding on to.

Yet many of the trappings of work frustrate us. Researchers led by Daniel Kahneman and Alan Krueger asked people to reflect on the emotions they felt as they recalled episodes in the previous day. The most negative episodes were the evening commute, the morning commute, and work itself. Things were better if people got to chat to colleagues while working, but (unsurprisingly) they were worse for low status jobs, or jobs for which people felt overqualified. None of which suggests that people will enjoy working on a guaranteed-job scheme.

Psychologists have found that we like and benefit from feeling in control. That is a mark in favour of a universal basic income: being unconditional, it is likely to enhance our feelings of control. The money would be ours, by right, to do with as we wish. A job guarantee might work the other way: it makes money conditional on punching the clock.

On the other hand (again!), we like to keep busy. Harvard researchers Matthew Killingsworth and Daniel Gilbert have found that “a wandering mind is an unhappy mind”. And social contact is generally good for our wellbeing. Maybe guaranteed jobs would help keep us active and socially connected.

The truth is, we don’t really know. I would hesitate to pronounce with confidence about which policy might ultimately be better for our wellbeing. It is good to see that the more thoughtful advocates of either policy — or both policies simultaneously — are asking for large-scale trials to learn more.

Meanwhile, I am confident that we would all benefit from an economy that creates real jobs which are sociable, engaging, and decently paid. Grand reforms of the welfare system notwithstanding, none of us should be giving up on making work work better.

Sunday, 18 February 2018

Robots + Capital - The redundancy of human beings

Tabish Khair in The Hindu

Human beings are being made redundant by something they created. This is not a humanoid, robot, or computer but money as capital.

We have all read stories, or seen films, about robots taking over. How, some time in the future, human beings will be marginalised, effectively replaced by machines, real or virtual. Common to these stories is the trope of the world taken over by something constructed of inert material, something mechanical and ‘heartless’. Also common to these stories is the idea that this will happen in the future.

What if I tell you that it has already happened? The future is here!

The culprit that humans created

In fact, the future has been building up for some decades. Roughly from the 1970s onwards, human beings have been increasingly made redundant by something they created, and that was once of use to them. Except that this ‘something’ is not a humanoid, robot, or even a computer; it is money. Or, more precisely, it is money as capital.

It was precipitated in 1973, when floating exchange rates were introduced. As economist Samir Amin notes, this was the logical result of the “concomitance of the U.S. deficit (leading to an excess of dollars available on the market) and the crisis of productive investment” which had produced “a mass of floating capital with no place to go.” With floating exchange rates, this excess of dollars could be plunged into sheer financial speculation across national borders. Financial speculation had always been a part of capitalism, but floating exchange rates dissolved the ties between capital, goods (trade and production) and labour. Financial speculation gradually floated free of human labour and even of money, as a medium of exchange. If I were a theorist of capitalism of the Adam Smith variety, I would say that capitalism, as we knew it (and still, erroneously, imagine it), started dying in 1973.

Amin goes on to stress the consequences of this: The ratio between hedging operations on the one side and production and international trading on the other rose to 28:1 by 2002 — “a disproportion that has been constantly growing for about the last twenty years and which has never been witnessed in the entire history of capitalism.” In other words, while world trade was valued at $2 billion around 2005, international capital movements were estimated at $50 billion.

How can there be capital movements in such excess of trade? Adam Smith would have failed to understand it. Karl Marx, who feared something like this, would have failed to imagine its scale.

This is what has happened: capital, which was always the abstract logic of money, has riven free of money as a medium of exchange. It no longer needs anything to exchange — and, hence, anyone to produce — in order to grow. (I am exaggerating, but only a bit.)

Theorists have argued that money is a social relation and a medium of exchange. That is not true of most capital today, which need not be ploughed back into any kind of production, trade, labour or even services. It can just be moved around as numbers. This is what day traders do. They do not look at company balance sheets or supply-demand statistics; they simply look at numbers on the computer screen.

This is what explains the dichotomy — most obvious in Donald Trump’s U.S., but not absent in places like the U.K., France or India — between the rhetoric of politicians and their actual actions. Politicians might come to power by promising to ‘drain the swamp’, but what they do, once assured of political power, is to partake in the monopoly of finance capital. This abstract capital is the ‘robot’ — call it Robital — that has marginalised human beings.

I am not making a Marxist point about capital under classical capitalism: despite its tendency towards exploitation, this was still largely invested in human labour. This is not the case any longer. Finance capital does not really need humans — apart from the 1% that own most of it, and another 30% or so of necessary service providers, including IT ones, whose numbers should be expected to steadily shrink.

Robotisation has already taken place: it is only its physical enactment (actual robots) that is still building up. Robots, as replacements for human beings, are the consequence of the abstract nature of finance capital. Robotised agriculture and office robots are a consequence of this. If most humans are redundant and most capital is in the hands of a 1% superclass, it is inevitable that this capital will be invested in creating machines that can make the elite even less dependent on other human beings.

The underlying cause

My American friends wonder about the blindness of Republican politicians who refuse to provide medical support to ordinary Americans and even dismantle the few supports that exist. My British friends talk of the slow spread of homelessness in the U.K. My Indian friends worry about matters such as thousands of farmer suicides. The working middle class crumbles in most countries.

Here is the underlying cause of all of this: the redundancy of human beings, because capital can now replicate itself, endlessly, without being forced back into human labour and trade. We are entering an age where visible genocides — as in Syria or Yemen — might be matched by invisible ones, such as the unremarked deaths of the homeless, the deprived and the marginal.

Robital is here.

Sunday, 29 October 2017

From climate change to robots: what politicians aren’t telling us

Simon Kuper in The Financial Times

On US television news this autumn, wildfires and hurricanes have replaced terrorism and — mostly — even mass shootings as primetime content. Climate change is making natural disasters more frequent, and more Americans now live in at-risk areas. But meanwhile, Donald Trump argues on Twitter about what he supposedly said to a soldier’s widow. So far, Trump is dangerous less because of what he says (hot air) or does (little) than because of the issues he ignores.

He’s not alone: politics in many western countries has become a displacement activity. Most politicians bang on about identity while ignoring automation, climate change and the imminent revolution in medicine. They talk more about the 1950s than the 2020s. This is partly because they want to distract voters from real problems, and partly because today’s politicians tend to be lawyers, entertainers and ex-journalists who know less about tech than the average 14-year-old. (Trump said in a sworn deposition in 2007 that he didn’t own a computer; his secretary sent his emails.) But the new forces are already transforming politics.

Ironically, given the volume of American climate denial, the US looks like becoming the first western country to be hit by climate change. Each new natural disaster will prompt political squabbles over whether Washington should bail out the stricken region. At-risk cities such as Miami and New Orleans will gradually lose appeal as the risks become uninsurable. If you buy an apartment on Miami Beach now, are you confident it will survive another 30 years undamaged? And who will want to buy it from you in 2047? Miami could fade as Detroit did.

American climate denial may fade too, as tech companies displace Big Oil as the country’s chief lobbyists. Already in the first half of this year, Amazon outspent Exxon and Walmart on lobbying. Facebook, now taking a kicking over fake news, will lobby its way back. Meanwhile, northern Europe, for some years at least, will benefit from its historical unique selling point: its mild and rainy climate. Its problem will be that millions of Africans will try to move there.