Nadeem F Paracha in The Dawn

In the last few years, my research, in areas such as religious extremism, historical distortions in school textbooks, the culture of conspiracy theories and reactionary attitudes towards science, has produced findings that are a lot more universal than one suspected.

This century’s second decade (2010-2020) saw some startling political and social tendencies in Europe and the US, which mirrored those in developing countries. Before that decade, these tendencies had been repeatedly commented upon in the West, as if they were entirely specific to poorer regions. Even though many Western historians, while discussing the presence of religious extremism, superstition or political upheavals in developing countries, agreed that these existed in developed countries as well, they insisted that these were largely present in their own countries only during their teething years.

In 2012, at a conference in London, I heard a British political scientist and an American historian emphasise that the problems that developing countries face (religiously-motivated violence, a suspect disposition towards science, and continual political disruption) were present at one time in developed countries as well, but had been overcome through an evolutionary process, and by the construction of political and economic systems that were self-correcting in times of crisis. What these two gentlemen were suggesting was that most developing countries were still at a stage that the developed countries had been at two hundred years or so ago.

However, eight years after that conference, Europe and the US, it seems, have been flung back two hundred years into the past. Mainstream political structures there have been invaded by firebrand right-wing populists. Dogmatic ‘cultural warriors’ from the left and the right are battling it out to define what is ‘good’ and what is ‘evil’, in the process wrecking the carefully constructed pillars of the Enlightenment era on which their nations’ whole existential meaning rests. The most outlandish conspiracy theories have migrated from the edges of the lunatic fringe into the mainstream, and science is being perceived as a demonic force out to destroy faith.

Take, for instance, the practice of authoring distorted textbooks. Over the years, some excellent research cropped up in Pakistan and India that systematically exposed how historical distortions and religious biases in textbooks have contributed (and still are contributing) to episodes of bigotry in both countries. During my own research in this area, I began to notice that this problem was not restricted to developing countries alone.

In 1971, a joint study by a group of American and British historians showed that out of the 36 British and American school textbooks that they examined, no fewer than 25 contained inaccurate information and ideological bias. In 2007, the American sociologist James W. Loewen surveyed 18 American history texts and found them to be “marred by an embarrassing combination of blind patriotism, sheer misinformation, and outright lies.” He published his findings in the aptly titled book Lies My Teacher Told Me.

In 2020, 181 historians in the UK wrote an open letter demanding changes to the history section of the British Home Office’s citizenship test. The campaign was initiated by the British professor of history and archeology Frank Trentmann. A debate on the issue, through an exchange of letters between Trentmann and Stephen Parkinson, a former Home Office special adviser, was published in the August 23, 2020 issue of The Spectator. Trentmann laments that the problem lay in a combination of errors, omissions and distortions in the history section pages, which were also littered with mistakes.

Not only are historical distortions in textbooks a universal practice, but the many ways that this is done are equally universal and cut across competing ideologies. In Textbooks as Propaganda, the historian Joanna Wojdon demonstrates the methods that were used by the state in this respect in communist Poland (1944-1989).

The methods of distortion in this case were similar to the ones that were used in various former communist dictatorships such as the Soviet Union and its satellite states in Eastern Europe, and in China. The same methods in this context were also employed by totalitarian regimes in Nazi Germany, and in fascist Italy and Spain.

And if one examines the methods of distorting history textbooks, as examined by Loewen in the US and Trentmann in the UK, one can come across various similarities between how it is done in liberal democracies and how it was done in totalitarian set-ups.

I once shared this observation with an American academic in 2018. He somewhat agreed but argued that because of the Cold War (1945-1991) many democratic countries were pressed to adopt certain propaganda techniques that were originally devised by communist regimes. I tend to disagree. If this were the reason, how is one to explain the publication of the book The Menace of Nationalism in Education by Jonathan French Scott in 1926, almost 20 years before the start of the Cold War?

Scott meticulously examined history textbooks being taught in France, Germany, Britain and the US in the 1920s. It is fascinating to see how the methods used to write textbooks, described by Scott as tools of indoctrination, are quite similar to those applied in communist and fascist dictatorships, and how they are being employed in both developing as well as developed countries.

In a nutshell, no matter what ideological bent is being welded into textbooks in various countries, it has always been about altering history through engineered stories as a means of promoting particular agendas. This is done by concocting events that did not happen, altering those that did take place, or omitting events altogether.

It was Scott who most clearly understood this as a problem that is inherent in the whole idea of the nation state, which is largely constructed by clubbing people together as ‘nations’, not only within physical but also ideological boundaries.

This leaves nation states always feeling vulnerable and fearing that the glue that binds a nation together, through largely fabricated and manufactured ideas of ethnic, religious or racial homogeneity, will wear off. Thus the need is felt to keep it intact through continuous historical distortions.

Sunday, 14 February 2021

Thursday, 30 March 2017

The myth of the ‘lone wolf’ terrorist

Jason Burke in The Guardian

At around 8pm on Sunday 29 January, a young man walked into a mosque in the Sainte-Foy neighbourhood of Quebec City and opened fire on worshippers with a 9mm handgun. The imam had just finished leading the congregation in prayer when the intruder started shooting at them. He killed six and injured 19 more. The dead included an IT specialist employed by the city council, a grocer, and a science professor.

The suspect, Alexandre Bissonnette, a 27-year-old student, has been charged with six counts of murder, though not terrorism. Within hours of the attack, Ralph Goodale, the Canadian minister for public safety, described the killer as “a lone wolf”. His statement was rapidly picked up by the world’s media.

The Boston Marathon bombing carried out by Dzhokhar and Tamerlan Tsarnaev in 2013. Photograph: Dan Lampariello/Reuters

One problem facing security services, politicians and the media is that instant analysis is difficult. It takes months to unravel the truth behind a major, or even minor, terrorist operation. The demand for information from a frightened public, relayed by a febrile news media, is intense. People seek quick, familiar explanations.

Yet many of the attacks that have been confidently identified as lone-wolf operations have turned out to be nothing of the sort. Very often, terrorists who are initially labelled lone wolves have active links to established groups such as Islamic State and al-Qaida. Merah, for instance, had recently travelled to Pakistan and been trained, albeit cursorily, by a jihadi group allied with al-Qaida. Merah was also linked to a network of local extremists, some of whom went on to carry out attacks in Libya, Iraq and Syria. Bernard Cazeneuve, who was then the French interior minister, later agreed that calling Merah a lone wolf had been a mistake.

If, in cases such as Merah’s, the label of lone wolf is plainly incorrect, there are other, more subtle cases where it is still highly misleading. Another category of attackers, for instance, are those who strike alone, without guidance from formal terrorist organisations, but who have had face-to-face contact with loose networks of people who share extremist beliefs. The Exeter restaurant bomber, dismissed as an unstable loner, was actually in contact with a circle of local militant sympathisers before his attack. (They have never been identified.) The killers of Lee Rigby had been on the periphery of extremist movements in the UK for years, appearing at rallies of groups such as the now proscribed al-Muhajiroun, run by Anjem Choudary, a preacher convicted of terrorist offences in 2016 who is reported to have “inspired” up to 100 British militants.

A third category is made up of attackers who strike alone, after having had close contact online, rather than face-to-face, with extremist groups or individuals. A wave of attackers in France last year were, at first, wrongly seen as lone wolves “inspired” rather than commissioned by Isis. It soon emerged that the individuals involved, such as the two teenagers who killed a priest in front of his congregation in Normandy, had been recruited online by a senior Isis militant. In three recent incidents in Germany, all initially dubbed “lone-wolf attacks”, Isis militants actually used messaging apps to direct recruits in the minutes before they attacked. “Pray that I become a martyr,” one attacker who assaulted passengers on a train with an axe and knife told his interlocutor. “I am now waiting for the train.” Then: “I am starting now.”

Very often, what appear to be the clearest lone-wolf cases are revealed to be more complex. Even the strange case of the man who killed 86 people with a truck in Nice in July 2016 – with his background of alcohol abuse, casual sex and lack of apparent interest in religion or radical ideologies – may not be a true lone wolf. Eight of his friends and associates have been arrested and police are investigating his potential links to a broader network.

What research does show is that we may be more likely to find lone wolves among far-right extremists than among their jihadi counterparts. Though even in those cases, the term still conceals more than it reveals.

The murder of the Labour MP Jo Cox, days before the EU referendum, by a 52-year-old called Thomas Mair, was the culmination of a steady intensification of rightwing extremist violence in the UK that had been largely ignored by the media and policymakers. According to police, on several occasions attackers came close to causing more casualties in a single operation than jihadis had ever inflicted. The closest call came in 2013 when Pavlo Lapshyn, a Ukrainian PhD student in the UK, planted a bomb outside a mosque in Tipton, West Midlands. Fortunately, Lapshyn had got his timings wrong and the congregation had yet to gather when the device exploded. Embedded in the trunks of trees surrounding the building, police found some of the 100 nails Lapshyn had added to the bomb to make it more lethal.

Lapshyn was a recent arrival, but the UK has produced numerous homegrown far-right extremists in recent years. One was Martyn Gilleard, who was sentenced to 16 years for terrorism and child pornography offences in 2008. When officers searched his home in Goole, East Yorkshire, they found knives, guns, machetes, swords, axes, bullets and four nail bombs. A year later, Ian Davison became the first Briton convicted under new legislation dealing with the production of chemical weapons. Davison was sentenced to 10 years in prison for manufacturing ricin, a lethal biological poison made from castor beans. His aim, the court heard, was “the creation of an international Aryan group who would establish white supremacy in white countries”.

Lapshyn, Gilleard and Davison were each described as lone wolves by police officers, judges and journalists. Yet even a cursory survey of their individual stories undermines this description. Gilleard was the local branch organiser of a neo-Nazi group, while Davison founded the Aryan Strike Force, the members of which went on training days in Cumbria where they flew swastika flags.

Thomas Mair, who was also widely described as a lone wolf, does appear to have been an authentic loner, yet his involvement in rightwing extremism goes back decades. In May 1999, the National Alliance, a white-supremacist organisation in West Virginia, sent Mair manuals that explained how to construct bombs and assemble homemade pistols. Seventeen years later, when police raided his home after the murder, they found stacks of far-right literature, Nazi memorabilia and cuttings on Anders Breivik, the Norwegian terrorist who murdered 77 people in 2011.

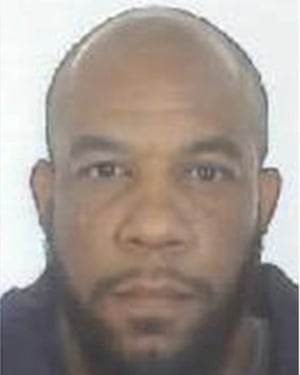

Westminster terrorist Khalid Masood. Photograph: Reuters

The reason that many attacks are not prevented is not because it was impossible to anticipate the perpetrator’s actions, but because someone screwed up. German law enforcement agencies were aware that the man who killed 12 in Berlin before Christmas was an Isis sympathiser and had talked about committing an attack. Repeated attempts to deport him had failed, stymied by bureaucracy, lack of resources and poor case preparation. In Britain, a parliamentary report into the killing of Lee Rigby identified a number of serious delays and potential missed opportunities to prevent it. Khalid Masood, the man who attacked Westminster last week, was identified in 2010 as a potential extremist by MI5.

But perhaps the most disquieting explanation for the ubiquity of the term is that it tells us something we want to believe. Yes, the terrorist threat now appears much more amorphous and unpredictable than ever before. At the same time, the idea that terrorists operate alone allows us to break the link between an act of violence and its ideological hinterland. It implies that the responsibility for an individual’s violent extremism lies solely with the individual themselves.

The truth is much more disturbing. Terrorism is not something you do by yourself, it is highly social. People become interested in ideas, ideologies and activities, even appalling ones, because other people are interested in them.

In his eulogy at the funeral of those killed in the mosque shooting in Quebec, the imam Hassan Guillet spoke of the alleged shooter. Over previous days details had emerged of the young man’s life. “Alexandre [Bissonnette], before being a killer, was a victim himself,” said Hassan. “Before he planted his bullets in the heads of his victims, somebody planted ideas more dangerous than the bullets in his head. Unfortunately, day after day, week after week, month after month, certain politicians, and certain reporters and certain media, poisoned our atmosphere.

“We did not want to see it …. because we love this country, we love this society. We wanted our society to be perfect. We were like some parents who, when a neighbour tells them their kid is smoking or taking drugs, answers: ‘I don’t believe it, my child is perfect.’ We don’t want to see it. And we didn’t see it, and it happened.”

“But,” he went on to say, “there was a certain malaise. Let us face it. Alexandre Bissonnette didn’t emerge from a vacuum.”

Goodale’s statement came as no surprise. In early 2017, well into the second decade of the most intense wave of international terrorism since the 1970s, the lone wolf has, for many observers, come to represent the most urgent security threat faced by the west. The term, which describes an individual actor who strikes alone and is not affiliated with any larger group, is now widely used by politicians, journalists, security officials and the general public. It is used for Islamic militant attackers and, as the shooting in Quebec shows, for killers with other ideological motivations. Within hours of the news breaking of an attack on pedestrians and a policeman in central London last week, it was used to describe the 52-year-old British convert responsible. Yet few beyond the esoteric world of terrorism analysis appear to give this almost ubiquitous term much thought.

Terrorism has changed dramatically in recent years. Attacks by groups with defined chains of command have become rarer, as the prevalence of terrorist networks, autonomous cells, and, in rare cases, individuals, has grown. This evolution has prompted a search for a new vocabulary, as it should. The label that seems to have been decided on is “lone wolves”. They are, we have been repeatedly told, “Terror enemy No 1”.

Yet using the term as liberally as we do is a mistake. Labels frame the way we see the world, and thus influence attitudes and eventually policies. Using the wrong words to describe problems that we need to understand distorts public perceptions, as well as the decisions taken by our leaders. Lazy talk of “lone wolves” obscures the real nature of the threat against us, and makes us all less safe.

The image of the lone wolf who splits from the pack has been a staple of popular culture since the 19th century, cropping up in stories about empire and exploration from British India to the wild west. From 1914 onwards, the term was popularised by a bestselling series of crime novels and films centred upon a criminal-turned-good-guy nicknamed Lone Wolf. Around that time, it also began to appear in US law enforcement circles and newspapers. In April 1925, the New York Times reported on a man who “assumed the title of ‘Lone Wolf’”, who terrorised women in a Boston apartment building. But it would be many decades before the term came to be associated with terrorism.

In the 1960s and 1970s, waves of rightwing and leftwing terrorism struck the US and western Europe. It was often hard to tell who was responsible: hierarchical groups, diffuse networks or individuals effectively operating alone. Still, the majority of actors belonged to organisations modelled on existing military or revolutionary groups. Lone actors were seen as eccentric oddities, not as the primary threat.

The modern concept of lone-wolf terrorism was developed by rightwing extremists in the US. In 1983, at a time when far-right organisations were coming under immense pressure from the FBI, a white nationalist named Louis Beam published a manifesto that called for “leaderless resistance” to the US government. Beam, who was a member of both the Ku Klux Klan and the Aryan Nations group, was not the first extremist to elaborate the strategy, but he is one of the best known. He told his followers that only a movement based on “very small or even one-man cells of resistance … could combat the most powerful government on earth”.

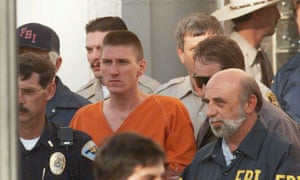

Oklahoma City bomber Timothy McVeigh leaves court, 1995. Photograph: David Longstreath/AP

Experts still argue over how much impact the thinking of Beam and other like-minded white supremacists had on rightwing extremists in the US. Timothy McVeigh, who killed 168 people with a bomb directed at a government office in Oklahoma City in 1995, is sometimes cited as an example of someone inspired by their ideas. But McVeigh had told others of his plans, had an accomplice, and had been involved for many years with rightwing militia groups. McVeigh may have thought of himself as a lone wolf, but he was not one.

One far-right figure who made explicit use of the term lone wolf was Tom Metzger, the leader of White Aryan Resistance, a group based in Indiana. Metzger is thought to have authored, or at least published on his website, a call to arms entitled “Laws for the Lone Wolf”. “I am preparing for the coming War. I am ready when the line is crossed … I am the underground Insurgent fighter and independent. I am in your neighborhoods, schools, police departments, bars, coffee shops, malls, etc. I am, The Lone Wolf!,” it reads.

From the mid-1990s onwards, as Metzger’s ideas began to spread, the number of hate crimes committed by self-styled “leaderless” rightwing extremists rose. In 1998, the FBI launched Operation Lone Wolf against a small group of white supremacists on the US west coast. A year later, Alex Curtis, a young, influential rightwing extremist and protege of Metzger, told his hundreds of followers in an email that “lone wolves who are smart and commit to action in a cold-mannered way can accomplish virtually any task before them ... We are already too far along to try to educate the white masses and we cannot worry about [their] reaction to lone wolf/small cell strikes.”

The same year, the New York Times published a long article on the new threat headlined “New Face of Terror Crimes: ‘Lone Wolf’ Weaned on Hate”. This seems to have been the moment when the idea of terrorist “lone wolves” began to migrate from rightwing extremist circles, and the law enforcement officials monitoring them, to the mainstream. In court on charges of hate crimes in 2000, Curtis was described by prosecutors as an advocate of lone-wolf terrorism.

When, more than a decade later, the term finally became a part of the everyday vocabulary of millions of people, it was in a dramatically different context.

After 9/11, lone-wolf terrorism suddenly seemed like a distraction from more serious threats. The 19 men who carried out the attacks were jihadis who had been hand picked, trained, equipped and funded by Osama bin Laden, the leader of al-Qaida, and a small group of close associates.

Although 9/11 was far from a typical terrorist attack, it quickly came to dominate thinking about the threat from Islamic militants. Security services built up organograms of terrorist groups. Analysts focused on individual terrorists only insofar as they were connected to bigger entities. Personal relations – particularly friendships based on shared ambitions and battlefield experiences, as well as tribal or familial links – were mistaken for institutional ones, formally connecting individuals to organisations and placing them under a chain of command.

This approach suited the institutions and individuals tasked with carrying out the “war on terror”. For prosecutors, who were working with outdated legislation, proving membership of a terrorist group was often the only way to secure convictions of individuals planning violence. For a number of governments around the world – Uzbekistan, Pakistan, Egypt – linking attacks on their soil to “al-Qaida” became a way to shift attention away from their own brutality, corruption and incompetence, and to gain diplomatic or material benefits from Washington. For some officials in Washington, linking terrorist attacks to “state-sponsored” groups became a convenient way to justify policies, such as the continuing isolation of Iran, or military interventions such as the invasion of Iraq. For many analysts and policymakers, who were heavily influenced by the conventional wisdom on terrorism inherited from the cold war, thinking in terms of hierarchical groups and state sponsors was comfortably familiar.

A final factor was more subtle. Attributing the new wave of violence to a single group not only obscured the deep, complex and troubling roots of Islamic militancy but also suggested the threat it posed would end when al-Qaida was finally eliminated. This was reassuring, both for decision-makers and the public.

By the middle of the decade, it was clear that this analysis was inadequate. Bombs in Bali, Istanbul and Mombasa were the work of centrally organised attackers, but the 2004 attack on trains in Madrid had been executed by a small network only tenuously connected to the al-Qaida senior leadership 4,000 miles away. For every operation like the 2005 bombings in London – which was close to the model established by the 9/11 attacks – there were more attacks that didn’t seem to have any direct link to Bin Laden, even if they might have been inspired by his ideology. There was growing evidence that the threat from Islamic militancy was evolving into something different, something closer to the “leaderless resistance” promoted by white supremacists two decades earlier.

As the 2000s drew to a close, attacks perpetrated by people who seemed to be acting alone began to outnumber all others. These events were less deadly than the spectacular strikes of a few years earlier, but the trend was alarming. In the UK in 2008, a convert to Islam with mental health problems attempted to blow up a restaurant in Exeter, though he injured no one but himself. In 2009, a US army major shot 13 dead in Fort Hood, Texas. In 2010, a female student stabbed an MP in London. None appeared, initially, to have any broader connections to the global jihadi movement.

Experts still argue over how much impact the thinking of Beam and other like-minded white supremacists had on rightwing extremists in the US. Timothy McVeigh, who killed 168 people with a bomb directed at a government office in Oklahoma City in 1995, is sometimes cited as an example of someone inspired by their ideas. But McVeigh had told others of his plans, had an accomplice, and had been involved for many years with rightwing militia groups. McVeigh may have thought of himself as a lone wolf, but he was not one.

One far-right figure who made explicit use of the term lone wolf was Tom Metzger, the leader of White Aryan Resistance, a group based in Indiana. Metzger is thought to have authored, or at least published on his website, a call to arms entitled “Laws for the Lone Wolf”. “I am preparing for the coming War. I am ready when the line is crossed … I am the underground Insurgent fighter and independent. I am in your neighborhoods, schools, police departments, bars, coffee shops, malls, etc. I am, The Lone Wolf!,” it reads.

From the mid-1990s onwards, as Metzger’s ideas began to spread, the number of hate crimes committed by self-styled “leaderless” rightwing extremists rose. In 1998, the FBI launched Operation Lone Wolf against a small group of white supremacists on the US west coast. A year later, Alex Curtis, a young, influential rightwing extremist and protege of Metzger, told his hundreds of followers in an email that “lone wolves who are smart and commit to action in a cold-mannered way can accomplish virtually any task before them ... We are already too far along to try to educate the white masses and we cannot worry about [their] reaction to lone wolf/small cell strikes.”

The same year, the New York Times published a long article on the new threat headlined “New Face of Terror Crimes: ‘Lone Wolf’ Weaned on Hate”. This seems to have been the moment when the idea of terrorist “lone wolves” began to migrate from rightwing extremist circles, and the law enforcement officials monitoring them, to the mainstream. In court on charges of hate crimes in 2000, Curtis was described by prosecutors as an advocate of lone-wolf terrorism.

When, more than a decade later, the term finally became a part of the everyday vocabulary of millions of people, it was in a dramatically different context.

After 9/11, lone-wolf terrorism suddenly seemed like a distraction from more serious threats. The 19 men who carried out the attacks were jihadis who had been hand picked, trained, equipped and funded by Osama bin Laden, the leader of al-Qaida, and a small group of close associates.

Although 9/11 was far from a typical terrorist attack, it quickly came to dominate thinking about the threat from Islamic militants. Security services built up organograms of terrorist groups. Analysts focused on individual terrorists only insofar as they were connected to bigger entities. Personal relations – particularly friendships based on shared ambitions and battlefield experiences, as well as tribal or familial links – were mistaken for institutional ones, formally connecting individuals to organisations and placing them under a chain of command.

This approach suited the institutions and individuals tasked with carrying out the “war on terror”. For prosecutors, who were working with outdated legislation, proving membership of a terrorist group was often the only way to secure convictions of individuals planning violence. For a number of governments around the world – Uzbekistan, Pakistan, Egypt – linking attacks on their soil to “al-Qaida” became a way to shift attention away from their own brutality, corruption and incompetence, and to gain diplomatic or material benefits from Washington. For some officials in Washington, linking terrorist attacks to “state-sponsored” groups became a convenient way to justify policies, such as the continuing isolation of Iran, or military interventions such as the invasion of Iraq. For many analysts and policymakers, who were heavily influenced by the conventional wisdom on terrorism inherited from the cold war, thinking in terms of hierarchical groups and state sponsors was comfortably familiar.

A final factor was more subtle. Attributing the new wave of violence to a single group not only obscured the deep, complex and troubling roots of Islamic militancy but also suggested the threat it posed would end when al-Qaida was finally eliminated. This was reassuring, both for decision-makers and the public.

By the middle of the decade, it was clear that this analysis was inadequate. Bombs in Bali, Istanbul and Mombasa were the work of centrally organised attackers, but the 2004 attack on trains in Madrid had been executed by a small network only tenuously connected to the al-Qaida senior leadership 4,000 miles away. For every operation like the 2005 bombings in London – which was close to the model established by the 9/11 attacks – there were more attacks that didn’t seem to have any direct link to Bin Laden, even if they might have been inspired by his ideology. There was growing evidence that the threat from Islamic militancy was evolving into something different, something closer to the “leaderless resistance” promoted by white supremacists two decades earlier.

As the 2000s drew to a close, attacks perpetrated by people who seemed to be acting alone began to outnumber all others. These events were less deadly than the spectacular strikes of a few years earlier, but the trend was alarming. In the UK in 2008, a convert to Islam with mental health problems attempted to blow up a restaurant in Exeter, though he injured no one but himself. In 2009, a US army major shot 13 dead in Fort Hood, Texas. In 2010, a female student stabbed an MP in London. None appeared, initially, to have any broader connections to the global jihadi movement.

In an attempt to understand how this new threat had developed, analysts raked through the growing body of texts posted online by jihadi thinkers. It seemed that one strategist had been particularly influential: a Syrian called Mustafa Setmariam Nasar, better known as Abu Musab al-Suri. In 2004, in a sprawling set of writings posted on an extremist website, Nasar had laid out a new strategy that was remarkably similar to “leaderless resistance”, although there is no evidence that he knew of the thinking of men such as Beam or Metzger. Nasar’s maxim was “Principles, not organisations”. He envisaged individual attackers and cells, guided by texts published online, striking targets across the world.

Having identified this new threat, security officials, journalists and policymakers needed a new vocabulary to describe it. The rise of the term lone wolf wasn’t wholly unprecedented. In the aftermath of 9/11, the US had passed anti-terror legislation that included a so-called “lone wolf provision”. This made it possible to pursue terrorists who were members of groups based abroad but who were acting alone in the US. Yet this provision conformed to the prevailing idea that all terrorists belonged to bigger groups and acted on orders from their superiors. The stereotype of the lone wolf terrorist that dominates today’s media landscape was not yet fully formed.

It is hard to be exact about when things changed. By around 2006, a small number of analysts had begun to refer to lone-wolf attacks in the context of Islamic militancy, and Israeli officials were using the term to describe attacks by apparently solitary Palestinian attackers. Yet these were outliers. In researching this article, I called eight counter-terrorism officials active over the last decade to ask them when they had first heard references to lone-wolf terrorism. One said around 2008, three said 2009, three 2010 and one around 2011. “The expression is what gave the concept traction,” Richard Barrett, who held senior counter-terrorist positions in MI6, the British overseas intelligence service, and the UN through the period, told me. Before the rise of the lone wolf, security officials used phrases – all equally flawed – such as “homegrowns”, “cleanskins”, “freelancers” or simply “unaffiliated”.

As successive jihadi plots were uncovered that did not appear to be linked to al-Qaida or other such groups, the term became more common. Between 2009 and 2012 it appeared in around 300 articles in major English-language news publications each year, according to the professional cuttings search engine Lexis Nexis. Since then, the term has become ubiquitous. In the 12 months before the London attack last week, the number of references to “lone wolves” exceeded the total of those over the previous three years, topping 1,000.

Lone wolves are now apparently everywhere, stalking our streets, schools and airports. Yet, as with the tendency to attribute all terrorist attacks to al-Qaida a decade earlier, this is a dangerous simplification.

In March 2012, a 23-year-old petty criminal named Mohamed Merah went on a shooting spree – a series of three attacks over a period of nine days – in south-west France, killing seven people. Bernard Squarcini, head of the French domestic intelligence service, described Merah as a lone wolf. So did the interior ministry spokesman, and, inevitably, many journalists. A year later, Lee Rigby, an off-duty soldier, was run over and hacked to death in London. Once again, the two attackers were dubbed lone wolves by officials and the media. So, too, were Dzhokhar and Tamerlan Tsarnaev, the brothers who bombed the Boston Marathon in 2013. The same label has been applied to more recent attackers, including the men who drove vehicles into crowds in Nice and Berlin last year, and in London last week.

The Boston Marathon bombing carried out by Dzhokhar and Tamerlan Tsarnaev in 2013. Photograph: Dan Lampariello/Reuters

One problem facing security services, politicians and the media is that instant analysis is difficult. It takes months to unravel the truth behind a major, or even minor, terrorist operation. The demand for information from a frightened public, relayed by a febrile news media, is intense. People seek quick, familiar explanations.

Yet many of the attacks that have been confidently identified as lone-wolf operations have turned out to be nothing of the sort. Very often, terrorists who are initially labelled lone wolves have active links to established groups such as Islamic State and al-Qaida. Merah, for instance, had recently travelled to Pakistan and been trained, albeit cursorily, by a jihadi group allied with al-Qaida. Merah was also linked to a network of local extremists, some of whom went on to carry out attacks in Libya, Iraq and Syria. Bernard Cazeneuve, who was then the French interior minister, later agreed that calling Merah a lone wolf had been a mistake.

If, in cases such as Merah’s, the label of lone wolf is plainly incorrect, there are other, more subtle cases where it is still highly misleading. Another category of attackers, for instance, are those who strike alone, without guidance from formal terrorist organisations, but who have had face-to-face contact with loose networks of people who share extremist beliefs. The Exeter restaurant bomber, dismissed as an unstable loner, was actually in contact with a circle of local militant sympathisers before his attack. (They have never been identified.) The killers of Lee Rigby had been on the periphery of extremist movements in the UK for years, appearing at rallies of groups such as the now proscribed al-Muhajiroun, run by Anjem Choudary, a preacher convicted of terrorist offences in 2016 who is reported to have “inspired” up to 100 British militants.

A third category is made up of attackers who strike alone, after having had close contact online, rather than face-to-face, with extremist groups or individuals. A wave of attackers in France last year were, at first, wrongly seen as lone wolves “inspired” rather than commissioned by Isis. It soon emerged that the individuals involved, such as the two teenagers who killed a priest in front of his congregation in Normandy, had been recruited online by a senior Isis militant. In three recent incidents in Germany, all initially dubbed “lone-wolf attacks”, Isis militants actually used messaging apps to direct recruits in the minutes before they attacked. “Pray that I become a martyr,” one attacker who assaulted passengers on a train with an axe and knife told his interlocutor. “I am now waiting for the train.” Then: “I am starting now.”

Very often, what appear to be the clearest lone-wolf cases are revealed to be more complex. Even the strange case of the man who killed 86 people with a truck in Nice in July 2016 – with his background of alcohol abuse, casual sex and lack of apparent interest in religion or radical ideologies – may not be a true lone wolf. Eight of his friends and associates have been arrested and police are investigating his potential links to a broader network.

What research does show is that we may be more likely to find lone wolves among far-right extremists than among their jihadi counterparts. Though even in those cases, the term still conceals more than it reveals.

The murder of the Labour MP Jo Cox, days before the EU referendum, by a 52-year-old called Thomas Mair, was the culmination of a steady intensification of rightwing extremist violence in the UK that had been largely ignored by the media and policymakers. According to police, on several occasions attackers came close to causing more casualties in a single operation than jihadis had ever inflicted. The closest call came in 2013 when Pavlo Lapshyn, a Ukrainian PhD student in the UK, planted a bomb outside a mosque in Tipton, West Midlands. Fortunately, Lapshyn had got his timings wrong and the congregation had yet to gather when the device exploded. Embedded in the trunks of trees surrounding the building, police found some of the 100 nails Lapshyn had added to the bomb to make it more lethal.

Lapshyn was a recent arrival, but the UK has produced numerous homegrown far-right extremists in recent years. One was Martyn Gilleard, who was sentenced to 16 years for terrorism and child pornography offences in 2008. When officers searched his home in Goole, East Yorkshire, they found knives, guns, machetes, swords, axes, bullets and four nail bombs. A year later, Ian Davison became the first Briton convicted under new legislation dealing with the production of chemical weapons. Davison was sentenced to 10 years in prison for manufacturing ricin, a lethal biological poison made from castor beans. His aim, the court heard, was “the creation of an international Aryan group who would establish white supremacy in white countries”.

Lapshyn, Gilleard and Davison were each described as lone wolves by police officers, judges and journalists. Yet even a cursory survey of their individual stories undermines this description. Gilleard was the local branch organiser of a neo-Nazi group, while Davison founded the Aryan Strike Force, the members of which went on training days in Cumbria where they flew swastika flags.

Thomas Mair, who was also widely described as a lone wolf, does appear to have been an authentic loner, yet his involvement in rightwing extremism goes back decades. In May 1999, the National Alliance, a white-supremacist organisation in West Virginia, sent Mair manuals that explained how to construct bombs and assemble homemade pistols. Seventeen years later, when police raided his home after the murder, they found stacks of far-right literature, Nazi memorabilia and cuttings on Anders Breivik, the Norwegian terrorist who murdered 77 people in 2011.

A government building in Oslo bombed by Anders Breivik, July 2011. Photograph: Scanpix/Reuters

Even Breivik himself, who has been called “the deadliest lone-wolf attacker in [Europe’s] history”, was not a true lone wolf. Prior to his arrest, Breivik had long been in contact with far-right organisations. A member of the English Defence League told the Telegraph that Breivik had been in regular contact with its members via Facebook, and had a “hypnotic” effect on them.

If such facts fit awkwardly with the commonly accepted idea of the lone wolf, they fit better with academic research that has shown that very few violent extremists who launch attacks act without letting others know what they may be planning. In the late 1990s, after realising that in most instances school shooters would reveal their intentions to close associates before acting, the FBI began to talk about “leakage” of critical information. By 2009, it had extended the concept to terrorist attacks, and found that “leakage” was identifiable in more than four-fifths of 80 ongoing cases they were investigating. Of these leaks, 95% were to friends, close relatives or authority figures.

More recent research has underlined the garrulous nature of violent extremists. In 2013, researchers at Pennsylvania State University examined the interactions of 119 lone-wolf terrorists from a wide variety of ideological and faith backgrounds. The academics found that, even though the terrorists launched their attacks alone, in 79% of cases others were aware of the individual’s extremist ideology, and in 64% of cases family and friends were aware of the individual’s intent to engage in terrorism-related activity. Another more recent survey found that 45% of Islamic militant cases talked about their inspiration and possible actions with family and friends. While only 18% of rightwing counterparts did, they were much more likely to “post telling indicators” on the internet.

Few extremists remain without human contact, even if that contact is only found online. Last year, a team at the University of Miami studied 196 pro-Isis groups operating on social media during the first eight months of 2015. These groups had a combined total of more than 100,000 members. Researchers also found that pro-Isis individuals who were not in a group – who they dubbed “online ‘lone wolf’ actors” – had either recently been in a group or soon went on to join one.

There is a much broader point here. Any terrorist, however socially or physically isolated, is still part of a broader movement. The lengthy manifesto that Breivik published hours before he started killing drew heavily on a dense ecosystem of far-right blogs, websites and writers. His ideas on strategy drew directly from the “leaderless resistance” school of Beam and others. Even his musical tastes were shaped by his ideology. He was, for example, a fan of Saga, a Swedish white nationalist singer, whose lyrics include lines about “The greatest race to ever walk the earth … betrayed”.

It is little different for Islamic militants, who emerge as often from the fertile and desperately depressing world of online jihadism – with its execution videos, mythologised history, selectively read religious texts and Photoshopped pictures of alleged atrocities against Muslims – as from organised groups that meet in person.

Terrorist violence of all kinds is directed against specific targets. These are not selected at random, nor are such attacks the products of a fevered and irrational imagination operating in complete isolation.

Just like the old idea that a single organisation, al-Qaida, was responsible for all Islamic terrorism, the rise of the lone-wolf paradigm is convenient for many different actors. First, there are the terrorists themselves. The notion that we are surrounded by anonymous lone wolves poised to strike at any time inspires fear and polarises the public. What could be more alarming and divisive than the idea that someone nearby – perhaps a colleague, a neighbour, a fellow commuter – might secretly be a lone wolf?

Terrorist groups also need to work constantly to motivate their activists. The idea of “lone wolves” invests murderous attackers with a special status, even glamour. Breivik, for instance, congratulated himself in his manifesto for becoming a “self-financed and self-indoctrinated single individual attack cell”. Al-Qaida propaganda lauded the 2009 Fort Hood shooter as “a pioneer, a trailblazer, and a role model who has opened a door, lit a path, and shown the way forward for every Muslim who finds himself among the unbelievers”.

The lone-wolf paradigm can be helpful for security services and policymakers, too, since the public assumes that lone wolves are difficult to catch. This would be justified if the popular image of the lone wolf as a solitary actor was accurate. But, as we have seen, this is rarely the case.

Westminster terrorist Khalid Masood. Photograph: Reuters

The reason that many attacks are not prevented is not that it was impossible to anticipate the perpetrator’s actions, but that someone screwed up. German law enforcement agencies were aware that the man who killed 12 in Berlin before Christmas was an Isis sympathiser and had talked about committing an attack. Repeated attempts to deport him had failed, stymied by bureaucracy, lack of resources and poor case preparation. In Britain, a parliamentary report into the killing of Lee Rigby identified a number of serious delays and potential missed opportunities to prevent it. Khalid Masood, the man who attacked Westminster last week, was identified in 2010 as a potential extremist by MI5.

But perhaps the most disquieting explanation for the ubiquity of the term is that it tells us something we want to believe. Yes, the terrorist threat now appears much more amorphous and unpredictable than ever before. At the same time, the idea that terrorists operate alone allows us to break the link between an act of violence and its ideological hinterland. It implies that the responsibility for an individual’s violent extremism lies solely with the individual themselves.

The truth is much more disturbing. Terrorism is not something you do by yourself; it is highly social. People become interested in ideas, ideologies and activities, even appalling ones, because other people are interested in them.

In his eulogy at the funeral of those killed in the mosque shooting in Quebec, the imam Hassan Guillet spoke of the alleged shooter. Over previous days details had emerged of the young man’s life. “Alexandre [Bissonnette], before being a killer, was a victim himself,” said Hassan. “Before he planted his bullets in the heads of his victims, somebody planted ideas more dangerous than the bullets in his head. Unfortunately, day after day, week after week, month after month, certain politicians, and certain reporters and certain media, poisoned our atmosphere.

“We did not want to see it …. because we love this country, we love this society. We wanted our society to be perfect. We were like some parents who, when a neighbour tells them their kid is smoking or taking drugs, answers: ‘I don’t believe it, my child is perfect.’ We don’t want to see it. And we didn’t see it, and it happened.”

“But,” he went on to say, “there was a certain malaise. Let us face it. Alexandre Bissonnette didn’t emerge from a vacuum.”

Friday, 12 December 2014

MCC: the greatest anachronism of English cricket

by Maxie Allen in The Full Toss

There’s been an outbreak of egg-and-bacon-striped handbags at dawn. Sir John Major’s resignation from the Main Committee of the MCC, in a row about redevelopment plans at Lord’s, has triggered a furious war of words in St John’s Wood.

Put simply, the former prime minister took umbrage at the process by which the MCC decided to downgrade the project. He then claimed that Phillip Hodson, the club’s president, publicly misrepresented his reasons for resigning, and in response Sir John wrote an open letter to set the record straight, in scathing terms.

The saga has been all over the cricket press, and even beyond, in recent weeks – underlining the anomalously prominent role the MCC continues to maintain within the eccentric geography of English cricket.

To this observer it’s both puzzling and slightly troubling that the people who run cricket, and the mainstream media who report on it, remain so reverentially fascinated by an organisation whose function has so little resonance for the vast majority of people who follow the game in this country.

Virtually anything the Marylebone Cricket Club do or say is news – and more importantly, cricket’s opinion-formers and decision-makers attach great weight to its actions and utterances. Whenever Jonathan Agnew interviews an MCC bigwig during the TMS tea-break – which is often – you’d think from the style and manner of the questioning that he had the prime minister or Archbishop of Canterbury in the chair.

Too many people at the apex of cricket’s hierarchy buy unthinkingly into the mythology of the MCC. Their belief in it borders on the religious. A divine provenance and mystique are ascribed to everything symbolised by the red and yellow iconography. The club’s leaders are regarded as high priests, their significance beyond question.

The reality is rather more prosaic. The MCC is a private club, and nothing more. It exists to cater for the wishes of its 18,000 members, which are twofold: to run Lord’s to their comfort and satisfaction, and to promote their influence within cricket both in England and abroad. The MCC retains several powerful roles in the game – of which more in a moment.

You can’t just walk up to the Grace Gates and join the MCC. Membership is an exclusive business. To be accepted, you must secure the endorsement of four existing members, of whom one must hold a senior rank, and then wait for twenty years. Only four hundred new members are admitted each year. But if you’re a VIP, or have influential friends in the right places, you can usually contrive to jump the queue.

Much of the MCC’s clout derives from its ownership of Lord’s, which the club incessantly proclaims to be ‘the home of cricket’. This assertion involves a distorting simplification of cricket’s early history. Lord’s was certainly one of the most important grounds in the development of cricket from rural pastime to national sport, but far from the only one. The vast majority of pioneering cricketers never played there – partly because only some of them were based in London.

Neither the first test match in England, nor the first test match of all, was played at Lord’s. The latter distinction belongs to the MCG, which to my mind entitles it to an equal claim for history’s bragging rights.

The obsession with the status of Lord’s is rather unfair to England’s other long-established test grounds, all of which have a rich heritage. If you were to list the most epic events of our nation’s test and county history, you’d find that only a few of them took place at Lord’s. Headingley provided the stage for the 1981 miracle, for Bradman in 1930, and many others besides. The Oval is where test series usually reach their climax. In 2005, Edgbaston witnessed the greatest match of all time.

Lord’s is only relevant if you are within easy reach of London. And personally, as a spectator, the place leaves me cold. I just don’t feel the magic. Lord’s is too corporate, too lacking in atmosphere, and too full of people who are there purely for the social scene, not to watch the cricket.

Nevertheless, Lord’s gives the MCC influence, which is manifested in two main ways. Firstly, the club has a permanent seat on the fourteen-member ECB Board – the most senior decision-making tier of English cricket. In other words, a private club – both unaccountable to, and exclusive from, the general cricketing public – has a direct say in the way our game is run. No other organisation of its kind enjoys this privilege. The MCC is not elected to this position – neither you nor I have any say in the matter – which it is free to use in furtherance of its own interests.

It was widely reported that, in April 2007, MCC’s then chief executive Keith Bradshaw played a leading part in the removal of Duncan Fletcher as England coach. If so, why? What business was it of his?

The MCC is cricket’s version of a hereditary peer – less an accident of history than a convenient political arrangement between the elite powerbrokers of the English game. The reasoning goes like this: because once upon a time the MCC used to run everything, well, it wouldn’t really do to keep them out completely, would it? Especially as they’re such damn good chaps.

Why should the MCC alone enjoy so special a status, and no other of the thousands of cricket clubs in England? What’s so virtuous about it, compared to the club you or I belong to – which is almost certainly easier to join and more accessible?

What’s even more eccentric about the MCC’s place on the ECB board is that the entire county game only has three representatives. In the ECB’s reckoning, therefore, one private cricket club (which competes in no first-class competitions) deserves to have one-third of the power allocated to all eighteen counties and their supporters in their entirety.

The second stratum of MCC’s power lies in its role as custodian of the Laws of Cricket. The club decides – for the whole world – how the game shall be played, and what the rules are. From Dhaka to Bridgetown, every cricketer across the globe must conform to a code laid down in St John’s Wood, and – sorry to keep repeating this point, but it’s integral – by a private organisation in which they have no say.

Admittedly, the ICC is now also involved in any revisions to the Laws, but the MCC have the final say, and own the copyright.

You could make a strong argument for the wisdom of delegating such a sensitive matter as cricket’s Laws to – in the form of MCC – a disinterested body with no sectional interests but the wherewithal to muster huge expertise. That’s far better, the argument goes, than leaving it to the squabbling politicians of the ICC, who will act only in the selfish interests of their own nations.

But that said, the arrangement still feels peculiar, in an uncomfortable way. The ICC, and its constituent national boards, may be deeply flawed, but they are at least notionally accountable, and in some senses democratic. You could join a county club tomorrow and in theory rise up the ranks to ECB chairman. The ICC and the boards could be reformed without changing the concept underpinning their existence. None of this is true of the MCC.

Why does this one private club – and no others – enjoy such remarkable privileges? The answer lies in an interpretation of English cricket history which although blindly accepted by the establishment – and fed to us, almost as propaganda – is rather misleading.

History, as they often say, is written by the winners, and this is certainly true in cricket. From the early nineteenth century the MCC used its power, wealth and connections to take control of the game of cricket – first in England, and then the world. No one asked the club to do this, nor did they consult the public or hold a ballot. They simply, and unilaterally, assumed power, in the manner of an autocrat, and inspired by a similar sense of entitlement to that which built the British empire.

This private club, with its exclusive membership, ran test and domestic English cricket, almost on its own, until 1968. Then the Test and County Cricket Board was formed, in which the MCC maintained a hefty role until the creation of the ECB in 1997. The England team continued to play in MCC colours when overseas until the 1990s. Internationally, the MCC oversaw the ICC until as recently as 1993.

All through these near two centuries of quasi-monarchical rule, the MCC believed it was their divine right to govern. They knew best. Their role was entirely self-appointed, with the collusion of England’s social and political elite. At no stage did they claim to represent the general cricketing public, nor allow the public to participate in their processes.

The considerable authority the MCC still enjoys today derives not from its inherent virtues, or any popular mandate, but from its history. Because it has always had a leadership role, it will always be entitled to one.

The other bulwark of the MCC’s authority is predicated on the widespread assumption that the club virtually invented cricket, single-handedly. It was certainly one of the most influential clubs in the evolution of the game, and its codification in Victorian times, but far from the only one, and by no means the first. Neither did the MCC pioneer cricket’s Laws – their own first version was the fifth in all.

Hundreds of cricket clubs, across huge swathes of England, all contributed to the development of cricket into its modern form. The cast of cricket’s history is varied and complex – from the gambling aristocrats, to the wily promoters, the public schools, and the nascent county sides who invented the professional game as we know it now. Tens of thousands of individuals were involved, almost all of whom never went to Lord’s or had anything to thank the MCC for.

And that’s before you even start considering the countless Indians, West Indians, South Africans and especially Australians who all helped shape the dynamics, traditions and culture of our sport.

And yet it was the egg-and-bacon wearers who took all the credit. They appointed themselves leaders, and succeeded in doing so – due to the wealth, power and social connections of their membership. And because the winners write the history, the history says that MCC gave us cricket. It is this mythology which underpins their retention of power in the twenty-first century.

Just to get things into perspective – I’m not suggesting we gather outside the Grace Gates at dawn, brandishing flaming torches. This is not an exhortation to storm the MCC’s ramparts and tear down the rose-red pavilion brick by brick until we secure the overthrow of these villainous tyrants.

In many ways the MCC is a force for good. It funds coaching and access schemes, gives aspiring young players opportunities on the ground staff, promotes the Spirit Of Cricket initiative, organises tours to remote cricketing nations, and engages in many charitable enterprises.

Their members may wear hideous ties and blazers, and usually conform to their snobbish and fusty stereotype, but no harm comes of that. As a private club, the MCC can act as it pleases, and do whatever it wants with Lord’s, which is its property.

But the MCC should have no say or involvement whatsoever in the running of English cricket. The club’s powers were never justifiable in the first place, and certainly not in the year 2012. The club must lose its place on the ECB Board. That is beyond argument.

As for the Laws, the MCC should bring their expertise to bear as consultants. But surely now the ultimate decisions should rest with the ICC.

Unpalatable though it may seem to hand over something so precious to so Byzantine an organisation, it is no longer fair or logical to expect every cricketer from Mumbai to Harare to dance to a St John’s Wood tune. This is an age in which Ireland and Afghanistan are playing serious cricket, and even China are laying the foundations. The process must be transparent, global, and participatory.

Cricket is both the beneficiary and victim of its history. No other game has a richer or more fascinating heritage, and ours has bequeathed a value system, international context, cherished rivalries, and an endless source of intrigue and delight.

But history is to be selected from with care – you maintain the traditions which still have value and relevance, and update or discard those which don’t. The role of the MCC is the apotheosis of this principle within cricket. For this private and morally remote club to still wield power in 2012 is as anachronistic as two stumps, a curved bat, and underarm bowling.

Wednesday, 23 October 2013

Sir John Major gets his carefully-crafted revenge on the bastards

Tory former prime minister's speech was a nostalgic trip down memory lane, where he mugged the Eurosceptics

John Major, our former prime minister, was in reflective mood at a lunch in parliament. Asked about his famous description of Eurosceptics as "bastards", he remarked ruefully: "What I said was unforgivable." Pause for contrition. "My only excuse – is that it was true." Pause for loud laughter. Behind that mild demeanour, he is a good hater.

The event was steeped in nostalgia. Sir John may have hair that is more silvery than ever, and his sky-blue tie shines like the sun on a tropical sea at daybreak, but he still brings a powerful whiff of the past. Many of us can recall those days of the early 1990s. Right Said Fred topped the charts with Deeply Dippy, still on all our lips. The top TV star was Mr Blobby. The Ford Mondeo hit the showrooms, bringing gladness and stereo tape decks to travelling salesmen. Unemployment nudged 3 million.

Sir John dropped poison pellets into everyone's wine glass. But for a while he spoke only in lapidary epigrams. "The music hall star Dan Leno said 'I earn so much more than the prime minister; on the other hand I do so much less harm'."

"Tories only ever plot against themselves. Labour are much more egalitarian – they plot against everyone."

"The threat of a federal Europe is now deader than Jacob Marley."

"David Cameron's government is not Conservative enough. Of course it isn't; it's a coalition, stupid!"

Sometimes the saws and proverbs crashed into each other: "If we Tories navel-gaze and only pander to our comfort zone, we will never get elected."

And in a riposte to the Tebbit wing of the Tory party (now only represented by another old enemy, Norman Tebbit): "There is no point in telling people to get on their bikes if there is nowhere to live when they get there."

He was worried about the "dignified poor and the semi-poor", who, he implied, were ignored by the government. Iain Duncan Smith, leader of the bastards outside the cabinet during the Major government, was dispatched. "IDS is trying to reform benefits. But unless he is lucky or a genius, which last time I looked was not true, he may get things wrong." Oof.

"If he listens only to bean-counters and cheerleaders only concerned with abuse of the system, he will fail." Ouch!

"Governments should exist to help people, not institutions."

But he had kind words for David or Ed, "or whichever Miliband it is". Ed's proposal for an energy price freeze showed his heart was in the right place, even if "his head has gone walkabout".

He predicted a cold, cold winter. "It is not acceptable for people to have to make a choice between eating and heating." His proposal, a windfall tax, was rejected by No 10 within half an hour of Sir John sitting down.

Such is 24-hour news. Or as he put it: "I was never very good at soundbites – if I had been, I might have felt the hand of history on my shoulder." And having laid waste to all about him, he left with a light smile playing about his glistening tie.

-------

Steve Richards in The Independent

Former Prime Ministers tend to have very little impact on the eras that follow them. John Major is a dramatic exception. When Major spoke in Westminster earlier this week he offered the vividly accessible insights of a genuine Conservative moderniser. In doing so he exposed the narrow limits of the self-proclaimed modernising project instigated by David Cameron and George Osborne. Major’s speech was an event of considerable significance, presenting a subtle and formidable challenge to the current leadership. Margaret Thatcher never achieved such a feat when she sought – far more actively – to undermine Major after she had suddenly become a former Prime Minister.

The former Prime Minister’s call for a one-off tax on the energy companies was eye-catching, but his broader argument was far more powerful. Without qualification, Major insisted that governments had a duty to intervene in failing markets. He made this statement as explicitly as Ed Miliband has done since the latter became Labour’s leader. The former Prime Minister disagrees with Miliband’s solution – a temporary price freeze – but he is fully behind the proposition that a government has a duty to act. He stressed that he was making the case as a committed Conservative.

Yet the proposition offends the ideological souls of those who currently lead the Conservative party. Today’s Tory leadership, the political children of Margaret Thatcher, is purer than the Lady herself in its disdain for state intervention. In developing his case, Major pointed the way towards a modern Tory party rather than one that looks to the 1980s for guidance. Yes, Tories should intervene in failing markets, but not in the way Labour suggests. He proposes a one-off tax on energy companies. At least he has a policy. In contrast, the generation of self-proclaimed Tory modernisers is paralysed, caught between its attachment to unfettered free markets and the practical reality that powerless consumers are being taken for a ride by a market that does not work.

Major went much further, outlining other areas in which the Conservatives could widen their appeal. They included the case for a subtler approach to welfare reform. He went as far as to suggest that some of those protesting at the injustice of current measures might have a point. He was powerful too on the impoverished squalor of some people’s lives, and on their sense of helplessness – suggesting that housing should be a central concern for a genuinely compassionate Conservative party. The language was vivid, most potently when Major outlined the choice faced by some in deciding whether to eat or turn the heating on. Every word was placed in the wider context of the need for the Conservatives to win back support in the north of England and Scotland.