'People will forgive you for being wrong, but they will never forgive you for being right - especially if events prove you right while proving them wrong.' Thomas Sowell

Showing posts with label myth.

Tuesday, 26 August 2025

Saturday, 13 April 2024

The myth of the second chance

Janan Ganesh in The FT

In the novels of Ian McEwan, a pattern recurs. The main character makes a mistake — just one — which then hangs over them forever. A girl misidentifies a rapist, and in doing so shatters three lives, including her own (Atonement). A man exchanges a lingering glance with another, who becomes a tenacious stalker (Enduring Love). A just-married couple fail to have sex, or rather have it badly, and aren’t themselves again, either as individuals or as a pair (On Chesil Beach). Often, the mistake reverberates over much of the 20th century.

This plot trick is said to be unbecoming of a serious artist. McEwan is accused of an obsession with incident that isn’t true to the gradualism and untidiness of real life. Whereas Proust luxuriates in the slow accretion of human experience, McEwan homes in on the singular event. It is too neat. It is written to be filmed.

Well, I am old enough now to observe peers in their middle years, including some disappointed and hurt ones. I suggest it is McEwan who gets life right. The surprise of middle age, and the terror of it, is how much of a person’s fate can boil down to one misjudgement.

Such as? What in particular should the young know? If you marry badly — or marry at all, when it isn’t for you — don’t assume the damage is recoverable. If you make the wrong career choice, and realise it as early as age 30, don’t count on a way back. Even the decision to go down a science track at school, when the humanities turn out to be your bag, can mangle a life. None of these errors need consign a person to eternal and acute distress. But life is path-dependent: each mistake narrows the next round of choices. A big one, or just an early one, can foreclose all hope of the life you wanted.

There should be more candour about this from the people who are looked to (and paid) for guidance. The rise of the advice-industrial complex — the self-help podcasts, the chief executive coaches, the men’s conferences — has been mostly benign. But much of the content is American, and reflects the optimism of that country. The notion of an unsalvageable mistake is almost transgressive in the land of second chances.

Also, for obvious commercial reasons, the audience has to be told that all is not lost, that life is still theirs to shape deep into adulthood. No one is signing up to the Ganesh Motivational Bootcamp (“You had kids without thinking it through? It’s over, son”) however radiant the speaker.

A mistake, in the modern telling, is not a mistake but a chance to “grow”, to form “resilience”. It is a mere bridge towards ultimate success. And in most cases, quite so. But a person’s life at 40 isn’t the sum of most decisions. It is skewed by a disproportionately important few: sometimes professional, often romantic. Get these wrong, and the scope for retrieving the situation is, if not zero, then overblown by a culture that struggles to impart bad news.

Martin Amis, that peer of McEwan’s, once attempted an explanation of the vast international appeal of football. “It’s the only sport which is usually decided by one goal,” he theorised, “so the pressure on the moment is more intense in football than any other sport.” His point is borne out across Europe most weekends. A team hogs the ball, creates superior chances, wins more duels — and loses the game to one error. It is, as the statisticians say, a “stupid” sport.

But it is also the one that most approximates life outside the stadium. I am now roughly midway through that other low-scoring game. Looking around at the distress and regret of some peers, I feel sympathy, but also amazement at the casualness with which people entered into big life choices. Perhaps this is what happens when ideas of redemption and resurrection — the ultimate second chance — are encoded into the historic faith of a culture. It takes a more profane cast of mind to see through it.

Sunday, 7 April 2024

Never meet your hero!

Nadeem F Paracha in Dawn

The German writer Johann Wolfgang von Goethe once quipped, “Blessed is the nation that doesn’t need heroes.” As if to expand upon Goethe’s words, the British philosopher Herbert Spencer wrote, “Hero-worship is strongest where there is least regard for human freedom.”

There is every likelihood that Goethe was viewing societies as collectives in which self-interest was the primary motivation, but where the creation and worship of ‘heroes’ were acts that made people feel virtuous.

Heroes can’t become heroes without an audience. A segment of society exhibits an individual and describes his or her actions or traits as ‘heroic’. If these receive enough applause, a hero is created. But then no one is really interested in knowing the actual person who has been turned into a hero. Only the mythologised version is put on view.

The mythologising is done to quench a yearning in society — a yearning that cannot be fulfilled because it might be too impractical, utopian, irrational and, therefore, against self-interest. So, the mythologised individual becomes an alter ego of a society conscious of its inherent flaws. Great effort is thus invested in hiding the actual from the gaze of society, so that only the mythologised can be viewed.

One often comes across videos on social media of ordinary people doing virtuous deeds, such as helping an old person cross a busy road or helping an animal. The helping hands are exhibited as ‘heroes’, even though they might not be aware that they are being filmed.

What if they weren’t filmed? What if they remained unaware of the applause their ‘viral video’ had attracted? Would they stop being helpful without an audience? They certainly wouldn’t be hailed as heroes. They are often exhibited as heroes by those who want to use them to signal their own appreciation of ‘goodness’.

This is a harmless ploy. But since self-interest is rampant in almost every society, this can push some people to mould themselves as heroes. There have been cases in which men and women have actually staged certain ‘heroic’ acts, filmed them, and then put them out for all to view. The purpose is to generate praise and accolades for themselves and, when possible, even monetary gains.

But it is also possible that they truly want to be seen as heroes in an unheroic age, despite displaying forged heroism. Then there are those who are so smitten by the romanticised notions of a ‘heroic age’ that they actually plunge into real-life scenarios to quench their intense yearning to be seen as heroes.

Take, for example, a person who voluntarily sticks his neck out for a cause that may lead to his arrest. He knows this. But he also knows that many on social and electronic media will begin to portray him as a hero. The applauders, in turn, often do this to signal their own disposition towards a ‘heroic’ cause.

We apparently live in an unheroic age — an age that philosophers such as Søren Kierkegaard, Friedrich Nietzsche or, for that matter, Muhammad Iqbal, detested. Each had their own understanding of a bygone heroic age.

To Nietzsche, the heroic age existed in some pre-modern period in history, when the Germanic people were fearless. To Iqbal, the heroic age was when early Muslims were powered by an unadulterated faith and passion to conquer the world. There are multiple periods in time that are referred to as ‘heroic ages’, depending on one’s favourite ideology or professed faith.

The yearning for heroes, and the penchant for creating them to be revered so that societies can feel better about themselves, is as old as the first major civilisations, which began to appear thousands of years ago. So when these philosophers spoke of heroic ages, what period of history were they reminiscing about? The Stone Age?

Humans are naturally pragmatic. From hunter-gatherers, we became scavenger-survivalists. The image may be off-putting but the latter actually requires one to be more rational, clever and pragmatic. This is how we have survived and progressed.

That ancient yearning for a heroic age has remained, though: a yearning for an age that never was, an age that was always an imagined one. That’s why we mythologise even known histories, because the actual can be awkward to deal with. But the actual can still be uncovered.

America’s ‘founding fathers’ were revered for over two centuries as untainted heroes, until some historians decided to demystify them by exploring their lives outside their mythologised imaginings. Many of these heroes turned out to be slave-owners and not very pleasant people.

Mahatma Gandhi, revered as a symbol of tolerance, turned out also to be a man who disliked black South Africans. Pakistan’s founder, MA Jinnah, is mythologised as a man who supposedly strove to create an ‘Islamic state’, yet the fact is that he was a declared liberal who loved his wine. Martin Luther King Jr, the revered civil rights activist, was also a prolific philanderer.

When freed from mythology, the heroes become human — still important men and women, but with various flaws. This is when they become real and more relatable. They become ‘anti-heroes.’

But there is always an urgency in societies to keep the flaws hidden. The flaws can damage the emotions that are invested in revering ‘heroes’, both dead and living. The act of revering provides an opportunity to feel bigger than a scavenger-survivalist, even if this requires forged memories and heavily mythologised men and women.

Therefore, hero-worship can also make one blurt out even the most absurd things to keep a popular but distorted memory of a perceived hero intact. For example, this is exactly what one populist former Pakistani prime minister did when he declared that the terrorist Osama bin Laden was a martyr.

By doing this, the former PM was signalling his own ‘heroism’ as well — that of a proud fool who saw greatness in a mass murderer to signal his own ‘greatness’ in an unheroic age.

The French philosopher Voltaire viewed this tendency as a chain that one has fallen in love with. Voltaire wrote, “It is difficult to free fools from the chains they revere.”

Wednesday, 29 November 2023

Monday, 7 June 2021

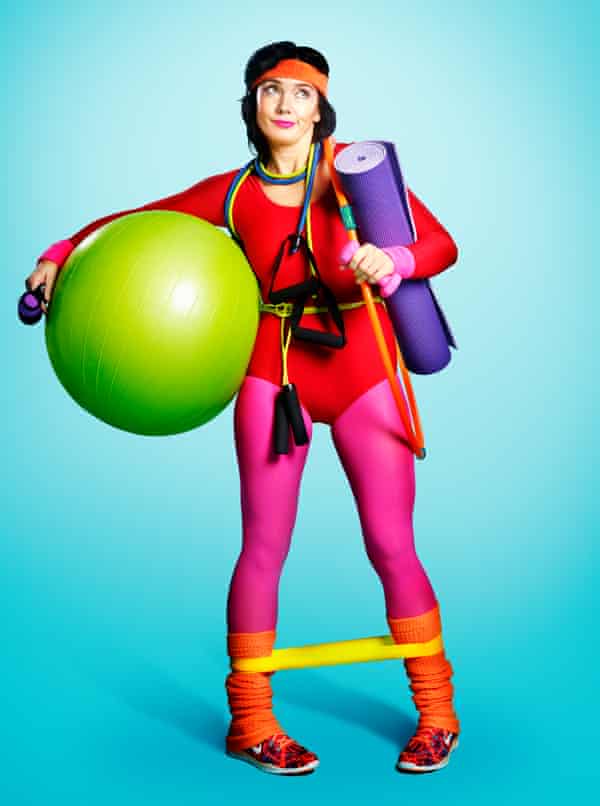

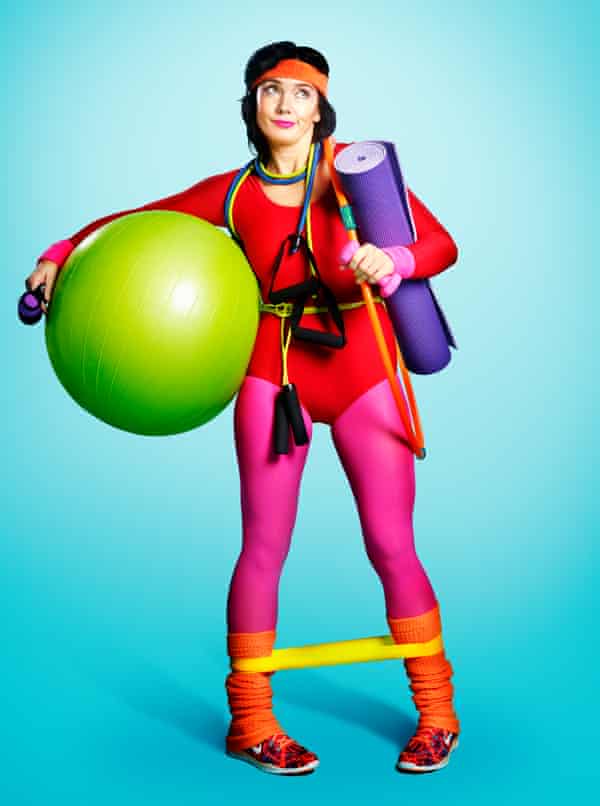

Just don’t do it: 10 exercise myths

We all believe we should exercise more. So why is it so hard to keep it up? Daniel E Lieberman, Harvard professor of evolutionary biology, explodes the most common and unhelpful workout myths.

Daniel E Lieberman in The Guardian

Yesterday at an outdoor coffee shop, I met my old friend James in person for the first time since the pandemic began. Over the past year on Zoom, he looked just fine, but in 3D there was no hiding how much weight he’d gained. As we sat down with our cappuccinos, I didn’t say a thing, but the first words out of his mouth were: “Yes, yes, I’m now 20lb too heavy and in pathetic shape. I need to diet and exercise, but I don’t want to talk about it!”

If you feel like James, you are in good company. With the end of the Covid-19 pandemic now plausibly in sight, 70% of Britons say they hope to eat a healthier diet, lose weight and exercise more. But how? Every year, millions of people vow to be more physically active, but the vast majority of these resolutions fail. We all know what happens. After a week or two of sticking to a new exercise regime we gradually slip back into old habits and then feel bad about ourselves.

Clearly, we need a new approach because the most common ways we promote exercise – medicalising and commercialising it – aren’t widely effective. The proof is in the pudding: most adults in high-income countries, such as the UK and US, don’t get the minimum of 150 minutes per week of physical activity recommended by most health professionals. Everyone knows exercise is healthy, but prescribing and selling it rarely works.

I think we can do better by looking beyond the weird world in which we live to consider how our ancestors, as well as people in other cultures, manage to be physically active. This kind of evolutionary anthropological perspective reveals 10 unhelpful myths about exercise. Rejecting them won’t suddenly transform you into an Olympic athlete, but it might help you turn over a new leaf without feeling bad about yourself.

Myth 1: It’s normal to exercise

Whenever you move to do anything, you’re engaging in physical activity. In contrast, exercise is voluntary physical activity undertaken for the sake of fitness. You may think exercise is normal, but it’s a very modern behaviour. Instead, for millions of years, humans were physically active for only two reasons: when it was necessary or rewarding. Necessary physical activities included getting food and doing other things to survive. Rewarding activities included playing, dancing or training to have fun or to develop skills. But no one in the stone age ever went for a five-mile jog to stave off decrepitude, or lifted weights whose sole purpose was to be lifted.

Myth 2: Avoiding exertion means you are lazy

Whenever I see an escalator next to a stairway, a little voice in my brain says, “Take the escalator.” Am I lazy? Although escalators didn’t exist in bygone days, that instinct is totally normal because physical activity costs calories that until recently were always in short supply (and still are for many people). When food is limited, every calorie spent on physical activity is a calorie not spent on other critical functions, such as maintaining our bodies, storing energy and reproducing. Because natural selection ultimately cares only about how many offspring we have, our hunter-gatherer ancestors evolved to avoid needless exertion – exercise – unless it was rewarding. So don’t feel bad about the natural instincts that are still with us. Instead, accept that they are normal and hard to overcome.

Myth 3: Sitting is the new smoking

You’ve probably heard scary statistics that we sit too much and it’s killing us. Yes, too much physical inactivity is unhealthy, but let’s not demonise a behaviour as normal as sitting. People in every culture sit a lot. Even hunter-gatherers who lack furniture sit about 10 hours a day, as much as most westerners. But there are more and less healthy ways to sit. Studies show that people who sit actively by getting up every 10 or 15 minutes wake up their metabolisms and enjoy better long-term health than those who sit inertly for hours on end. In addition, leisure-time sitting is more strongly associated with negative health outcomes than work-time sitting. So if you work all day in a chair, get up regularly, fidget and try not to spend the rest of the day in a chair, too.

Myth 4: Our ancestors were hard-working, strong and fast

A common myth is that people uncontaminated by civilisation are incredible natural-born athletes who are super-strong, super-fast and able to run marathons easily. Not true. Most hunter-gatherers are reasonably fit, but they are only moderately strong and not especially fast. Their lives aren’t easy, but on average they spend only about two to three hours a day doing moderate-to-vigorous physical activity. It is neither normal nor necessary to be ultra-fit and ultra-strong.

Myth 5: You can’t lose weight walking

Until recently, just about every weight-loss programme involved exercise. Lately, however, we keep hearing that we can’t lose weight from exercise because most workouts don’t burn that many calories and just make us hungry, so we eat more. The truth is that you can lose more weight, much faster, through diet than through exercise, especially moderate exercise such as 150 minutes a week of brisk walking. However, longer durations and higher intensities of exercise have been shown to promote gradual weight loss. Regular exercise also helps prevent weight gain or regain after dieting. Every diet benefits from including exercise.

Myth 6: Running will wear out your knees

Many people are scared of running because they’re afraid it will ruin their knees. These worries aren’t totally unfounded, since knees are indeed the most common location of runners’ injuries. But knees and other joints aren’t like a car’s shock absorbers that wear out with overuse. Instead, running, walking and other activities have been shown to keep knees healthy, and numerous high-quality studies show that runners are, if anything, less likely to develop knee osteoarthritis. The key to avoiding knee pain is to learn to run properly and train sensibly (which means not increasing your mileage too much, too quickly).

Myth 7: It’s normal to be less active as we age

After many decades of hard work, don’t you deserve to kick up your heels and take it easy in your golden years? Not so. Despite rumours that our ancestors’ lives were nasty, brutish and short, hunter-gatherers who survive childhood typically live about seven decades, and they continue to work moderately as they age. The truth is that we evolved to be active grandparents, in order to provide food for our children and grandchildren. In turn, staying physically active as we age stimulates myriad repair and maintenance processes that keep our bodies humming. Numerous studies find that exercise becomes more beneficial the older we get.

Myth 8: There is an optimal dose/type of exercise

One consequence of medicalising exercise is that we prescribe it. But how much, and what type? Many medical professionals follow the World Health Organisation’s recommendation of at least 150 minutes a week of moderate or 75 minutes a week of vigorous exercise for adults. In truth, this is an arbitrary prescription, because how much to exercise depends on dozens of factors, such as your fitness, age, injury history and health concerns. Remember this: no matter how unfit you are, even a little exercise is better than none. Just an hour a week (under nine minutes a day) can yield substantial dividends. If you can do more, that’s great, but very high doses yield no additional benefits. It’s also healthy to vary the kinds of exercise you do, and to do regular strength training as you age.

Myth 9: ‘Just do it’ works

Let’s face it, most people don’t like exercise and have to overcome natural tendencies to avoid it. For most of us, telling us to “just do it” doesn’t work any better than telling a smoker or a substance abuser to “just say no!” To promote exercise, we typically prescribe it and sell it, but let’s remember that we evolved to be physically active for only two reasons: it was necessary or rewarding. So let’s find ways to do both: make it necessary and rewarding. Of the many ways to accomplish this, I think the best is to make exercise social. If you agree to meet friends to exercise regularly you’ll be obliged to show up, you’ll have fun and you’ll keep each other going.

Myth 10: Exercise is a magic bullet

Finally, let’s not oversell exercise as medicine. Although we never evolved to exercise, we did evolve to be physically active, just as we evolved to drink water, breathe air and have friends. Thus, it’s the absence of physical activity that makes us more vulnerable to many illnesses, both physical and mental. In the modern, western world we no longer have to be physically active, so we invented exercise, but it is not a magic bullet that guarantees good health. Fortunately, just a little exercise can slow the rate at which you age and substantially reduce your chances of getting a wide range of diseases, especially later in life. It can also be fun, something we’ve all been missing during this dreadful pandemic.

‘For most of us, telling us to “Just do it” doesn’t work’: exercise needs to feel rewarding as well as necessary. Photograph: Dan Saelinger/trunkarchive.com

Myth 3: Sitting is the new smoking

You’ve probably heard scary statistics that we sit too much and it’s killing us. Yes, too much physical inactivity is unhealthy, but let’s not demonise a behaviour as normal as sitting. People in every culture sit a lot. Even hunter-gatherers who lack furniture sit about 10 hours a day, as much as most westerners. But there are more and less healthy ways to sit. Studies show that people who sit actively by getting up every 10 or 15 minutes wake up their metabolisms and enjoy better long-term health than those who sit inertly for hours on end. In addition, leisure-time sitting is more strongly associated with negative health outcomes than work-time sitting. So if you work all day in a chair, get up regularly, fidget and try not to spend the rest of the day in a chair, too.

Myth 4: Our ancestors were hard-working, strong and fast

A common myth is that people uncontaminated by civilisation are incredible natural-born athletes who are super-strong, super-fast and able to run marathons easily. Not true. Most hunter-gatherers are reasonably fit, but they are only moderately strong and not especially fast. Their lives aren’t easy, but on average they spend only about two to three hours a day doing moderate-to-vigorous physical activity. It is neither normal nor necessary to be ultra-fit and ultra-strong.

Myth 5: You can’t lose weight walking

Until recently just about every weight-loss programme involved exercise. Recently, however, we keep hearing that we can’t lose weight from exercise because most workouts don’t burn that many calories and just make us hungry so we eat more. The truth is that you can lose more weight much faster through diet rather than exercise, especially moderate exercise such as 150 minutes a week of brisk walking. However, longer durations and higher intensities of exercise have been shown to promote gradual weight loss. Regular exercise also helps prevent weight gain or regain after diet. Every diet benefits from including exercise.

Myth 6: Running will wear out your knees

Many people are scared of running because they’re afraid it will ruin their knees. These worries aren’t totally unfounded since knees are indeed the most common location of runners’ injuries. But knees and other joints aren’t like a car’s shock absorbers that wear out with overuse. Instead, running, walking and other activities have been shown to keep knees healthy, and numerous high-quality studies show that runners are, if anything, less likely to develop knee osteoarthritis. The strategy to avoiding knee pain is to learn to run properly and train sensibly (which means not increasing your mileage by too much too quickly).

Myth 7: It’s normal to be less active as we age

After many decades of hard work, don’t you deserve to kick up your heels and take it easy in your golden years? Not so. Despite rumours that our ancestors’ lives were nasty, brutish and short, hunter-gatherers who survive childhood typically live about seven decades, and they continue to work moderately as they age. The truth is that we evolved to be active grandparents in order to help provide food for our children and grandchildren. In turn, staying physically active as we age stimulates myriad repair and maintenance processes that keep our bodies humming. Numerous studies find that exercise becomes more beneficial the older we get.

Myth 8: There is an optimal dose/type of exercise

One consequence of medicalising exercise is that we prescribe it. But how much and what type? Many medical professionals follow the World Health Organisation’s recommendation of at least 150 minutes a week of moderate or 75 minutes a week of vigorous exercise for adults. In truth, this is an arbitrary prescription, because how much to exercise depends on dozens of factors, such as your fitness, age, injury history and health concerns. Remember this: no matter how unfit you are, even a little exercise is better than none. Just an hour a week (roughly eight minutes a day) can yield substantial dividends. If you can do more, that’s great, but very high doses yield no additional benefits. It’s also healthy to vary the kinds of exercise you do, and to do regular strength training as you age.

Myth 9: ‘Just do it’ works

Let’s face it, most people don’t like exercise and have to overcome natural tendencies to avoid it. For most of us, being told to “just do it” doesn’t work any better than telling a smoker or a substance abuser to “just say no!” To promote exercise, we typically prescribe it and sell it, but let’s remember that we evolved to be physically active for only two reasons: it was necessary or rewarding. So let’s find ways to do both: make it necessary and rewarding. Of the many ways to accomplish this, I think the best is to make exercise social. If you agree to meet friends to exercise regularly, you’ll be obliged to show up, you’ll have fun and you’ll keep each other going.

Myth 10: Exercise is a magic bullet

Finally, let’s not oversell exercise as medicine. Although we never evolved to exercise, we did evolve to be physically active, just as we evolved to drink water, breathe air and have friends. Thus, it’s the absence of physical activity that makes us more vulnerable to many illnesses, both physical and mental. In the modern, western world we no longer have to be physically active, so we invented exercise, but it is not a magic bullet that guarantees good health. Fortunately, just a little exercise can slow the rate at which you age and substantially reduce your chances of getting a wide range of diseases, especially later in life. It can also be fun – something we’ve all been missing during this dreadful pandemic.

Monday, 25 January 2021

As Joe Biden moves to double the US minimum wage, Australia can't be complacent

Van Badham in The Guardian

When I was writing about minimum wages for the Guardian six years ago, the United States only guaranteed workers US$7.25 an hour as a minimum rate of pay, dropping to a shocking US$2.13 for workers in industries that expect customers to tip (some states have higher minimum wages).

It is now 2021, and yet those federal rates remain exactly the same.

They’ve not moved since 2009. Meaningfully, America’s minimum wages have been in decline since their relative purchasing power peaked in 1968. Meanwhile, America’s cost of living has kept going up; the minimum wage is worth less now than it was half a century ago.

Now, new president Joe Biden’s $1.9tn pandemic relief plan proposes more than doubling the US federal minimum wage, to $15 an hour.

It’s a position advocated both by economists who have studied the comprehensive, positive effects of minimum wage increases across the world and by the American unions of the “Fight for 15” campaign, who’ve been organising minimum-wage workplaces to demand better for their members.

The logic of these arguments has been accepted across the ideological spectrum of the leadership in Biden’s Democratic party. Most of Biden’s rivals for the Democratic nomination – Bernie Sanders, Elizabeth Warren, Kamala Harris, Pete Buttigieg, Amy Klobuchar, Cory Booker and even billionaire capitalist Mike Bloomberg – are on record supporting it, and are in very influential positions to advance it now.

In a 14 January speech, Biden made a simple and powerful case. “No one working 40 hours a week should live below the poverty line,” he said. “If you work for less than $15 an hour and work 40 hours a week, you’re living in poverty.”

And yet opposition to minimum wage increases remains as intense as the resistance that met attempts to introduce a minimum wage as far back as the 1890s. America did not adopt the policy until 1938 – 31 years after Australia’s Harvester Decision established an explicit right for a family of four “to live in frugal comfort” within our wage standards.

As an Australian, it’s easy to feel smug about our framework. The concept is so ingrained within our basic industrial contract we consume it almost mindlessly, in the manner our cousins might gobble a hotdog in the stands of a Sox game.

But in both cases, the appreciation of the taste depends on your level of distraction from the meat. While wage-earning Australians may tut-tut an American framework that presently allows 7 million people to both hold jobs and live in poverty, local agitation persists for the Americanisation of our own established standards.

When I wrote about minimum wages six years ago, it was in the context of Australia’s Liberal government attempting to erode and compromise them. That government is still in power, and that activism from the Liberals and their spruikers is still present. The Australian Chamber of Commerce and Industry campaigned against minimum wage increases last year. So did the federal government – using the economic downturn of coronavirus as a foil to repeat American mythologies about higher wages causing unemployment increases.

They don’t. The “supply side” insistence is that labour is a transactable commodity, and therefore subject to a law of demand in which better-paid jobs equate to fewer employment opportunities … but a neoclassical economic model is not real life.

We know this because some American states and cities have independently increased their minimum wages over the past few years, and data from places like New York and Seattle has reaffirmed what’s been observed in the UK and internationally. There is no discernible impact on employment when the minimum wage is increased. Any impact on prices is also fleeting.

As Biden presses his case, economists, sociologists and even health researchers have years of additional data to back him in. Repeated studies have found that increasing the minimum wage results in communities having less crime, less poverty, less inequality and more economic growth. One study suggested it helped bring down the suicide rate. Conversely, with greater wage suppression comes more smoking, drinking, eating of fatty foods and poorer health outcomes overall.

All that remains is the threadbare counter-argument that improving the income of “burger-flippers” somehow devalues the labour of qualified paramedics, teachers and ironworkers. This is both classist and weak. Removing impediments to collective bargaining and unionisation is actually what enables workers – across all industries – to negotiate an appropriate pay level.

Australians have been living with the comparative benefits of these assumptions for decades, and have been spared the vicissitudes of America’s boom-bust economic cycles in that time.

But after seven years of Liberal government policy actively corroding standards into historic wage stagnation, if Biden’s proposals pass, the American minimum wage will suddenly leapfrog Australia’s, in both real dollar terms and purchasing power.

It’ll be a sad day of realisation for Australia to see the Americans overtake us, while we try to comprehend just why we decided to get left behind.

Friday, 19 June 2020

Why you should go animal-free: 18 arguments for eating meat debunked

Unpalatable as it may be for those wedded to producing and eating meat, the environmental and health evidence for a plant-based diet is clear, writes Damian Carrington in The Guardian

Whether you are concerned about your health, the environment or animal welfare, scientific evidence is piling up that meat-free diets are best. Millions of people in wealthy nations are already cutting back on animal products.

Livestock farmers and meat lovers are, unsurprisingly, fighting back, and it can get confusing. Are avocados really worse than beef? What about bee-massacring almond production?

The coronavirus pandemic has added another ingredient to that mix. The rampant destruction of the natural world is seen as the root cause of diseases leaping into humans and is largely driven by farming expansion. The world’s top biodiversity scientists say even more deadly pandemics will follow unless the ecological devastation is rapidly halted.

Food is also a vital part of our culture, while the affordability of food is an issue of social justice. So there isn’t a single perfect diet. But the evidence is clear: whichever healthy and sustainable diet you choose, it is going to have much less red meat and dairy than today’s standard western diets, and quite possibly none. That’s for two basic reasons.

First, the over-consumption of meat is causing an epidemic of disease, with about $285bn spent every year around the world treating illness caused by eating red meat alone. Second, eating plants is simply a far more efficient use of the planet’s stretched resources than feeding the plants to animals and then eating them. The global livestock herd and the grain it consumes take up 83% of global farmland, but produce just 18% of food calories.

So what about all those arguments in favour of meat-eating and against vegan diets? Let’s start with the big beef about red meat.

Meaty matters

Claim: Grass-fed beef is low carbon

This is true only when compared with intensively reared beef linked to forest destruction. The UK’s National Farmers Union says UK beef has only half the emissions of the world average. But a lot of research shows grass-fed beef uses more land and produces more – or at best similar – emissions, because grain is easier for cows to digest and intensively reared cows live shorter lives. Both factors mean less methane. Either way, the emissions from even the best beef are still many times those from beans and pulses.

There’s more. If all the world’s pasture lands were returned to natural vegetation, it would remove greenhouse gases equivalent to about 8 bn tonnes of carbon dioxide per year from the atmosphere, according to Joseph Poore at Oxford University. That’s about 15% of the world’s total greenhouse gas emissions. Only a small fraction of that pasture land would be needed to grow food crops to replace the lost beef. So overall, if tackling the climate crisis is your thing, then beef is not.

Claim: Cattle are actually neutral for the climate, because methane is a relatively short-lived greenhouse gas

Cattle graze in a pasture against a backdrop of wind turbines, which are part of the 155-turbine Smoky Hill Wind Farm near Vesper, Kansas. Photograph: Charlie Riedel/AP

Methane is a very powerful greenhouse gas and ruminants produce a lot of it. But it only remains in the atmosphere for a relatively short time: half is broken down in nine years. This leads some to argue that maintaining the global cattle herd at current levels – about 1 billion animals – is not heating the planet. The burping cows are just replacing the methane that breaks down as time goes by.

But this is simply “creative accounting”, according to Pete Smith at the University of Aberdeen and Andrew Balmford at the University of Cambridge. We shouldn’t argue that cattle farmers can continue to pollute just because they have done so in the past, they say: “We need to do more than just stand still.” In fact, the short-lived nature of methane actually makes reducing livestock numbers a “particularly attractive target”, given that we desperately need to cut greenhouse gas emissions as soon as possible to avoid the worst impacts of the climate crisis.

In any case, just focusing on methane doesn’t make the rampant deforestation by cattle ranchers in South America go away. Even if you ignore methane completely, says Poore, animal products still produce more CO2 than plants. Even one proponent of the methane claim says: “I agree that intensive livestock farming is unsustainable.”

Claim: In many places the only thing you can grow is grass for cattle and sheep

NFU president, Minette Batters, says: “Sixty-five percent of British land is only suitable for grazing livestock and we have the right climate to produce high-quality red meat and dairy.”

“But if everybody were to make the argument that ‘our pastures are the best and should be used for grazing’, then there would be no way to limit global warming,” says Marco Springmann at the University of Oxford. His work shows that a transition to a predominantly plant-based flexitarian diet would free up both pasture and cropland.

The pasture could instead be used to grow trees that lock up carbon, to provide land for rewilding and the restoration of nature, and to grow bio-energy crops that displace fossil fuels. The crops no longer being fed to animals could instead become food for people, increasing a nation’s self-sufficiency in grains.

The Wild Ken Hill project on the Norfolk coast, which is turning around 1,000 acres of marginal farmland and woodland back over to nature. Photograph: Graeme Lyons/Wild Ken Hill/PA

Claim: Grazing cattle help store carbon from the atmosphere in the soil

This is true. The problem is that even in the very best cases, this carbon storage offsets only 20%-60% of the total emissions from grazing cattle. “In other words, grazing livestock – even in a best-case scenario – are net contributors to the climate problem, as are all livestock,” says Tara Garnett, also at the University of Oxford.

Furthermore, research shows this carbon storage reaches its limit within a few decades, while the methane emissions continue. The stored carbon is also vulnerable: a change in land use or even a drought can see it released again. Proponents of “holistic grazing” to trap carbon are also criticised for unrealistically extrapolating local results to global levels.

Claim: There is much more wildlife in pasture than in monoculture cropland

That is probably true but misses the real point. A huge driver of the global wildlife crisis is the past and continuing destruction of natural habitat to create pasture for livestock. Herbivores do have an important role in ecosystems, but the high density of farmed herds means pasture is worse for wildlife than natural land. Eating less meat means less destruction of wild places and cutting meat significantly would also free up pasture and cropland that could be returned to nature. Furthermore, a third of all cropland is used to grow animal feed.

Claim: We need animals to convert feed into protein humans can eat

There is no lack of protein, despite the claims. In rich nations, people commonly eat 30-50% more protein than they need. All protein needs can easily be met from plant-based sources, such as beans, lentils, nuts and whole grains.

But animals can play a role in some parts of Africa and Asia; in India, for example, waste from grain production can feed cattle that produce milk. In the rest of the world, where much of the cropland that could be used to feed people is actually used to feed animals, a cut in meat eating is still needed for agriculture to be sustainable.

“What about … ?”

Claim: What about soya milk and tofu that is destroying the Amazon?

It’s not. Well over 96% of soy from the Amazon region is fed to cows, pigs and chickens eaten around the world, according to data from the UN Food and Agriculture Organization, says Poore. Furthermore, 97% of Brazilian soy is genetically modified, which is banned for human consumption in many countries and is rarely used to make tofu and soya milk in any case.

Soya milk also has much smaller emissions and land and water use than cow’s milk. If you are worried about the Amazon, not eating meat remains your best bet.

Claim: Almond milk production is massacring bees and turning land into desert

Some almond production may well cause environmental problems. But that is because rising demand has driven rapid intensification in specific places, like California, which could be addressed with proper regulation. It has nothing to do with what almonds need to grow. Traditional almond production in Southern Europe uses no irrigation at all. It is also perhaps worth noting that the bees that die in California are not wild, but raised by farmers like six-legged livestock.

Like soya milk, almond milk still has lower carbon emissions and land and water use than cow’s milk. But if you are still worried, there are plenty of alternatives, with oat milk usually coming out with the lowest environmental footprint.

Claim: Avocados are causing droughts in places

Again, the problem here is the rapid growth of production in specific regions that lack prudent controls on water use, like Peru and Chile. Avocados generate a third of the emissions of chicken, a quarter of those of pork and a twentieth of those of beef.

If you are still worried about avocados, you can of course choose not to eat them. But it’s not a reason to eat meat instead, which has a much bigger water and deforestation footprint.

The market is likely to solve the problem, as the high demand from consumers for avocados and almonds incentivises farmers elsewhere to grow the crops, thereby alleviating the pressure on current production hotspots.

Claim: Quinoa boom is harming poor farmers in Peru and Bolivia

Quinoa is an amazing food and has seen a boom. But the idea that this took food from the mouths of poor farmers is wrong. “The claim that rising quinoa prices were hurting those who had traditionally produced and consumed it is patently false,” said researchers who studied the issue.

Quinoa was never a staple food, representing just a few percent of the food budget for these people. The quinoa boom has had no effect on their nutrition. The boom also significantly boosted the farmers’ income.

There is an issue with falling soil quality, as the land is worked harder. But quinoa is now planted in China, India and Nepal, as well as in the US and Canada, easing the burden. The researchers are more worried now about the loss of income for South American farmers as the quinoa supply rises and the price falls.

Claim: What about palm oil destroying rainforests and orangutans?

Palm oil plantations have indeed led to terrible deforestation. But that is an issue for everybody, not only vegans: it’s in about half of all products on supermarket shelves, both food and toiletries. The International Union for Conservation of Nature argues that choosing sustainably produced palm oil is actually positive, because other oil crops take up more land.

But Poore says: “We are abandoning millions of acres a year of oilseed land around the world, including rapeseed and sunflower fields in the former Soviet regions, and traditional olive plantations.” Making better use of this land would be preferable to using palm oil, he says.

Healthy questions

Claim: Vegans don’t get enough B12, making them stupid

A vegan diet is generally very healthy, but doctors have warned about the potential lack of B12, an important vitamin for brain function that is found in meat, eggs and cows’ milk. This is easily remedied by taking a supplement.

However, a closer look reveals some surprises. B12 is made by bacteria in soil and in the guts of animals, and free-range livestock ingest it as they graze and peck the ground. But most livestock are not free-range, and the pesticides and antibiotics widely used on farms kill the B12-producing bacteria. The result is that most B12 supplements (90%, according to one source) are fed to livestock, not people.

So there’s a choice here between taking a B12 supplement yourself, or eating an animal that has been given the supplement. Algae are a plant-based source of B12, although the degree of bio-availability is not settled yet. It is also worth noting that a significant number of non-vegans are B12 deficient, especially older people. Among vegans the figure is only about 10%.

Claim: Plant-based alternatives to meat are really unhealthy

The rapid rise of plant-based burgers has prompted some to criticise them as ultra-processed junk food. A plant-based burger could be less healthy if its salt levels are very high, says Springmann, but it is still likely to be healthier than a meat burger when all nutritional factors are considered, particularly fibre. Furthermore, replacing a beef burger with a plant-based alternative is certain to be less damaging to the environment.

There is certainly a strong argument that overall we eat far too much processed food, but that applies just as much to meat eaters as to vegetarians and vegans. And given that most people are unlikely to give up their burgers and sausages any time soon, the plant-based options are a useful alternative.

‘Catching out’ vegans

Claim: Fruit and vegetables aren’t vegan because they rely on animal manure as fertiliser

Most vegans would say it’s simply silly to call fruit and veg animal products, and plenty are produced without animal dung. In any case, there is no reason for horticulture to rely on manure at all. Synthetic fertiliser is easily made from nitrogen in the air, and plenty of organic fertiliser would be available, in the form of human faeces, if we chose to use it more widely. Over-application of fertiliser does cause water pollution in many parts of the world, but that applies to both synthetic fertiliser and manure, and it results from bad management.

Claim: Vegan diets kill millions of insects

Piers Morgan is among those railing against “hypocrite” vegans because commercially kept bees die while pollinating almonds and avocados and combine harvesters “create mass murder of bugs” and small mammals while bringing in the grain harvest. But almost everyone eats these foods, not just vegans.

It is true that insects are in a terrible decline across the planet. But the biggest drivers of this are the destruction of wild habitat, largely for meat production, and widespread pesticide use. If it is insects that you are really worried about, then eating a plant-based organic diet is the option to choose.

Claim: Telling people to eat less meat and dairy is denying vital nutrition to the world’s poorest

A “planetary health diet” published by scientists to meet both global health and environmental needs was criticised by journalist Joanna Blythman: “When ideologues living in affluent countries pressurise poor countries to eschew animal foods and go plant-based, they are displaying crass insensitivity, and a colonial White Saviour mindset.”

In fact, says Springmann, who was part of the team behind the planetary health diet, it would improve nutritional intake in all regions, including poorer regions where starchy foods currently dominate diets. The big cuts in meat and dairy are needed in rich nations. In other parts of the world, many healthy, traditional diets are already low in animal products.

On the road

Claim: Transport emissions mean that eating plants from all over the world is much worse than local meat and dairy

“‘Eating local’ is a recommendation you hear often [but] is one of the most misguided pieces of advice,” says Hannah Ritchie, at the University of Oxford. “Greenhouse gas emissions from transportation make up a very small amount of the emissions from food and what you eat is far more important than where your food traveled from.”

Beef and lamb have many times the carbon footprint of most other foods, she says. So whether the meat is produced locally or shipped from the other side of the world, plants will still have much lower carbon footprints. Transport accounts for only about 0.5% of the total emissions of beef and 2% of those of lamb.

The reason for this is that almost all food transported long distances is carried by ships, which can accommodate huge loads and are therefore fairly efficient. For example, the shipping emissions for avocados crossing the Atlantic are about 8% of their total footprint. Air freight does of course result in high emissions, but very little food is transported this way; it accounts for just 0.16% of food miles.
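To make the arithmetic behind Ritchie’s point concrete: if transport is a fixed share of a food’s total footprint, then buying locally can at best remove that share and nothing more. The sketch below is a simplified illustration, assuming that "local" means zero transport emissions (an idealised best case) and using only the shares quoted above. The article gives no absolute footprint figures, so it works in percentages of each food’s own total rather than comparing foods with one another.

```python
# Transport share of each food's total greenhouse-gas footprint, as quoted in the article.
TRANSPORT_SHARE = {
    "beef": 0.005,    # about 0.5%
    "lamb": 0.02,     # about 2%
    "avocado": 0.08,  # about 8%, for avocados shipped across the Atlantic
}


def footprint_if_local(total: float, transport_share: float) -> float:
    """Return the footprint left if every bit of transport were eliminated.

    Simplifying assumption: 'eating local' cuts transport emissions to zero
    and changes nothing else about how the food is produced.
    """
    return total * (1 - transport_share)


if __name__ == "__main__":
    for food, share in TRANSPORT_SHARE.items():
        # Express each food's footprint as 100 units so the result reads as a percentage.
        remaining = footprint_if_local(100.0, share)
        print(f"{food}: best-case local footprint is {remaining:.1f}% of the original")
```

Even in this generous best case, local beef keeps 99.5% of its footprint, which is why what you eat matters far more than how far it travelled.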

Claim: All the farmers who raise livestock would be unemployed if the world went meat-free

Livestock farming is massively subsidised with taxpayers’ money around the world, unlike vegetables and fruit. That money could be used to support more sustainable foods such as beans and nuts instead, and to pay for other valuable services, such as capturing carbon in woodlands and wetlands, restoring wildlife, cleaning water and reducing flood risks. Shouldn’t your taxes be used to provide public goods rather than harms?

So, food is complicated. But however much we might wish to continue farming and eating as we do today, the evidence is crystal clear that consuming less meat and more plants is very good for both our health and the planet. The fact that some plant crops have problems is not a reason to eat meat instead.

In the end, you will choose what you eat. If you want to eat healthily and sustainably, you don’t have to stop eating meat and dairy altogether. The planetary health diet allows for a beef burger, some fish and an egg each week, and a glass of milk or some cheese each day.

Food writer Michael Pollan foreshadowed the planetary health diet in 2008 with a simple seven-word rule: “Eat food. Not too much. Mostly plants.” But if you want to have the maximum impact on fighting the climate and wildlife crisis, then it is going to be all plants.
