From elite football to tech giants, our lives are increasingly governed by ‘free’ markets that turn out to be rigged, writes Larry Elliott in The Guardian

‘The organisers of the ESL have taken textbook free-market capitalism and turned it on its head.’ Graffiti showing the Juventus president, Andrea Agnelli, near the headquarters of the Italian Football Federation in Rome. Photograph: Filippo Monteforte/AFP/Getty Images

Back in the days of the Soviet Union, it was common to hear people on the left criticise the Kremlin for pursuing the wrong kind of socialism. There was nothing wrong with the theory, they said; the problem was the warped form of it practised behind the iron curtain.

The same argument has surfaced this week amid the furious response to the now-aborted plans to form a European Super League for 20 football clubs, only this time from the right. Free-market purists say they hate the idea because it is the wrong form of capitalism.

They are both right and wrong about that. Free-market capitalism is supposed to work through competition, which means no barriers to entry for new, innovative products. In football’s case, that would be a go-ahead small club with a manager trying radical new training methods and fielding a crop of players it had nurtured itself or invested in through the transfer market. The league-winning Derby County and Nottingham Forest teams developed by Brian Clough in the 1970s would be an example of this.

Supporters of free-market capitalism say that the system can tolerate inequality provided there is the opportunity to better yourself. They are opposed to cartels and firms that use their market power to protect themselves from smaller and nimbler rivals. Nor do they like rentier capitalism, which is where people can make large returns from assets they happen to own but without doing anything themselves.

The organisers of the ESL have taken textbook free-market capitalism and turned it on its head. Having 15 of the 20 places guaranteed for the founder members represents a colossal barrier to entry and clearly stifles competition. There is not much chance of “creative destruction” if an elite group of clubs can entrench their position by trousering the bulk of the TV receipts that their matches will generate. Owners of the clubs are classic rentier capitalists.

Where the free-market critics of the ESL are wrong is in thinking the ESL is some sort of aberration, a one-off deviation from established practice, rather than a metaphor for what global capitalism has become: an edifice built on piles of debt where the owners of businesses say they love competition but do everything they can to avoid it. Just as the top European clubs have feeder teams that they can exploit for new talent, so the US tech giants have been busy buying up anything that looks like providing competition. It is why Google has bought a slew of rival online advertising vendors and why Facebook bought Instagram and WhatsApp.

For those who want to understand how the economics of football have changed, a good starting point is The Glory Game, a book Hunter Davies wrote about his life behind the scenes with Tottenham Hotspur, one of the wannabe members of the ESL, in the 1971-72 season. (Full disclosure: I am a Spurs season ticket holder.)

Davies’s book devotes a chapter to the directors of Spurs in the early 1970s, who were all lifelong supporters of the club and who received no payment for their services. They lived in Enfield, not in the Bahamas, which is where the current owner, Joe Lewis, resides as a tax exile. These were not radical men. They could not conceive of there ever being women on the board; they opposed advertising inside the ground and were only just coming round to the idea of a club shop to sell official Spurs merchandise. They were conservative in all senses of the word.

In the intervening half century, the men who made their money out of nuts and bolts and waste paper firms in north London have been replaced by oligarchs and hedge funds. TV, barely mentioned in The Glory Game, has arrived with its billions of pounds in revenue. Facilities have improved and the players are fitter, stronger and much better paid than those of the early 1970s. In very few sectors of modern Britain can it be said that the workers receive the full fruits of their labours: the Premier League is one of them.

Even so, the model is not really working and would have worked even less well had the ESL come about. And it goes a lot deeper than greed, something that can hardly be said to be new to football.

No question, greed is part of the story, because for some clubs the prospect of sharing an initial €3.5bn (£3bn) pot of money was just too tempting given their debts, but there was also a problem with the product on offer.

Some of the competitive verve has already been sucked out of football thanks to the concentration of wealth. In the 1970s, there was far more chance of a less prosperous club having their moment of glory: not only did Derby and Forest win the league, but Sunderland, Southampton and Ipswich won the FA Cup. Fans can accept the despair of defeat if they can occasionally hope for the thrill of victory, but the ESL was essentially a way for an elite to insulate itself against the risk of failure.

By presenting their half-baked idea in the way they did, the ESL clubs committed one of capitalism’s cardinal sins: they damaged their own brand. Companies – especially those that rely on loyalty to their product – do that at their peril, not least because it forces politicians to respond. Supporters have power and so do governments, if they choose to exercise it.

The ESL has demonstrated that global capitalism operates on the basis of rigged markets not free markets, and those running the show are only interested in entrenching existing inequalities. It was a truly bad idea, but by providing a lesson in economics to millions of fans it may have performed a public service.

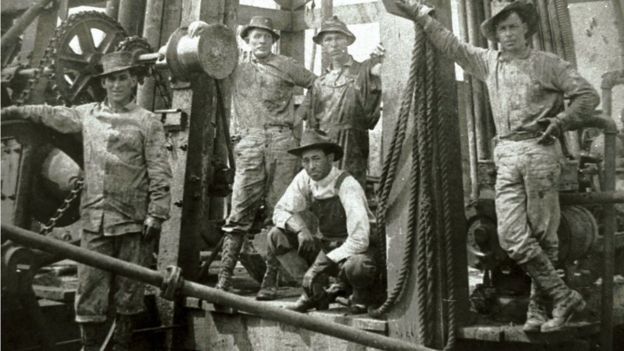

Image copyright: Getty Images. Image caption: A drilling crew poses for a photograph at Spindletop Hill in Beaumont, Texas, where the first Texas oil gusher was discovered in 1901.

Image copyright: Getty Images. Image caption: Servers for data storage in Hafnarfjordur, Iceland, which is trying to make a name for itself in the business of data centres - warehouses that consume enormous amounts of energy to store the information of 3.2 billion internet users.