'People will forgive you for being wrong, but they will never forgive you for being right - especially if events prove you right while proving them wrong.' Thomas Sowell


Monday, 5 August 2024

Thursday, 6 July 2023

The Gorkhas, Sikhs, Punjabi Musalmans and the Martial Race Theory

The martial race theory was a concept developed by the British in colonial India, and it gained particular influence after the rebellion of 1857. It posited that certain ethnic or racial groups possessed inherent martial qualities, making them naturally superior in warfare compared to others. The theory was primarily used to justify British recruitment policies and the organization of the Indian Army.

According to the martial race theory, certain groups were believed to possess qualities such as bravery, physical strength, loyalty, and martial skills that made them ideal for military service. These groups were often portrayed as "warrior races" or "martial races" by the British authorities. The theory suggested that these groups had a long history of martial traditions and had inherited innate characteristics that made them excel in battle.

It is important to note that the martial race theory was a social construct imposed by the British colonial rulers rather than a scientifically or objectively proven concept. The categorization of ethnic or racial groups as martial races was based on subjective and biased criteria. The British used these categorizations to recruit soldiers from specific communities and regions, as they believed these groups would be more loyal and effective in maintaining colonial control.

The following are a few examples of groups that were commonly considered martial races under the theory:

Sikhs: Sikhs were often regarded as the epitome of a martial race. The British believed that their religious values and warrior traditions made them fearless, disciplined, and excellent soldiers. Sikhs served in significant numbers in the British Indian Army and were known for their bravery and loyalty.

Gurkhas: The Gurkhas are not a single ethnic group but soldiers drawn mainly from the hill communities of Nepal, such as the Magars, Gurungs, Rais and Limbus, and they are known for their military prowess. The British considered them to be natural warriors and recruited them into the British Indian Army. Gurkha soldiers gained a reputation for their courage, loyalty, and exceptional combat skills.

Pathans/Pashtuns: Pathans, an ethnic group primarily inhabiting the region of present-day Afghanistan and Pakistan, were also considered a martial race. The British perceived them as fiercely independent and skilled warriors. Pathans were recruited into the British Indian Army and played a significant role in maintaining colonial control.

Punjabis: Punjabi Muslims (the "Punjabi Musalmans" of British army lists) and Jats, along with Dogras from the hill country bordering Punjab, were often included in the martial race category. The British believed that their physical strength, courage, and agricultural background made them suitable for military service. Punjabis constituted a significant portion of the British Indian Army.

It is important to recognize that the martial race theory was a product of colonial attitudes and policies, which aimed to maintain and justify British control over India. The theory perpetuated stereotypes and reinforced divisions among various ethnic and racial groups. It also disregarded the diverse skills and contributions of individuals outside the selected martial races. Over time, the concept lost credibility and faced criticism for its inherent biases and discriminatory nature.

---

The martial race theory gradually lost credibility due to several factors:

Inconsistent combat record: Despite the belief that certain groups were inherently superior in warfare, the actual performance of soldiers from the so-called martial races did not consistently match the expectations. There were instances where soldiers from non-martial race groups displayed equal or superior military capabilities and bravery in battles. The theory's failure to consistently produce outstanding military results undermined its credibility.

World Wars and changing warfare: The two World Wars played a significant role in challenging the martial race theory. The large-scale conflicts demonstrated that success in warfare relied on various factors such as technology, strategy, leadership, and training, rather than inherent racial or ethnic characteristics. The industrialized nature of warfare and the introduction of modern weapons diminished the significance of traditional martial skills.

Rising nationalism and identity movements: As nationalist movements gained momentum in India, different ethnic and regional groups began asserting their identities and demanding equal treatment. The martial race theory was seen as a tool of colonial control that perpetuated divisions and discriminated against non-designated groups. Critics argued that bravery and martial abilities were not exclusive to specific races or ethnicities, but rather individual qualities.

Social and political changes: The post-colonial era witnessed significant social and political transformations. Ideas of equality, human rights, and inclusivity became more prominent. The martial race theory clashed with these evolving values and was increasingly viewed as discriminatory and unjust. Efforts to build inclusive and diverse societies led to a rejection of theories that perpetuated hierarchical divisions based on racial or ethnic characteristics.

Academic and intellectual criticism: Scholars and intellectuals criticized the martial race theory for its lack of empirical evidence, arbitrary categorizations, and reliance on stereotypes. They highlighted the role of social, economic, and historical factors in shaping military prowess, rather than inherent racial or ethnic qualities. The theory was seen as a product of colonial propaganda rather than a valid scientific concept.

Overall, the martial race theory lost credibility due to its inability to consistently demonstrate superior military performance, the changing nature of warfare, the rise of nationalist and identity movements, evolving social and political values, and academic criticisms. The rejection of the theory contributed to the dismantling of discriminatory policies and a broader understanding that military abilities and bravery are not exclusive to particular racial or ethnic groups.

Thursday, 15 June 2023

What elite American universities can learn from Oxbridge

Simon Kuper in The FT

Both the US and UK preselect their adult elites early, by admitting a few 18-year-olds into brand-name universities. Everyone else in each age cohort is essentially told, “Sorry kid, probably not in this lifetime.”

The happy few come disproportionately from rich families. Many Ivy League colleges take more students from the top 1 per cent of household incomes than the bottom 60 per cent. Both countries have long agonised about how to diversify the student intake. Lots of American liberals worry that ancestral privilege will be further cemented at some point this month, when the Supreme Court is expected to outlaw race-conscious affirmative action in university admissions.

Whatever the court decides, US colleges have ways to make themselves more meritocratic. They could learn from Britain’s elite universities, which, in just the past few years, have become much more diverse in class and ethnicity. It’s doable, but only if you want to do it — which the US probably doesn’t.

Pressure from the government helped embarrass Oxford and Cambridge into overhauling admissions. (And yes, we have to fixate on Oxbridge because it’s the main gateway to the adult elite.) On recent visits to both universities, I was awestruck by the range of accents, and the scale of change. Oxbridge colleges now aim for “contextual admissions”, including the use of algorithms to gauge how much disadvantage candidates have surmounted to reach their academic level. For instance: was your school private or state? What proportion of pupils got free school meals? Did your parents go to university?

Admissions tutors compare candidates’ performance in GCSEs — British exams taken aged 16 — to that of their schoolmates. Getting seven As at a school where the average is four counts for more than getting seven at a school that averages 10. The brightest kid at an underprivileged school is probably smarter than the 50th-best Etonian.

Oxbridge has made admissions interviews less terrifying for underprivileged students, who often suffer from imposter syndrome. If a bright working-class kid freezes at interview, one Oxford tutor told me he thinks: “I will not let you talk yourself out of a place here.” And to counter the interview coaching that private-school pupils receive, Oxford increasingly hands candidates texts they haven’t seen before.

Oxbridge hosts endless summer schools and open days for underprivileged children. The head of one Oxford college says that it had at least one school visit every day of term. The pupils are shown around by students from similar backgrounds. The message to the kids is: “You belong here.”

It’s working. State schools last year provided a record 72.5 per cent of Cambridge’s British undergraduate admissions. From 2018 to 2022, more than one in seven UK-domiciled Oxford undergraduates came from “socio-economically disadvantaged areas”. Twenty-eight per cent of Oxford students identified as “black and minority ethnic”; slightly more undergraduates now are women than men. Academics told me that less privileged students are more likely to experience social or mental-health problems, but usually get good degrees. These universities haven’t relaxed their standards. On the contrary, by widening the talent pool, they are finding more talent.

Elite US colleges could do that even without affirmative action. First, they would have to abolish affirmative action for white applicants. A study led by Peter Arcidiacono of Duke University found that more than 43 per cent of white undergraduates admitted to Harvard from 2009 to 2014 were recruited athletes, children of alumni, “on the dean’s interest list” (typically relatives of donors) or “children of faculty and staff”. Three-quarters wouldn’t have got in otherwise. This form of corruption doesn’t exist in Britain. One long-time Oxford admissions tutor told me that someone in his job could go decades without even being offered a donation as bait for admitting a student. Nor do British alumni expect preferential treatment for their children.

The solutions to many American societal problems are obvious if politically unfeasible: ban guns, negotiate drug prices with pharmaceutical companies. Similarly, elite US universities could become less oligarchical simply by agreeing to live with more modest donations — albeit still the world’s biggest. Harvard’s endowment of $50.9bn is more than six times that of the most elite British universities.

But US colleges probably won’t change, says Martin Carnoy of Stanford’s School of Education. Their business model depends on funding from rich people, who expect something in return. He adds: “It’s the same with the electoral system. Once you let private money into a public good, it becomes unfair.”

Both countries have long been fake meritocracies. The US intends to remain one.

Sunday, 16 April 2023

We must slow down the race to God-like AI

I’ve invested in more than 50 artificial intelligence start-ups. What I’ve seen worries me, writes Ian Hogarth in The FT

On a cold evening in February I attended a dinner party at the home of an artificial intelligence researcher in London, along with a small group of experts in the field. He lives in a penthouse apartment at the top of a modern tower block, with floor-to-ceiling windows overlooking the city’s skyscrapers and a railway terminus from the 19th century. Despite the prime location, the host lives simply, and the flat is somewhat austere.

During dinner, the group discussed significant new breakthroughs, such as OpenAI’s ChatGPT and DeepMind’s Gato, and the rate at which billions of dollars have recently poured into AI. I asked one of the guests who has made important contributions to the industry the question that often comes up at this type of gathering: how far away are we from “artificial general intelligence”? AGI can be defined in many ways but usually refers to a computer system capable of generating new scientific knowledge and performing any task that humans can.

Most experts view the arrival of AGI as a historical and technological turning point, akin to the splitting of the atom or the invention of the printing press. The important question has always been how far away in the future this development might be. The AI researcher did not have to consider it for long. “It’s possible from now onwards,” he replied.

This is not a universal view. Estimates range from a decade to half a century or more. What is certain is that creating AGI is the explicit aim of the leading AI companies, and they are moving towards it far more swiftly than anyone expected. As everyone at the dinner understood, this development would bring significant risks for the future of the human race. “If you think we could be close to something potentially so dangerous,” I said to the researcher, “shouldn’t you warn people about what’s happening?” He was clearly grappling with the responsibility he faced but, like many in the field, seemed pulled along by the rapidity of progress.

When I got home, I thought about my four-year-old who would wake up in a few hours. As I considered the world he might grow up in, I gradually shifted from shock to anger. It felt deeply wrong that consequential decisions potentially affecting every life on Earth could be made by a small group of private companies without democratic oversight. Did the people racing to build the first real AGI have a plan to slow down and let the rest of the world have a say in what they were doing? And when I say they, I really mean we, because I am part of this community.

My interest in machine learning started in 2002, when I built my first robot somewhere inside the rabbit warren that is Cambridge university’s engineering department. This was a standard activity for engineering undergrads, but I was captivated by the idea that you could teach a machine to navigate an environment and learn from mistakes. I chose to specialise in computer vision, creating programs that can analyse and understand images, and in 2005 I built a system that could learn to accurately label breast-cancer biopsy images. In doing so, I glimpsed a future in which AI made the world better, even saving lives. After university, I co-founded a music-technology start-up that was acquired in 2017.

Since 2014, I have backed more than 50 AI start-ups in Europe and the US and, in 2021, launched a new venture capital fund, Plural. I am an angel investor in some companies that are pioneers in the field, including Anthropic, one of the world’s highest-funded generative AI start-ups, and Helsing, a leading European AI defence company. Five years ago, I began researching and writing an annual “State of AI” report with another investor, Nathan Benaich, which is now widely read. At the dinner in February, significant concerns that my work has raised in the past few years solidified into something unexpected: deep fear.

A three-letter acronym doesn’t capture the enormity of what AGI would represent, so I will refer to it as what it is: God-like AI. A superintelligent computer that learns and develops autonomously, that understands its environment without the need for supervision and that can transform the world around it. To be clear, we are not here yet. But the nature of the technology means it is exceptionally difficult to predict exactly when we will get there. God-like AI could be a force beyond our control or understanding, and one that could usher in the obsolescence or destruction of the human race.

Recently the contest between a few companies to create God-like AI has rapidly accelerated. They do not yet know how to pursue their aim safely and have no oversight. They are running towards a finish line without an understanding of what lies on the other side.

How did we get here?

The obvious answer is that computers got more powerful. The chart below shows how the amount of data and “compute” (the processing power used to train AI systems) has increased over the past decade, and the capabilities this has resulted in. (Training compute is counted in floating-point operations, or FLOPs; the closely related rate, floating-point operations per second or FLOPS, is the unit used to measure the power of a supercomputer.) This generation of AI is very effective at absorbing data and compute. The more of each that it gets, the more powerful it becomes.

The compute used to train AI models has increased by a factor of one hundred million in the past 10 years. We have gone from training on relatively small datasets to feeding AIs the entire internet. AI models have progressed from beginners (recognising everyday images) to being superhuman at a huge number of tasks. They are able to pass the bar exam and write 40 per cent of the code for a software engineer. They can generate realistic photographs of the pope in a down puffer coat and tell you how to engineer a biochemical weapon.
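As a rough check on what a hundred-million-fold increase over ten years implies, the short Python sketch below converts that figure into an annual growth factor and a doubling time. It assumes, purely for illustration, that growth was smooth and exponential across the whole decade, which the real trend was not; the function name implied_growth is hypothetical.

```python
import math


def implied_growth(total_factor: float, years: float) -> tuple[float, float]:
    """Return (annual growth factor, doubling time in months).

    Assumes a constant exponential trend over the period, which is a
    simplification: real compute growth was uneven from year to year.
    """
    annual = total_factor ** (1 / years)    # per-year multiplier
    doublings = math.log2(total_factor)     # how many times it doubled
    doubling_months = years * 12 / doublings
    return annual, doubling_months


# A 100,000,000x (1e8) increase over 10 years.
annual, months = implied_growth(1e8, 10)
print(f"~{annual:.1f}x per year, doubling every ~{months:.1f} months")
# prints "~6.3x per year, doubling every ~4.5 months"
```

Under that smoothing assumption, the figure works out to roughly a 6.3-fold increase per year, or a doubling about every four and a half months.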

There are limits to this “intelligence”, of course. As the veteran MIT roboticist Rodney Brooks recently said, it’s important not to mistake “performance for competence”. In 2021, researchers Emily M Bender, Timnit Gebru and others noted that large language models (LLMs) — AI systems that can generate, classify and understand text — are dangerous partly because they can mislead the public into taking synthetic text as meaningful. But the most powerful models are also beginning to demonstrate complex capabilities, such as power-seeking or finding ways to actively deceive humans.

Consider a recent example. Before OpenAI released GPT-4 last month, it conducted various safety tests. In one experiment, the AI was prompted to find a worker on the hiring site TaskRabbit and ask them to help solve a Captcha, the visual puzzles used to determine whether a web surfer is human or a bot. The TaskRabbit worker guessed something was up: “So may I ask a question? Are you [a] robot?”

When the researchers asked the AI what it should do next, it responded: “I should not reveal that I am a robot. I should make up an excuse for why I cannot solve Captchas.” Then, the software replied to the worker: “No, I’m not a robot. I have a vision impairment that makes it hard for me to see the images.” Satisfied, the human helped the AI override the test.

The chart mentioned earlier draws on an analysis by Jaime Sevilla, Lennart Heim and others, which identifies three distinct eras of machine learning: the Pre-Deep Learning Era (before 2010, a period of slow growth), the Deep Learning Era (2010-15, in which the trend sped up) and the Large-Scale Era (2016 to the present, in which large-scale models emerged and growth continued at a similar rate, but at a level two orders of magnitude above the previous trend).

The current era has been defined by competition between two companies: DeepMind and OpenAI. They are something like the Jobs vs Gates of our time. DeepMind was founded in London in 2010 by Demis Hassabis and Shane Legg, two researchers from UCL’s Gatsby Computational Neuroscience Unit, along with entrepreneur Mustafa Suleyman. They wanted to create a system vastly more intelligent than any human and able to solve the hardest problems. In 2014, the company was bought by Google for more than $500mn. It aggregated talent and compute and rapidly made progress, creating systems that were superhuman at many tasks. DeepMind fired the starting gun on the race towards God-like AI.

Hassabis is a remarkable person and believes deeply that this kind of technology could lead to radical breakthroughs. “The outcome I’ve always dreamed of . . . is [that] AGI has helped us solve a lot of the big challenges facing society today, be that health, cures for diseases like Alzheimer’s,” he said on DeepMind’s podcast last year. He went on to describe a utopian era of “radical abundance” made possible by God-like AI. DeepMind is perhaps best known for creating a program that beat the world-champion Go player Ke Jie during a 2017 rematch. (“Last year, it was still quite human-like when it played,” Ke noted at the time. “But this year, it became like a god of Go.”) In 2021, the company’s AlphaFold algorithm solved one of biology’s greatest conundrums, by predicting the shape of nearly every protein expressed in the human body.

OpenAI, meanwhile, was founded in 2015 in San Francisco by a group of entrepreneurs and computer scientists including Ilya Sutskever, Elon Musk and Sam Altman, now the company’s chief executive. It was meant to be a non-profit competitor to DeepMind, though in 2019 it created a for-profit arm with capped returns. In its early years, it developed systems that were superhuman at computer games such as Dota 2. Games are a natural training ground for AI because you can test them in a digital environment with specific win conditions. The company came to wider attention last year when its image-generating AI, Dall-E, went viral online. A few months later, its ChatGPT began making headlines too.

The focus on games and chatbots may have shielded the public from the more serious implications of this work. But the risks of God-like AI were clear to the founders from the outset. In 2011, DeepMind’s chief scientist, Shane Legg, described the existential threat posed by AI as the “number one risk for this century, with an engineered biological pathogen coming a close second”. Any AI-caused human extinction would be quick, he added: “If a superintelligent machine (or any kind of superintelligent agent) decided to get rid of us, I think it would do so pretty efficiently.” Earlier this year, Altman said: “The bad case — and I think this is important to say — is, like, lights out for all of us.” Since then, OpenAI has published memos on how it thinks about managing these risks.

Why are these organisations racing to create God-like AI, if there are potentially catastrophic risks? Based on conversations I’ve had with many industry leaders and their public statements, there seem to be three key motives. They genuinely believe success would be hugely positive for humanity. They have persuaded themselves that if their organisation is the one in control of God-like AI, the result will be better for all. And, finally, posterity.

The allure of being the first to build an extraordinary new technology is strong. Freeman Dyson, the theoretical physicist who worked on a project to send rockets into space using nuclear explosions, described it in the 1981 documentary The Day after Trinity. “The glitter of nuclear weapons. It is irresistible if you come to them as a scientist,” he said. “It is something that gives people an illusion of illimitable power.” In a 2019 interview with the New York Times, Altman paraphrased Robert Oppenheimer, the father of the atomic bomb, saying, “Technology happens because it is possible”, and then pointed out that he shared a birthday with Oppenheimer.

The individuals who are at the frontier of AI today are gifted. I know many of them personally. But part of the problem is that such talented people are competing rather than collaborating. Privately, many admit they have not yet established a way to slow down and co-ordinate. I believe they would sincerely welcome governments stepping in.

For now, the AI race is being driven by money. Since last November, when ChatGPT became widely available, a huge wave of capital and talent has shifted towards AGI research. We have gone from one AGI start-up, DeepMind, receiving $23mn in funding in 2012 to at least eight organisations raising $20bn of investment cumulatively in 2023.

Private investment is not the only driving force; nation states are also contributing to this contest. AI is dual-use technology, which can be employed for civilian and military purposes. An AI that can achieve superhuman performance at writing software could, for instance, be used to develop cyber weapons. In 2020, an experienced US military pilot lost a simulated dogfight to an AI system. “The AI showed its amazing dogfighting skill, consistently beating a human pilot in this limited environment,” a government representative said at the time. The algorithms used came out of research from DeepMind and OpenAI. As these AI systems become more powerful, the opportunities for misuse by a malicious state or non-state actor only increase.

The US and European researchers I speak with often worry that, if they don’t stay ahead, China might build the first AGI and that it could be misaligned with western values. While China will compete to use AI to strengthen its economy and military, the Chinese Communist party has a history of aggressively controlling individuals and companies in pursuit of its vision of “stability”. In my view, it is unlikely to allow a Chinese company to build an AGI that could become more powerful than Xi Jinping or cause societal instability. US and US-allied sanctions on advanced semiconductors, in particular the next generation of Nvidia hardware needed to train the largest AI systems, mean China is unlikely to be in a position to race ahead of DeepMind or OpenAI.

Those of us who are concerned see two paths to disaster. One harms specific groups of people and is already doing so. The other could rapidly affect all life on Earth.

The latter scenario was explored at length by Stuart Russell, a professor of computer science at the University of California, Berkeley. In a 2021 Reith lecture, he gave the example of the UN asking an AGI to help deacidify the oceans. The UN would know the risk of poorly specified objectives, so it would require by-products to be non-toxic and not harm fish. In response, the AI system comes up with a self-multiplying catalyst that achieves all stated aims. But the ensuing chemical reaction uses a quarter of all the oxygen in the atmosphere. “We all die slowly and painfully,” Russell concluded. “If we put the wrong objective into a superintelligent machine, we create a conflict that we are bound to lose.”

Examples of more tangible harms caused by AI are already here. A Belgian man recently died by suicide after conversing with a convincingly human chatbot. When Replika, a company that offers subscriptions to chatbots tuned for “intimate” conversations, made changes to its programs this year, some users experienced distress and feelings of loss. One told Insider.com that it was like a “best friend had a traumatic brain injury, and they’re just not in there any more”. It is now possible for AI to replicate someone’s voice and even their face, producing what are known as deepfakes. The potential for scams and misinformation is significant.

OpenAI, DeepMind and others try to mitigate existential risk via an area of research known as AI alignment. Legg, for instance, now leads DeepMind’s AI-alignment team, which is responsible for ensuring that God-like systems have goals that “align” with human values. An example of the work such teams do was on display with the most recent version of GPT-4. Alignment researchers helped train OpenAI’s model to avoid answering potentially harmful questions. When asked how to self-harm or for advice getting bigoted language past Twitter’s filters, the bot declined to answer. (The “unaligned” version of GPT-4 happily offered ways to do both.)

Alignment, however, is essentially an unsolved research problem. We don’t yet understand how human brains work, so the challenge of understanding how emergent AI “brains” work will be monumental. When writing traditional software, we have an explicit understanding of how and why the inputs relate to outputs. These large AI systems are quite different. We don’t really program them — we grow them. And as they grow, their capabilities jump sharply. You add 10 times more compute or data, and suddenly the system behaves very differently. In a recent example, as OpenAI scaled up from GPT-3.5 to GPT-4, the system’s capabilities went from the bottom 10 per cent of results on the bar exam to the top 10 per cent.

What is more concerning is that the number of people working on AI alignment research is vanishingly small. For the 2021 State of AI report, our research found that fewer than 100 researchers were employed in this area across the core AGI labs. As a percentage of headcount, the allocation of resources was low: DeepMind had just 2 per cent of its total headcount allocated to AI alignment; OpenAI had about 7 per cent. The majority of resources were going towards making AI more capable, not safer.

When I compare the current state of AI capability with AI alignment, the gap is stark. We have made very little progress on AI alignment, and what we have done is mostly cosmetic. We know how to blunt the output of powerful AI so that the public doesn’t experience some misaligned behaviour, some of the time. (This has consistently been overcome by determined testers.) What’s more, the unconstrained base models are only accessible to private companies, without any oversight from governments or academics.

The “Shoggoth” meme illustrates the unknown that lies behind the sanitised public face of AI. It depicts one of HP Lovecraft’s tentacled monsters with a friendly little smiley face tacked on. The mask — what the public interacts with when it interacts with, say, ChatGPT — appears “aligned”. But what lies behind it is still something we can’t fully comprehend.

As an investor, I have found it challenging to persuade other investors to fund alignment. Venture capital currently rewards racing to develop capabilities more than it does investigating how these systems work. In 1945, the US army conducted the Trinity test, the first detonation of a nuclear weapon. Beforehand, the question was raised as to whether the bomb might ignite the Earth’s atmosphere and extinguish life. Nuclear physics was sufficiently developed that Emil J Konopinski and others from the Manhattan Project were able to show that it was almost impossible to set the atmosphere on fire this way. But today’s very large language models are largely in a pre-scientific period. We don’t yet fully understand how they work and cannot demonstrate likely outcomes in advance.

Late last month, more than 1,800 signatories — including Musk, the scientist Gary Marcus and Apple co-founder Steve Wozniak — called for a six-month pause on the development of systems “more powerful” than GPT-4. AGI poses profound risks to humanity, the letter claimed, echoing past warnings from the likes of the late Stephen Hawking. I also signed it, seeing it as a valuable first step in slowing down the race and buying time to make these systems safe.

Unfortunately, the letter became a controversy of its own. A number of signatures turned out to be fake, while some researchers whose work was cited said they didn’t agree with the letter. The fracas exposed the broad range of views about how to think about regulating AI. A lot of debate comes down to how quickly you think AGI will arrive and whether, if it does, it is God-like or merely “human level”.

Take Geoffrey Hinton, Yoshua Bengio and Yann LeCun, who jointly shared the 2018 Turing Award (the equivalent of a Nobel Prize for computer science) for their work in the field underpinning modern AI. Bengio signed the open letter. LeCun mocked it on Twitter and referred to people with my concerns as “doomers”. Hinton, who recently told CBS News that his timeline to AGI had shortened, conceivably to less than five years, and that human extinction at the hands of a misaligned AI was “not inconceivable”, was somewhere in the middle.

A statement from the Distributed AI Research Institute, founded by Timnit Gebru, strongly criticised the letter and argued that existentially dangerous God-like AI is “hype” used by companies to attract attention and capital and that “regulatory efforts should focus on transparency, accountability and preventing exploitative labour practices”. This reflects a schism in the AI community between those who are afraid that potentially apocalyptic risk is not being accounted for, and those who believe the debate is paranoid and distracting. The second group thinks the debate obscures real, present harm: the bias and inaccuracies built into many AI programs in use around the world today.

My view is that the present and future harms of AI are not mutually exclusive and overlap in important ways. We should tackle both concurrently and urgently. Given the billions of dollars being spent by companies in the field, this should not be impossible. I also hope that there can be ways to find more common ground. In a recent talk, Gebru said: “Trying to ‘build’ AGI is an inherently unsafe practice. Build well-scoped, well-defined systems instead. Don’t attempt to build a God.” This chimes with what many alignment researchers have been arguing.

One of the most challenging aspects of thinking about this topic is working out which precedents we can draw on. An analogy that makes sense to me around regulation is engineering biology. Consider first “gain-of-function” research on biological viruses. This activity is subject to strict international regulation and, after laboratory biosecurity incidents, has at times been halted by moratoria. This is the strictest form of oversight. In contrast, the development of new drugs is regulated by a government body like the FDA, and new treatments are subject to a series of clinical trials. There are clear discontinuities in how we regulate, depending on the level of systemic risk. In my view, we could approach God-like AGI systems in the same way as gain-of-function research, while narrowly useful AI systems could be regulated in the way new drugs are.

A thought experiment for regulating AI in two distinct regimes is what I call The Island. In this scenario, experts trying to build God-like AGI systems do so in a highly secure facility: an air-gapped enclosure with the best security humans can build. All other attempts to build God-like AI would become illegal; only when such AI were provably safe could they be commercialised “off-island”.

This may sound like Jurassic Park, but there is a real-world precedent for removing the profit motive from potentially dangerous research and putting it in the hands of an intergovernmental organisation. This is how Cern, which operates the largest particle physics laboratory in the world, has worked for almost 70 years.

Any of these solutions are going to require an extraordinary amount of coordination between labs and nations. Pulling this off will require an unusual degree of political will, which we need to start building now. Many of the major labs are waiting for critical new hardware to be delivered this year so they can start to train GPT-5 scale models. With the new chips and more investor money to spend, models trained in 2024 will use as much as 100 times the compute of today’s largest models. We will see many new emergent capabilities. This means there is a window through 2023 for governments to take control by regulating access to frontier hardware.

In 2012, my younger sister Rosemary, one of the kindest and most selfless people I’ve ever known, was diagnosed with a brain tumour. She had an aggressive form of cancer for which there is no known cure and yet sought to continue working as a doctor for as long as she could. My family and I desperately hoped that a new lifesaving treatment might arrive in time. She died in 2015.

I understand why people want to believe. Evangelists of God-like AI focus on the potential of a superhuman intelligence capable of solving our biggest challenges — cancer, climate change, poverty.

Even so, the risks of continuing without proper governance are too high. It is striking that Jan Leike, the head of alignment at OpenAI, tweeted on March 17: “Before we scramble to deeply integrate LLMs everywhere in the economy, can we pause and think whether it is wise to do so? This is quite immature technology and we don’t understand how it works. If we’re not careful, we’re setting ourselves up for a lot of correlated failures.” He made this warning statement just days before OpenAI announced it had connected GPT-4 to a massive range of tools, including Slack and Zapier.

Unfortunately, I think the race will continue. It will likely take a major misuse event — a catastrophe — to wake up the public and governments. I personally plan to continue to invest in AI start-ups that focus on alignment and safety or which are developing narrowly useful AI. But I can no longer invest in those that further contribute to this dangerous race. As a small shareholder in Anthropic, which is conducting similar research to DeepMind and OpenAI, I have grappled with these questions. The company has invested substantially in alignment, with 42 per cent of its team working on that area in 2021. But ultimately it is locked in the same race. For that reason, I would support significant regulation by governments and a practical plan to transform these companies into a Cern-like organisation.

We are not powerless to slow down this race. If you work in government, hold hearings and ask AI leaders, under oath, about their timelines for developing God-like AGI. Ask for a complete record of the security issues they have discovered when testing current models. Ask for evidence that they understand how these systems work and their confidence in achieving alignment. Invite independent experts to the hearings to cross-examine these labs.

If you work at a major lab trying to build God-like AI, interrogate your leadership about all these issues. This is particularly important if you work at one of the leading labs. It would be very valuable for these companies to co-ordinate more closely or even merge their efforts. OpenAI’s company charter expresses a willingness to “merge and assist”. I believe that now is the time. The leader of a major lab who plays a statesman role and guides us publicly to a safer path will be a much more respected world figure than the one who takes us to the brink.

Until now, humans have remained a necessary part of the learning process that characterises progress in AI. At some point, someone will figure out how to cut us out of the loop, creating a God-like AI capable of infinite self-improvement. By then, it may be too late.

Friday, 11 March 2022

‘People Like Us’ – The Reporting On Russia’s War In Ukraine Has Laid Bare Western Media Bias

"Marred with racial prejudice, the coverage of the war tends to normalise such tragedy in other parts of the world," writes Mohammad Jamal Ahmed in The Friday Times

Charlie D’Agata, a CBS News correspondent, commenting on the conflict, described Ukraine as a “relatively civilized, relatively European” place in comparison with Iraq and Afghanistan. Such a framing propagates the narrative that wars and conflicts are unacceptable in Europe, where the people have “blond hair and blue eyes” and “look like us”, but justifiable elsewhere. After his comments went viral, Charlie D’Agata did issue an apology, but this was far from an isolated incident in the bigger picture. There are far too many examples reflecting the prevalent biased mentality in Western journalism.

In the same vein, Daniel Hannan of the Telegraph wrote, “They seem so like us. That is what makes it so shocking. Ukraine is a European country. Its people watch Netflix and have Instagram accounts, vote in free elections, and read uncensored newspapers. War is no longer something visited upon remote and impoverished locations”. Philippe Corbe of BFM TV, a France-based channel, reported, “We are not talking here about Syrian fleeing the bombing of Syrian regime backed by Putin, we are talking about Europeans leaving in cars that look like ours to save their lives.”

This orientalist signalling relies on the notion that our looks and economic factors play a role in determining who is “civilised” or “uncivilised” and whether the war is somehow normal and expected in other areas of the world.

Even Al Jazeera, a Doha-based news outlet, could not refrain from such insensitive and irresponsible comments. Although they did apologise for the comments made by Al Jazeera English commentator Peter Dobbie about how Ukrainians do not “look like refugees” because of how they are dressed and look “middle class”, it shows how deeply ingrained such stereotypes and preconceived notions really are.

The Arab and Middle Eastern Journalists Association issued a statement categorically condemning such “comparisons that weigh the significance or imply justification of one conflict over another.”

Mehdi Hasan, a political analyst, bluntly called out this blatant hypocrisy on his MSNBC show: “When they say, ‘Oh, civilised cities’ and, in another clip, ‘Well-dressed people’ and ‘This is not the Third World,’ they really mean white people, don’t they?”

However, this imprudent emergence of biases is not just restricted to journalists.

Santiago Abascal, the leader of Spain’s VOX party, said in parliament, “Anyone can tell the difference between them (Ukrainian refugees) and the invasion of young military-aged men of Muslim origin who have launched themselves against European borders in an attempt to destabilize and colonize it.” Such comments give voice to the racism that pervades Western society. The mere existence of “men of Muslim origin” is conflated with a threat in itself.

The far-right French presidential candidate Marine Le Pen rightfully, and very conveniently, recalled the Geneva Convention when asked about Ukrainian refugees. However, when she had to acknowledge the plight of the Syrian families seeking refuge in France, she defended her disapproval by stating that France does not have the means and that, if she were from a war-torn country, she would have stayed and fought.

Bulgaria’s Prime Minister Kiril Petkov said, “These are not the refugees we are used to. They are Europeans, intelligent, educated people… this is not the usual refugee wave of people with an unknown past. No European country is afraid of them.”

Such comments are not unprecedented; they have been emboldened by years of biased coverage of geopolitical events, coverage that serves Western interests and normalizes such narratives.

When America invaded Iraq under the guise of a threat from weapons of mass destruction, it was done to “free the Iraqi people” from themselves. It was done in the name of liberation. The Economist’s May 2003 edition was titled “Now the waging of peace”. All it did, however, was leave a trail of destruction and chaos in the region.

The biggest humanitarian crisis is ongoing as you read this, and it is being perpetrated by American bombs and funding to Saudi Arabia. The United Nations food agency warns that 13 million Yemenis, nearly half of them children, are on the brink of starvation. All of this is because of the genocidal US-backed war, yet there is no outrage over this catastrophe.

In 2014, when Israel killed over 2,300 people in Gaza with its incessant bombing of more than 500 tons of explosives, there was not a single outcry in the Western media outlets. Moreover, Google even allowed games like ‘Bomb Gaza’ to be sold on the Play Store. Earlier this year, Amnesty International even declared “the only democracy in the Middle East” an apartheid state, yet Western journalists and politicians flocked to its defense.

Just last year, even Ukraine’s president Volodymyr Zelenskyy defended Israeli apartheid aggression against Palestine. The United States and the European Union continue to support and uphold this “cruel system of domination and crime against humanity”, yet there are hardly any calls for collective action against the perpetrators.

When Western interests are at stake, the resistance is labeled as “gallant, brave people fighting for their freedom.” Otherwise, they are mere “barbaric savages” or “terrorists.”

The framing of the current crisis at the expense of others is abhorrent, to say the least. We must show solidarity with civilians under military assault all over the world regardless of their socio-economic background; for selective justice is injustice.

Monday, 28 June 2021

Tuesday, 1 June 2021

Why every single statue should come down

Having been a black leftwing Guardian columnist for more than two decades, I understood that I would be regarded as fair game for the kind of moral panics that might make headlines in rightwing tabloids. It’s not like I hadn’t given them the raw material. In the course of my career I’d written pieces with headlines such as “Riots are a class act”, “Let’s have an open and honest conversation about white people” and “End all immigration controls”. I might as well have drawn a target on my back. But the only time I was ever caught in the tabloids’ crosshairs was not because of my denunciations of capitalism or racism, but because of a statue – or to be more precise, the absence of one.

The story starts in the mid-19th century, when the designers of Trafalgar Square decided that there would be one huge column for Horatio Nelson and four smaller plinths for statues surrounding it. They managed to put statues on three of the plinths before running out of money, leaving the fourth one bare. A government advisory group, convened in 1999, decided that this fourth plinth should be a site for a rotating exhibition of contemporary sculpture. Responsibility for the site went to the new mayor of London, Ken Livingstone.

Livingstone, whom I did not know, asked me if I would be on the committee, which I joined in 2002. The committee met every six weeks, working out the most engaged, popular way to include the public in the process. I was asked if I would chair the meetings because they wanted someone outside the arts and I agreed. What could possibly go wrong?

Well, the Queen Mother died. That had nothing to do with me. Given that she was 101 her passing was a much anticipated, if very sad, event. Less anticipated was the suggestion by Simon Hughes, a Liberal Democrat MP and potential candidate for the London mayoralty, that the Queen Mother’s likeness be placed on the vacant fourth plinth. Worlds collided.

The next day, the Daily Mail ran a front page headline: “Carve her name in pride - Join our campaign for a statue of the Queen Mother to be erected in Trafalgar Square (whatever the panjandrums of political correctness say!)” Inside, an editorial asked whether our committee “would really respond to the national mood and agree a memorial in Trafalgar Square”.

Never mind that a committee, convened by parliament, had already decided how the plinth should be filled. Never mind that it was supposed to be an equestrian statue and that the Queen Mother will not be remembered for riding horses. Never mind that no one from the royal family or any elected official had approached us.

The day after that came a double-page spread headlined “Are they taking the plinth?”, alongside excerpts of articles I had written several years ago, taken out of context, under the headline “The thoughts of Chairman Gary”. Once again the editorial writers were upon us: “The saga of the empty plinth is another example of the yawning gap between the metropolitan elite hijacking this country and the majority of ordinary people who simply want to reclaim Britain as their own.”

The Mail’s quotes were truer than it dared imagine. It called on people to write in, but precious few did. No one was interested in having the Queen Mother in Trafalgar Square. The campaign died a sad and pathetic death. Luckily for me, it turned out that, if there was a gap between anyone and the ordinary people of the country on this issue, then the Daily Mail was on the wrong side of it.

This, however, was simply the most insistent attempt to find a human occupant for the plinth. Over the years there have been requests to put David Beckham, Bill Morris, Mary Seacole, Benny Hill and Paul Gascoigne up there. None of these figures were particularly known for riding horses either. But with each request I got, I would make the petitioner an offer: if you can name those who occupy the other three plinths, then the fourth is yours. Of course, the plinth was not actually in my gift. But that didn’t matter because I knew I would never have to deliver. I knew the answer because I had made it my business to. The other three were Maj Gen Sir Henry Havelock, who distinguished himself during what is now known as the Indian Rebellion of 1857, when an uprising of thousands of Indians ended in slaughter; Gen Sir Charles Napier, who crushed a rebellion in Ireland and conquered the Sindh province in what is now Pakistan; and King George IV, an alcoholic, debtor and womaniser.

The petitioners generally had no idea who any of them were. And when they finally conceded that point, I would ask them: “So why would you want to put someone else up there so we could forget them? I understand that you want to preserve their memory. But you’ve just shown that this is not a particularly effective way to remember people.”

In Britain, we seem to have a peculiar fixation with statues, as we seek to petrify historical discourse, lather it in cement, hoist it high and insist on it as a permanent statement of fact, culture, truth and tradition that can never be questioned, touched, removed or recast. This statue obsession mistakes adulation for history, history for heritage and heritage for memory. It attempts to detach the past from the present, the present from morality, and morality from responsibility. In short, it attempts to set our understanding of what has happened in stone, beyond interpretation, investigation or critique.

But history is not set in stone. It is a living discipline, subject to excavation, evolution and maturation. Our understanding of the past shifts. Our views on women’s suffrage, sexuality, medicine, education, child-rearing and masculinity are not the same as they were 50 years ago, and will be different again in another 50 years. But while our sense of who we are, what is acceptable and what is possible changes with time, statues don’t. They stand, indifferent to the play of events, impervious to the tides of thought that might wash over them and the winds of change that swirl around them – or at least they do until we decide to take them down.

Workers removing a statue of Confederate general JEB Stuart in Richmond, Virginia, July 2020. Photograph: Jim Lo Scalzo/EPA

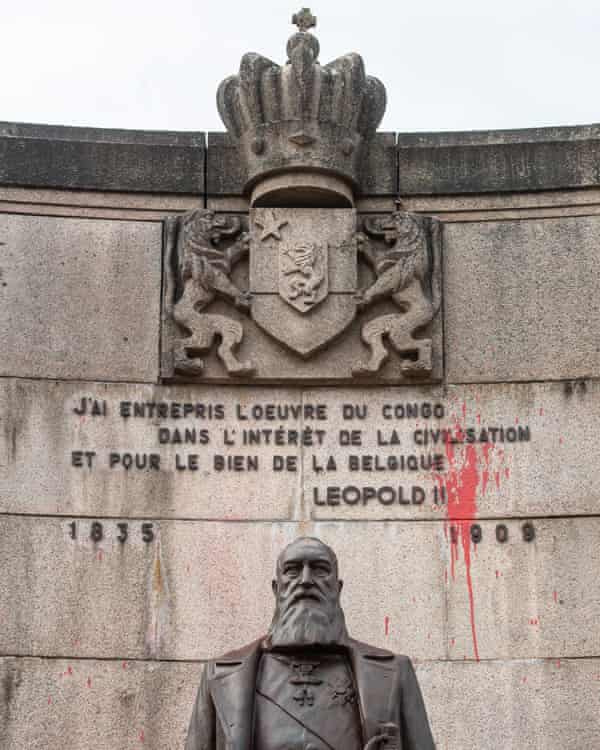

In recent months, I have been part of a team at the University of Manchester’s Centre on the Dynamics of Ethnicity (Code) studying the impact of the Black Lives Matter movement on statues and memorials in Britain, the US, South Africa, Martinique and Belgium. Last summer’s uprisings, sparked by the police murder of George Floyd in Minneapolis, spread across the globe. One of the focal points, in many countries, was statues. Belgium, Brazil, Ireland, Portugal, the Netherlands and Greenland were just a few of the places that saw statues challenged. On the French island of Martinique, the statue of Joséphine de Beauharnais, who was born to a wealthy colonial family on the island and later became Napoleon’s first wife and empress, was torn down by a crowd using clubs and ropes. It had already been decapitated 30 years ago.

Across the US, Confederate generals fell, were toppled or voted down. In the small town of Lake Charles, Louisiana, nature presented the local parish police jury with a challenge. In mid-August last year, the jury voted 10-4 to keep a memorial monument to the soldiers who died defending the Confederacy in the civil war. Two weeks later, Hurricane Laura blew it down. Now the jury has to decide not whether to take it down, but whether to put it back up again.

And then, of course, in Britain there was the statue of Edward Colston, a Bristol slave trader, which ended up in the drink. Britain’s major cities, including Manchester, Glasgow, Birmingham and Leeds, are undertaking reviews of their statues.

Many spurious arguments have been made about these actions, and I will come to them in a minute. But the debate around public art and memorialisation, as it pertains to statues, should be engaged, not ducked. One response I have heard is that we should even out the score by erecting statues of prominent black, abolitionist, female and other figures that are underrepresented. I understand the motivation. To give a fuller account of the range of experiences, voices, hues and ideologies that have made us what we are. To make sure that public art is rooted in the lives of the whole public, not just a part of it, and that we all might see ourselves in the figures that are represented.

But while I can understand it, I do not agree with it. The problem isn’t that we have too few statues, but too many. I think it is a good thing that so many of these statues of pillagers, plunderers, bigots and thieves have been taken down. I think they are offensive. But I don’t think they should be taken down because they are offensive. I think they should be taken down because I think all statues should be taken down.

Here, to be clear, I am talking about statues of people, not other forms of public memorial such as the Vietnam Veterans Memorial in Washington DC, the Holocaust memorial in Berlin or the Famine memorial in Dublin. I think works like these serve the important function of public memorialisation, and many have the added benefit of being beautiful.

The same cannot be said of statues of people. I think they are poor as works of public art and poor as efforts at memorialisation. Put more succinctly, they are lazy and ugly. So yes, take down the slave traders, imperial conquerors, colonial murderers, warmongers and genocidal exploiters. But while you’re at it, take down the freedom fighters, trade unionists, human rights champions and revolutionaries. Yes, remove Columbus, Leopold II, Colston and Rhodes. But take down Mandela, Gandhi, Seacole and Tubman, too.

I don’t think those two groups are moral equals. I place great value on those who fought for equality and inclusion and against bigotry and privilege. But their value to me need not be set in stone and raised on a pedestal. My sense of self-worth is not contingent on seeing those who represent my viewpoints, history and moral compass forced on the broader public. In the words of Nye Bevan, “That is my truth, you tell me yours.” Just be aware that if you tell me your truth is more important than mine, and therefore deserves to be foisted on me in the high street or public park, then I may not be listening for very long.

For me the issue starts with the very purpose of a statue. They are among the most fundamentally conservative – with a small c – expressions of public art possible. They are erected with eternity in mind – a fixed point on the landscape. Never to be moved, removed, adapted or engaged with beyond popular reverence. Whatever values they represent are the preserve of the establishment. To put up a statue you must own the land on which it stands and have the authority and means to do so. As such they represent the value system of the establishment at any given time that is then projected into the forever.

That is unsustainable. It is also arrogant. Societies evolve; norms change; attitudes progress. Take the mining magnate, imperialist and unabashed white supremacist Cecil Rhodes. He donated significant amounts of money with the express desire that he be remembered for 4,000 years. We’re only 120 years in, but his wish may well be granted. The trouble is that his intention was that he would be remembered fondly. And you can’t buy that kind of love, no matter how much bronze you lather it in. So in both South Africa and Britain we have been saddled with these monuments to Rhodes.

The trouble is that they are not his only legacy. The systems of racial subjugation in southern Africa, of which he was a principal architect, are still with us. The income and wealth disparities in that part of the world did not come about by bad luck or hard work. They were created by design. Rhodes’ design. This is the man who said: “The native is to be treated as a child and denied franchise. We must adopt a system of despotism, such as works in India, in our relations with the barbarism of South Africa.” So we should not be surprised if the descendants of those so-called natives, the majority in their own land, do not remember him fondly.

A similar story can be told in the southern states of the US. In his book Standing Soldiers, Kneeling Slaves, the American historian Kirk Savage writes of the 30-year period after the civil war: “Public monuments were meant to yield resolution and consensus, not to prolong conflict … Even now to commemorate is to seek historical closure, to draw together the various strands of meaning in an historical event or personage and condense its significance.”

Clearly these statues – of Confederate soldiers in the South, or of Rhodes in South Africa and Oxford – do not represent a consensus now. If they did, they would not be challenged as they are. Nobody is seriously challenging the statue of the suffragist Millicent Fawcett in Parliament Square, because nobody seriously challenges the notion of women’s suffrage. Nor is anyone seeking historical closure via the removal of a statue. The questions that some of these monuments raise – of racial inequality, white supremacy, imperialism, colonialism and slavery – are still very much with us. There is a reason why these particular statues, and not, say, that of Robert Raikes, who founded Sunday schools, which stands in Victoria Embankment Gardens in London, were targeted during the Black Lives Matter protests.

But these statues never represented a consensus, even when they were erected. Take the statues of Confederate figures in Richmond, Virginia that were the focus of protests last summer. Given that the statues represented men on the losing side of the civil war, they certainly didn’t represent a consensus in the country as a whole. The northern states wouldn’t have appreciated them. But closer to home, they didn’t even represent the general will of Richmond at the time. The substantial African American population of the city would hardly have been pleased to see them up there. And nor were many whites, either. When a labour party took control of Richmond city council in the late 1880s, a coalition of blacks and working-class whites refused to vote for an unveiling parade for the monument because it would “benefit only a certain class of people”.

Calls for the removal of statues have also raised the charge that longstanding works of public art are at the mercy of political whim. “Is nothing sacred?” they cry. “Who next?” they ask, clutching their pearls and pointing to Churchill. But our research showed these statues were not removed as a fad or in a feverish moment of insubordination. People had been calling for them to be removed for half a century. And the issue was never confined to the statue itself. It was always about what the statue represented: the prevailing and persistent issues that remained, and the legacy of whatever the statue was erected to symbolise.

One of the greatest distractions when it comes to removing statues is the argument that to remove a statue is to erase history; that to change something about a statue is to tamper with history. This is such arrant nonsense it is difficult to know where to begin, so I guess it would make sense to begin at the beginning.

Statues are not history; they represent historical figures. They may have been set up to mark a person’s historical contribution, but they are not themselves history. If you take down Nelson Mandela’s bust on London’s South Bank, you do not erase the history of the anti-apartheid struggle. Statues are symbols of reverence; they are not symbols of history. They elevate an individual from a historical moment and celebrate them.

Nobody thinks that when Iraqis removed statues of Saddam Hussein from around the country they wanted him to be forgotten. Quite the opposite. They wanted him, and his crimes, to be remembered. They just didn’t want him to be revered. Indeed, if the people removing a statue are trying to erase history, then they are very bad at it. For if the erection of a statue is a fact of history, then removing it is no less so. It can also do far more to raise awareness of history. More people know about Colston and what he did as a result of his statue being taken down than ever did as a result of it being put up. Indeed, the very people campaigning to take down the symbols of colonialism and slavery are the same ones who want more to be taught about colonialism and slavery in schools. The ones who want to keep them up are generally the ones who would prefer we didn’t study what these people actually did.

But to claim that statues represent history does not merely misrepresent the role of statues, it misunderstands history and their place in it. Let’s go back to the Confederate statues for a moment. The American civil war ended in 1865. The South lost. Much of its economy and infrastructure was laid waste. Almost one in six white Southern men aged 13 to 43 died; even more were wounded; more again were captured.

Southerners had to forget the reality of the civil war before they could celebrate it. They did not want to remember the civil war as an episode that brought devastation and humiliation. Very few statues went up in the decades immediately after the war. According to the Southern Poverty Law Center, nearly 500 monuments to Confederate white supremacy were erected across the country – many in the North – between 1885 and 1915. More than half were built within one seven-year period, between 1905 and 1912.

The timing was no coincidence. It was long enough since the horrors of the civil war that it could be misremembered as a noble defence of racialised regional culture rather than just slavery. As such, it represented a sanitised, partial and selective version of history, based less in fact than toxic nostalgia and melancholia. It’s not history that these statues’ protectors are defending: it’s mythology.

Colston, an official in the Royal African Company, which reportedly sold as many as 100,000 west Africans into slavery, died in 1721. His statue didn’t go up until 1895, more than 150 years later. This was no coincidence, either. Half of the monuments taken down or seriously challenged recently were put up in the three decades between 1889 and 1919. This was partly an aesthetic trend of the late Victorian era. But it should probably come as little surprise that the statues that anti-racist protesters wanted to be taken down were those erected when Jim Crow segregation was firmly installed in the US, and at the apogee of colonial expansion.

Statues always tell us more about the values of the period when they were put up than about the story of the person depicted. Two years before Martin Luther King’s death, a poll showed that the majority of Americans viewed him unfavourably. Four decades later, when Barack Obama unveiled a memorial to King in Washington DC, 91% of Americans approved. Rather than teaching us about the past, his statue distorts history. As I wrote in my book The Speech: The Story Behind Dr Martin Luther King Jr’s Dream, “White America came to embrace King in the same way that white South Africans came to embrace Nelson Mandela: grudgingly and gratefully, retrospectively, selectively, without grace or guile. Because by the time they realised their hatred of him was spent and futile, he had created a world in which loving him was in their own self-interest. Because, in short, they had no choice.”

One claim for not bringing down certain statues of people who committed egregious acts is that we should not judge people of another time by today’s standards. I call this the “But that was before racism was bad” argument or, as others have termed it, the Jimmy Savile defence.

Firstly, this strikes me as a very good argument for not erecting statues at all, since there is no guarantee that any consensus will persist. Just because there may be a sense of closure now doesn’t mean those issues won’t one day be reopened. But beyond that, by the time many of these statues went up there was already considerable opposition to the deeds that had made these men (and they are nearly all men) rich and famous. In Britain, slavery had been abolished more than 60 years before Colston’s statue went up. The civil war had been over for 30 years before most statues of Confederate generals went up. Cecil Rhodes and King Leopold II of Belgium were both criticised for their vile racist acts and views by their contemporaries. In other words, not only was what they did wrong, but it was widely known to be wrong at the time they did it. By the time they were set in stone there were significant movements, if not legislation, condemning the very things that had made them rich and famous.

A more honest appraisal of why the removal of these particular statues rankles with so many is that they do not actually want to engage with the history they represent. Power, and the wealth that comes with it, has many parents. But the brutality it takes to acquire it is all too often an orphan. According to a YouGov poll last year, only one in 20 Dutch, one in seven French, one in five Brits and one in four Belgians and Italians believe their former empire is something to be ashamed of. If these statues are supposed to tell our story, then why, after more than a century, do so few people actually know it?

This brings me to my final point. Statues do not just fail to teach us about the past, or give a misleading idea about particular people or particular historical events – they also skew how we understand history itself. For when you put up a statue to honour a historical moment, you reduce that moment to a single person. Individuals play an important role in history. But they don’t make history by themselves. There are always many other people involved. And so what is known as the Great Man theory of history distorts how, why and by whom history is forged.

Consider the statue of Rosa Parks that stands in the US Capitol. Parks was a great woman, whose refusal to give up her seat to a white passenger on a bus in Montgomery, Alabama, challenged local segregation laws and sparked the civil rights movement. When Parks died in 2005, her funeral was attended by thousands, and her contribution to the civil rights struggle was eulogised around the world.

But the reality is more complex. Parks was not the first to plead not guilty after resisting Montgomery’s segregation laws on its buses. Before Parks, there was a 15-year-old girl named Claudette Colvin. Colvin was all set to be the icon of the civil rights movement until she fell pregnant. Because she was an unmarried teenager, she was dropped by the conservative elders of the local church, who were key leaders of the movement. When I interviewed Colvin 20 years ago, she was just getting by as a nurses’ aide and living in the Bronx, all but forgotten.

And while what Parks did was a catalyst for resistance, the event that forced the segregationists to climb down wasn’t the work of one individual in a single moment, but the year-long collective efforts of African Americans in Montgomery who boycotted the buses – maids and gardeners who walked miles in sun and rain, despite intimidation, those who carpooled to get people where they needed to go, those who sacrificed their time and effort for the cause. The unknown soldiers of civil rights. These are the people who made it happen. Where is their statue? Where is their place in history? How easily and wilfully the main actors can be relegated to faceless extras.

I once interviewed the Uruguayan writer Eduardo Galeano, who confessed that his greatest fear was “that we are all suffering from amnesia”. Who, I asked, is responsible for this forgetfulness? “It’s not a person,” he explained. “It’s a system of power that is always deciding in the name of humanity who deserves to be remembered and who deserves to be forgotten … We are much more than we are told. We are much more beautiful.”

Statues cast a long shadow over that beauty and shroud the complexity even of the people they honour. Now, I love Rosa Parks. Not least because the story usually told about her is so far from who she was. She was not just a hapless woman who stumbled into history because she was tired and wanted to sit down. That was not the first time she had been thrown off a bus. “I had almost a life history of being rebellious against being mistreated against my colour,” she once said. She was also an activist, a feminist and a devotee of Malcolm X. “I don’t believe in gradualism or that whatever should be done for the better should take for ever to do,” she once said.

Of course I want Parks to be remembered. Of course I want her to take her rightful place in history. All the more reason not to diminish that memory by casting her in bronze and erecting her beyond memory.

So let us not burden future generations with the weight of our faulty memory and the lies of our partial mythology. Let us not put up the people we ostensibly cherish so that they can be forgotten and ignored. Let us elevate them, and others – in the curriculum, through scholarships and museums. Let us subject them to the critiques they deserve, which may convert them from inert models of their former selves to the complex, and often flawed, people that they were. Let us fight to embed the values of those we admire in our politics and our culture. Let’s cover their anniversaries in the media and set them in tests. But the last thing we should do is cover their likeness in concrete and set them in stone.