'People will forgive you for being wrong, but they will never forgive you for being right - especially if events prove you right while proving them wrong.' Thomas Sowell

Thursday, 9 May 2019

Wednesday, 8 May 2019

Why doesn’t Britain have a Huawei of its own?

Aditya Chakrabortty in The Guardian

Chances are that you have learned rather a lot about Huawei. That the Chinese giant is one of the world’s most controversial companies. That security experts, those people we pay to be paranoid on our behalf, warn its telecoms kit could be used by Beijing to spy on us. That Theresa May was begged by cabinet colleagues to keep the firm well away from our 5G network – yet ignored them. And that one or more senior ministers were so eager to prove their concern for national security that they leaked details of their meeting, thus breaching national security.

So you can already guess what will happen when Donald Trump’s secretary of state, Mike Pompeo, meets May and her foreign secretary, Jeremy Hunt, on Wednesday. Once the pleasantries about Harry and Meghan’s baby are over, top of America’s agenda will be to warn No 10 of the threat Huawei poses to British privacy – and to restate that Washington may retaliate by freezing London out of its intelligence network.

Maybe you recall whistleblower Edward Snowden and his revelations, published in this paper, about how US surveillance services are themselves harvesting millions of people’s phone calls and internet usage. Or possibly you are too busy gasping at the haplessness with which a Conservative-run government has allowed itself to be dragged into an escalating trade war between Washington and Beijing. Many are the questions raised by this affair, but among the largest is one I have not seen asked. Namely, where is Britain’s Huawei? How does one of the world’s most advanced economies end up without any major telecoms equipment maker of its own, and having to buy the vital stuff from a company that enjoys, according to the FBI, strong links with both the Chinese Communist party and the People’s Liberation Army?

Well, the answer is that the UK did have one. It was one of the largest and most famous industrial companies in the world. And it was finally killed off within the lifetime of every person reading this article, just over a decade ago. It was called the General Electric Company, or GEC, and the story of how it came to die explains and illuminates much of the mess the country is in today.

At its height, in the early 80s, GEC was not a company at all. It was an empire comprising around 180 different firms and employing about 250,000 people. It built everything from x-ray machines to ships, and it was huge in telecoms and defence electronics. At the helm was Arnold Weinstock, who took the reins in 1963 and spent the next three decades building it into a colossus, securing his place as postwar Britain’s most renowned industrialist.

The son of Jewish Polish immigrants, Weinstock never quite slotted into his role in the British establishment. He was known to be fanatical about cost-cutting, terrible at managing people, and only really lit up by breeding racehorses and visiting Milan’s La Scala opera house. Journalists visiting his Mayfair headquarters found the carpet threadbare and the paint peeling off the walls. A correspondent for the Economist more used to convivial three-bottle lunches with captains of industry came away complaining that GEC’s guests “have never been known to receive so much as a glass of water”.

That Economist profile was headlined Lord of Dullest Virtue, which sums up how both boss and business were seen: steadily profitable yet cautious and utterly unfashionable in the Britain of the 80s, which fancied a turbocharge. Weinstock went unloved by Margaret Thatcher, who preferred the corporate-raiding asset-stripper James Hanson (or Lord Moneybags, as he was dubbed). The post-Big Bang City bankers glanced at GEC’s vast spread of unglamorous businesses, out of step in an era of specialisation, and its shy boss better suited to a Rhineland boardroom – and buried both in plump-vowelled disdain.

Perhaps the pinstriped jeering got to Weinstock. Even as he protested “we’re not a company to render excitement”, he too began indulging in the 1980s business culture of “if it moves, buy it”. Between 1988 and 1998, academics found that GEC did no fewer than 79 “major restructuring events”: buying or selling units, or setting up joint ventures. But it was after Weinstock stepped down in 1996 that all hell broke loose. His replacement was an accountant, George Simpson, who had made his name, as the Guardian sniffed, “selling Rover to the Germans”. The new finance director, John Mayo, came from the merchant-banking world detested by Weinstock. Together the two men looked at the giant cash pile salted away by their predecessor – and set about spending it, and then some.

They sold the old businesses and bought shiny new ones; they flogged off dowdy and snapped up exciting. In just one financial year, 1999-2000, they bought no fewer than 15 companies, from America to Australia. Suddenly, GEC – or Marconi, as the rump was rebranded – was beloved by the bankers, who marvelled at the commissions coming their way, and the reporters, who had headlines to write.

Then came the dotcom bust, and the new purchases went south. A company that had been trading at £12.50 a share was now worth only four pence a pop. In the mid-2000s, Marconi’s most vital client, BT, passed it over for a contract that went instead to … Huawei. Weinstock didn’t live to see the death of his beloved firm but among his last reported remarks was: “I’d like to string [Simpson and Mayo] up from a high tree and let them swing there for a long time.”

This is not a story about genius versus idiocy, let alone good against evil. Weinstock was not quite as dull as made out, nor did he avoid all errors. But it is one of the most important episodes in recent British history – because it highlights the clash between two business cultures. On the one hand is Weinstock, building an institution over decades; on the other is the frenetic wheeler-dealing of Simpson and Mayo, mesmerised by quarterly figures and handing shareholders a fast buck. The road GEC took is the one also taken by ICI and other household names. It is also the one opted for by Britain as a whole, whose political class decided it cared neither who owned our industrial giants or venerable banks or Fleet Street newspapers, nor what they did with them. That is why our capitalism is today dominated by unsavoury, get-rich-quick merchants in the Philip Green mould.

Firms such as GEC and ICI used to invest heavily in research and development, notes Sheffield University’s pro-vice-chancellor for innovation, Richard Jones. Now the UK has been overtaken in R&D by all major western competitors. Even China, a vastly poorer economy in terms of GDP per capita, is more research-intensive than the UK.

Now Britons laud businessmen such as James Dyson who make most of their stuff in Asia. As a result, we rely on the rest of the world to come here and buy our assets. And even on something as relatively simple as telecoms equipment, we can’t help but be pulled into other countries’ strategic battles.

Tuesday, 7 May 2019

Red Meat Republic - The Story of Beef

Exploitation and predatory pricing drove the transformation of the US beef industry – and created the model for modern agribusiness. By Joshua Specht in The Guardian

The meatpacking mogul Jonathan Ogden Armour could not abide socialist agitators. It was 1906, and Upton Sinclair had just published The Jungle, an explosive novel revealing the grim underside of the American meatpacking industry. Sinclair’s book told the tale of an immigrant family’s toil in Chicago’s slaughterhouses, tracing the family’s physical, financial and emotional collapse. The Jungle was not Armour’s only concern. The year before, the journalist Charles Edward Russell’s book The Greatest Trust in the World had detailed the greed and exploitation of a packing industry that came to the American dining table “three times a day … and extorts its tribute”.

In response to these attacks, Armour, head of the enormous Chicago-based meatpacking firm Armour & Co, took to the Saturday Evening Post to defend himself and his industry. Where critics saw filth, corruption and exploitation, Armour saw cleanliness, fairness and efficiency. If it were not for “the professional agitators of the country”, he claimed, the nation would be free to enjoy an abundance of delicious and affordable meat.

Armour and his critics could agree on this much: they lived in a world unimaginable 50 years before. In 1860, most cattle lived, died and were consumed within a few hundred miles’ radius. By 1906, an animal could be born in Texas, slaughtered in Chicago and eaten in New York. Americans rich and poor could expect to eat beef for dinner. The key aspects of modern beef production – highly centralised, meatpacker-dominated and low-cost – were all pioneered during that period.

For Armour, cheap beef and a thriving centralised meatpacking industry were the consequence of emerging technologies such as the railroad and refrigeration coupled with the business acumen of a set of honest and hard-working men like his father, Philip Danforth Armour. According to critics, however, a capitalist cabal was exploiting technological change and government corruption to bankrupt traditional butchers, sell diseased meat and impoverish the worker.

Ultimately, both views were correct. The national market for fresh beef was the culmination of a technological revolution, but it was also the result of collusion and predatory pricing. The industrial slaughterhouse was a triumph of human ingenuity as well as a site of brutal labour exploitation. Industrial beef production, with all its troubling costs and undeniable benefits, reflected seemingly contradictory realities.

Beef production would also help drive far-reaching changes in US agriculture. Fresh-fruit distribution began with the rise of the meatpackers’ refrigerator cars, which they rented to fruit and vegetable growers. Production of wheat, perhaps the US’s greatest food crop, bore the meatpackers’ mark. In order to manage animal feed costs, Armour & Co and Swift & Co invested heavily in wheat futures and controlled some of the country’s largest grain elevators. In the early 20th century, an Armour & Co promotional map announced that “the greatness of the United States is founded on agriculture”, and depicted the agricultural products of each US state, many of which moved through Armour facilities.

Beef was a paradigmatic industry for the rise of modern industrial agriculture, or agribusiness. As much as a story of science or technology, modern agriculture is a compromise between the unpredictability of nature and the rationality of capital. This was a lurching, violent process that saw meatpackers displace the risks of blizzards, drought, disease and overproduction on to cattle ranchers. Today’s agricultural system works similarly. In poultry, processors like Perdue and Tyson use an elaborate system of contracts and required equipment and feed purchases to maximise their own profits while displacing risk on to contract farmers. This is true with crop production as well. As with 19th-century meatpacking, relatively small actors conduct the actual growing and production, while companies like Monsanto and Cargill control agricultural inputs and market access.

The transformations that remade beef production between the end of the American civil war in 1865 and the passage of the Federal Meat Inspection Act in 1906 stretched from the Great Plains to the kitchen table. Before the civil war, cattle raising was largely regional, and in most cases, the people who managed cattle out west were the same people who owned them. Then, in the 1870s and 80s, improved transport, bloody victories over the Plains Indians, and the American west’s integration into global capital markets sparked a ranching boom. Meanwhile, Chicago meatpackers pioneered centralised food processing. Using an innovative system of refrigerator cars and distribution centres, they began to distribute fresh beef nationwide. Millions of cattle were soon passing through Chicago’s slaughterhouses each year. By 1890, the Big Four meatpacking companies – Armour & Co, Swift & Co, Morris & Co and the GH Hammond Co – directly or indirectly controlled the majority of the nation’s beef and pork.

But in the 1880s, the big Chicago meatpackers faced determined opposition at every stage from slaughter to sale. Meatpackers fought with workers as they imposed a brutally exploitative labour regime. Meanwhile, attempts to transport freshly butchered beef faced opposition from railroads who found higher profits transporting live cattle east out of Chicago and to local slaughterhouses in eastern cities. Once pre-slaughtered and partially processed beef – known as “dressed beef” – reached the nation’s many cities and towns, the packers fought to displace traditional butchers and woo consumers sceptical of eating meat from an animal slaughtered a continent away.

The consequences of each of these struggles persist today. A small number of firms still control most of the country’s – and by now the world’s – beef. They draw from many comparatively small ranchers and cattle feeders, and depend on a low-paid, mostly invisible workforce. The fact that this set of relationships remains so stable, despite the public’s abstract sense that something is not quite right, is not the inevitable consequence of technological change but the direct result of the political struggles of the late 19th century.

In the slaughterhouse, someone was always willing to take your place. This could not have been far from the mind of 14-year-old Vincentz Rutkowski as he stooped, knife in hand, in a Swift & Co facility in summer 1892. For up to 10 hours each day, Vincentz trimmed tallow from cattle paunches. The job required strong workers who were low to the ground, making it ideal for boys like Rutkowski, who had the beginnings of the strength but not the size of grown men. For the first two weeks of his employment, Rutkowski shared his job with two other boys. As they became more skilled, one of the boys was fired. Another few weeks later, the other was also removed, and Rutkowski was expected to do the work of three people.

The morning that final co-worker left, on 30 June, Rutkowski fell behind the disassembly line’s frenetic pace. After just three hours of working alone, the boy failed to dodge a carcass swinging toward him. It struck his knife hand, driving the tool into his left arm near the elbow. The knife cut muscle and tendon, leaving Rutkowski with lifelong injuries.

The labour regime that led to Rutkowski’s injury was integral to large-scale meatpacking. A packinghouse was a masterpiece of technological and organisational achievement, but that was not enough to slaughter millions of cattle annually. Packing plants needed cheap, reliable and desperate labour. They found it via the combination of mass immigration and a legal regime that empowered management, checked the nascent power of unions and provided limited liability for worker injury. The Big Four’s output depended on worker quantity over worker quality.

Meatpacking lines, pioneered in the 1860s in Cincinnati’s pork packinghouses, were the first modern production lines. The innovation was that they kept products moving continuously, eliminating downtime and requiring workers to synchronise their movements to keep pace. This idea was enormously influential. In his memoirs, Henry Ford explained that his idea for continuous motion assembly “came in a general way from the overhead trolley that the Chicago packers use in dressing beef”.

A Swift and Company meatpacking house in Chicago, circa 1906. Photograph: Granger Historical Picture Archive/Alamy

Packing plants relied on a brilliant intensification of the division of labour. This division increased productivity because it simplified slaughter tasks. Workers could then be trained quickly, and because the tasks were also synchronised, everyone had to match the pace of the fastest worker.

When cattle first entered one of these slaughterhouses, they encountered an armed man walking toward them on an overhead plank. Whether by a hammer swing to the skull or a spear thrust to the animal’s spinal column, the (usually achieved) goal was to kill with a single blow. Assistants chained the animal’s legs and dragged the carcass from the room. The carcass was hoisted into the air and brought from station to station along an overhead rail.

Next, a worker cut the animal’s throat and drained and collected its blood while another group began skinning the carcass. Even this relatively simple process was subdivided throughout the period. Initially the work of a pair, nine different workers handled skinning by 1904. Once the carcass was stripped, gutted and drained of blood, it went into another room, where highly trained butchers cut the carcass into quarters. These quarters were stored in giant refrigerated rooms to await distribution.

But profitability was not just about what happened inside slaughterhouses. It also depended on what was outside: throngs of men and women hoping to find a day’s or a week’s employment. An abundant labour supply meant the packers could easily replace anyone who balked at paltry salaries or, worse yet, tried to unionise. Similarly, productivity increases heightened the risk of worker injury, and therefore were only effective if people could be easily replaced. Fortunately for the packers, late 19th-century Chicago was full of people desperate for work.

Seasonal fluctuations and the vagaries of the nation’s cattle markets further conspired to marginalise slaughterhouse labour. Though refrigeration helped the meatpackers “defeat the seasons” and secure year-round shipping, packing remained seasonal. Packers had to reckon with cattle’s reproductive cycles, and distribution in hot weather was more expensive. The number of animals processed varied day to day and month to month. For packinghouse workers, the effect was a world in which an individual day’s labour might pay relatively well but busy days were punctuated with long stretches of little or no work. The least skilled workers might only find a few weeks or months of employment at a time.

The work was so competitive and the workers so desperate that, even when they had jobs, they often had to wait, without pay, if there were no animals to slaughter. Workers would be fired if they did not show up at a specified time before 9am, but then might wait, unpaid, until 10am or 11am for a shipment. If the delivery was very late, work might continue until late into the night.

Though the division of labour and throngs of unemployed people were crucial to operating the Big Four’s disassembly lines, these factors were not sufficient to maintain a relentless production pace. This required intervention directly on the line. Fortunately for the packers, they could exploit a core aspect of continuous-motion processing: if one person went faster, everyone had to go faster. The meatpackers used pace-setters to force other workers to increase their speed. The packers would pay this select group – roughly one in 10 workers – higher wages and offer secure positions that they only kept if they maintained a rapid pace, forcing the rest of the line to keep up. These pace-setters were resented by their co-workers, and were a vital management tool.

Close supervision of foremen was equally important. Management kept statistics on production-line output, and overseers who slipped in production could lose their jobs. This encouraged foremen to use tactics that management did not want to explicitly support. According to one retired foreman, he was “always trying to cut down wages in every possible way … some of [the foremen] got a commission on all expenses they could save below a certain point”. Though union officials vilified foremen, their jobs were only marginally less tenuous than those of their underlings.

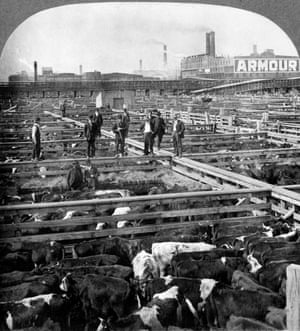

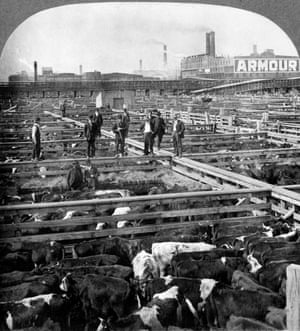

Union Stock Yard in Chicago in 1909. Photograph: Science History Images/Alamy

The effectiveness of de-skilling on the disassembly line rested on an increase in the wages of a few highly skilled positions. Though these workers individually made more money, their employers secured a precipitous decrease in average wages. Previously, a gang composed entirely of general-purpose butchers might all be paid 35 cents an hour. In the new regime, a few highly specialised butchers would receive 50 cents or more an hour, but the majority of other workers would be paid much less than 35 cents. Highly paid workers were given the only jobs in which costly mistakes could be made – damage to hides or expensive cuts of meat – protecting against mistakes or sabotage from the irregularly employed workers. The packers also believed (sometimes erroneously) that the highly paid workers – popularly known as the “butcher aristocracy” – would be more loyal to management and less willing to cooperate with unionisation attempts.

The overall trend was an incredible intensification of output. Splitters, one of the most skilled positions, provide a good example. The economist John Commons wrote that in 1884, “five splitters in a certain gang would get out 800 cattle in 10 hours, or 16 per hour for each man, the wages being 45 cents. In 1894 the speed had been increased so that four splitters got out 1,200 in 10 hours, or 30 per hour for each man – an increase of nearly 100% in 10 years.” Even as the pace increased, the process of de-skilling ensured that wages were constantly moving downward, forcing employees to work harder for less money.

The fact that meatpacking’s profitability depended on a brutal labour regime meant conflicts between labour and management were ongoing, and at times violent. For workers, strikes during the 1880s and 90s were largely unsuccessful. This was the result of state support for management, a willing pool of replacement workers and extreme hostility to any attempts to organise. At the first sign of unrest, Chicago packers would recruit replacement workers from across the US and threaten to permanently fire and blacklist anyone associated with labour organisers. But state support mattered most of all; during an 1886 fight, for instance, authorities “garrisoned over 1,000 men … to preserve order and protect property”. Even when these troops did not clash with strikers, it had a chilling effect on attempts to organise. Ultimately, packinghouse workers could not organise effectively until the very end of the 19th century.

The genius of the disassembly line was not merely in creating productivity gains through the division of labour; it was also that it simplified labour enough that the Big Four could benefit from a growing surplus of workers and a business-friendly legal regime. If the meatpackers needed purely skilled labour, they could not exploit desperate throngs outside their gates. If a new worker could be trained in hours and government was willing to break strikes and limit injury liability, workers became disposable. This enabled the dangerous – and profitable – increases in production speed that maimed Vincentz Rutkowski.

Centralisation of cattle slaughter in Chicago promised high profits. Chicago’s stockyards had started as a clearinghouse for cattle – a point from which animals were shipped live to cities around the country. But when an animal is shipped live, almost 40% of the travelling weight is blood, bones, hide and other inedible parts. The small slaughterhouses and butchers that bought live animals in New York or Boston could sell some of these by-products to tanners or fertiliser manufacturers, but their ability to do so was limited. If the animals could be slaughtered in Chicago, the large packinghouses could realise massive economies of scale on the by-products. In fact, these firms could undersell local slaughterhouses on the actual meat and make their profits on the by-products.

This model only became possible with refinements in refrigerated shipping technology, starting in the 1870s. Yet simply because technology created a possibility did not make its adoption inevitable. Refrigeration sparked a nearly decade-long conflict between the meatpackers and the railroads. American railroads had invested heavily in railcars and other equipment for shipping live cattle, and fought dressed-beef shipment tonne by tonne, charging a different price to move a given weight of dressed beef than to move a similar weight of live cattle. They justified this difference by claiming their goal was to provide the same final cost for beef to consumers – what the railroads called a “principle of neutrality”.

Since beef from animals slaughtered locally was more expensive than Chicago dressed beef, the railroads would charge the Chicago packers more to even things out. This would protect railroad investments by eliminating the packers’ edge, and it could all be justified as “neutral”. Though this succeeded for a time, the packers would defeat this strategy by taking a circuitous route along Canada’s Grand Trunk Railway, a line that was happy to accept dressed-beef business it had no chance of securing otherwise.

Eventually, American railroads abandoned their differential pricing as they saw the collapse of live cattle shipping and became greedy for a piece of the burgeoning dressed-beef trade. But even this was not enough to secure the dominance of the Chicago houses. They also had to contend with local butchers.

In 1889 Henry Barber entered Ramsey County, Minnesota, with 100lb of contraband: fresh beef from an animal slaughtered in Chicago. Barber was no fly-by-night butcher, and was well aware of an 1889 law requiring all meat sold in Minnesota to be inspected locally prior to slaughter. Shortly after arriving, he was arrested, convicted and sentenced to 30 days in jail. But with the support of his employer, Armour & Co, Barber aggressively challenged the local inspection measure.


Today, most local butchers have gone bankrupt and marginal ranchers have had little choice but to accept their marginality. In the US, an increasingly punitive immigration regime makes slaughterhouse work ever more precarious, and “ag-gag” laws that define animal-rights activism as terrorism keep slaughterhouses out of the public eye. The result is that our means of producing our food can seem inevitable, whatever creeping sense of unease consumers might feel. But the history of the beef industry reminds us that this method of producing food is a question of politics and political economy, rather than technology and demographics. Alternate possibilities remain hazy, but if we understand this story as one of political economy, we might be able to fulfil Armour & Company’s old credo – “We feed the world” – using a more equitable system.

The meatpacking mogul Jonathan Ogden Armour could not abide socialist agitators. It was 1906, and Upton Sinclair had just published The Jungle, an explosive novel revealing the grim underside of the American meatpacking industry. Sinclair’s book told the tale of an immigrant family’s toil in Chicago’s slaughterhouses, tracing the family’s physical, financial and emotional collapse. The Jungle was not Armour’s only concern. The year before, the journalist Charles Edward Russell’s book The Greatest Trust in the World had detailed the greed and exploitation of a packing industry that came to the American dining table “three times a day … and extorts its tribute”.

In response to these attacks, Armour, head of the enormous Chicago-based meatpacking firm Armour & Co, took to the Saturday Evening Post to defend himself and his industry. Where critics saw filth, corruption and exploitation, Armour saw cleanliness, fairness and efficiency. If it were not for “the professional agitators of the country”, he claimed, the nation would be free to enjoy an abundance of delicious and affordable meat.

Armour and his critics could agree on this much: they lived in a world unimaginable 50 years before. In 1860, most cattle lived, died and were consumed within a few hundred miles’ radius. By 1906, an animal could be born in Texas, slaughtered in Chicago and eaten in New York. Americans rich and poor could expect to eat beef for dinner. The key aspects of modern beef production – highly centralised, meatpacker-dominated and low-cost – were all pioneered during that period.

For Armour, cheap beef and a thriving centralised meatpacking industry were the consequence of emerging technologies such as the railroad and refrigeration coupled with the business acumen of a set of honest and hard-working men like his father, Philip Danforth Armour. According to critics, however, a capitalist cabal was exploiting technological change and government corruption to bankrupt traditional butchers, sell diseased meat and impoverish the worker.

Ultimately, both views were correct. The national market for fresh beef was the culmination of a technological revolution, but it was also the result of collusion and predatory pricing. The industrial slaughterhouse was a triumph of human ingenuity as well as a site of brutal labour exploitation. Industrial beef production, with all its troubling costs and undeniable benefits, reflected seemingly contradictory realities.

Beef production would also help drive far-reaching changes in US agriculture. Fresh-fruit distribution began with the rise of the meatpackers’ refrigerator cars, which they rented to fruit and vegetable growers. Production of wheat, perhaps the US’s greatest food crop, bore the meatpackers’ mark. In order to manage animal feed costs, Armour & Co and Swift & Co invested heavily in wheat futures and controlled some of the country’s largest grain elevators. In the early 20th century, an Armour & Co promotional map announced that “the greatness of the United States is founded on agriculture”, and depicted the agricultural products of each US state, many of which moved through Armour facilities.

Beef was a paradigmatic industry for the rise of modern industrial agriculture, or agribusiness. As much as a story of science or technology, modern agriculture is a compromise between the unpredictability of nature and the rationality of capital. This was a lurching, violent process that saw meatpackers displace the risks of blizzards, drought, disease and overproduction on to cattle ranchers. Today’s agricultural system works similarly. In poultry, processors like Perdue and Tyson use an elaborate system of contracts and required equipment and feed purchases to maximise their own profits while displacing risk on to contract farmers. This is true with crop production as well. As with 19th-century meatpacking, relatively small actors conduct the actual growing and production, while companies like Monsanto and Cargill control agricultural inputs and market access.

The transformations that remade beef production between the end of the American civil war in 1865 and the passage of the Federal Meat Inspection Act in 1906 stretched from the Great Plains to the kitchen table. Before the civil war, cattle raising was largely regional, and in most cases, the people who managed cattle out west were the same people who owned them. Then, in the 1870s and 80s, improved transport, bloody victories over the Plains Indians, and the American west’s integration into global capital markets sparked a ranching boom. Meanwhile, Chicago meatpackers pioneered centralised food processing. Using an innovative system of refrigerator cars and distribution centres, they began to distribute fresh beef nationwide. Millions of cattle were soon passing through Chicago’s slaughterhouses each year. By 1890, the Big Four meatpacking companies – Armour & Co, Swift & Co, Morris & Co and the GH Hammond Co – directly or indirectly controlled the majority of the nation’s beef and pork.

But in the 1880s, the big Chicago meatpackers faced determined opposition at every stage from slaughter to sale. Meatpackers fought with workers as they imposed a brutally exploitative labour regime. Meanwhile, attempts to transport freshly butchered beef faced opposition from railroads who found higher profits transporting live cattle east out of Chicago and to local slaughterhouses in eastern cities. Once pre-slaughtered and partially processed beef – known as “dressed beef” – reached the nation’s many cities and towns, the packers fought to displace traditional butchers and woo consumers sceptical of eating meat from an animal slaughtered a continent away.

The consequences of each of these struggles persist today. A small number of firms still control most of the country’s – and by now the world’s – beef. They draw from many comparatively small ranchers and cattle feeders, and depend on a low-paid, mostly invisible workforce. The fact that this set of relationships remains so stable, despite the public’s abstract sense that something is not quite right, is not the inevitable consequence of technological change but the direct result of the political struggles of the late 19th century.

In the slaughterhouse, someone was always willing to take your place. This could not have been far from the mind of 14-year-old Vincentz Rutkowski as he stooped, knife in hand, in a Swift & Co facility in summer 1892. For up to 10 hours each day, Vincentz trimmed tallow from cattle paunches. The job required strong workers who were low to the ground, making it ideal for boys like Rutkowski, who had the beginnings of the strength but not the size of grown men. For the first two weeks of his employment, Rutkowski shared his job with two other boys. As they became more skilled, one of the boys was fired. Another few weeks later, the other was also removed, and Rutkowski was expected to do the work of three people.

The morning that final co-worker left, on 30 June, Rutkowski fell behind the disassembly line’s frenetic pace. After just three hours of working alone, the boy failed to dodge a carcass swinging toward him. It struck his knife hand, driving the tool into his left arm near the elbow. The knife cut muscle and tendon, leaving Rutkowski with lifelong injuries.

The labour regime that led to Rutkowski’s injury was integral to large-scale meatpacking. A packinghouse was a masterpiece of technological and organisational achievement, but that was not enough to slaughter millions of cattle annually. Packing plants needed cheap, reliable and desperate labour. They found it via the combination of mass immigration and a legal regime that empowered management, checked the nascent power of unions and provided limited liability for worker injury. The Big Four’s output depended on worker quantity over worker quality.

Meatpacking lines, pioneered in the 1860s in Cincinnati’s pork packinghouses, were the first modern production lines. The innovation was that they kept products moving continuously, eliminating downtime and requiring workers to synchronise their movements to keep pace. This idea was enormously influential. In his memoirs, Henry Ford explained that his idea for continuous motion assembly “came in a general way from the overhead trolley that the Chicago packers use in dressing beef”.

A Swift and Company meatpacking house in Chicago, circa 1906. Photograph: Granger Historical Picture Archive/Alamy

Packing plants relied on a brilliant intensification of the division of labour. This division increased productivity because it simplified slaughter tasks. Workers could then be trained quickly, and because the tasks were also synchronised, everyone had to match the pace of the fastest worker.

When cattle first entered one of these slaughterhouses, they encountered an armed man walking toward them on an overhead plank. Whether by a hammer swing to the skull or a spear thrust to the animal’s spinal column, the (usually achieved) goal was to kill with a single blow. Assistants chained the animal’s legs and dragged the carcass from the room. The carcass was hoisted into the air and brought from station to station along an overhead rail.

Next, a worker cut the animal’s throat and drained and collected its blood while another group began skinning the carcass. Even this relatively simple process was subdivided throughout the period. Initially the work of a pair, nine different workers handled skinning by 1904. Once the carcass was stripped, gutted and drained of blood, it went into another room, where highly trained butchers cut the carcass into quarters. These quarters were stored in giant refrigerated rooms to await distribution.

But profitability was not just about what happened inside slaughterhouses. It also depended on what was outside: throngs of men and women hoping to find a day’s or a week’s employment. An abundant labour supply meant the packers could easily replace anyone who balked at paltry salaries or, worse yet, tried to unionise. Similarly, productivity increases heightened the risk of worker injury, and therefore were only effective if people could be easily replaced. Fortunately for the packers, late 19th-century Chicago was full of people desperate for work.

Seasonal fluctuations and the vagaries of the nation’s cattle markets further conspired to marginalise slaughterhouse labour. Though refrigeration helped the meatpackers “defeat the seasons” and secure year-round shipping, packing remained seasonal. Packers had to reckon with cattle’s reproductive cycles, and distribution in hot weather was more expensive. The number of animals processed varied day to day and month to month. For packinghouse workers, the effect was a world in which an individual day’s labour might pay relatively well but busy days were punctuated with long stretches of little or no work. The least skilled workers might only find a few weeks or months of employment at a time.

The work was so competitive and the workers so desperate that, even when they had jobs, they often had to wait, without pay, if there were no animals to slaughter. Workers would be fired if they did not show up at a specified time before 9am, but then might wait, unpaid, until 10am or 11am for a shipment. If the delivery was very late, work might continue until late into the night.

Though the division of labour and throngs of unemployed people were crucial to operating the Big Four’s disassembly lines, these factors were not sufficient to maintain a relentless production pace. This required intervention directly on the line. Fortunately for the packers, they could exploit a core aspect of continuous-motion processing: if one person went faster, everyone had to go faster. The meatpackers used pace-setters to force other workers to increase their speed. The packers would pay this select group – roughly one in 10 workers – higher wages and offer secure positions that they only kept if they maintained a rapid pace, forcing the rest of the line to keep up. These pace-setters were resented by their co-workers, and were a vital management tool.

Close supervision of foremen was equally important. Management kept statistics on production-line output, and overseers who slipped in production could lose their jobs. This encouraged foremen to use tactics that management did not want to explicitly support. According to one retired foreman, he was “always trying to cut down wages in every possible way … some of [the foremen] got a commission on all expenses they could save below a certain point”. Though union officials vilified foremen, their jobs were only marginally less tenuous than those of their underlings.

Union Stock Yard in Chicago in 1909. Photograph: Science History Images/Alamy

The effectiveness of de-skilling on the disassembly line rested on an increase in the wages of a few highly skilled positions. Though these workers individually made more money, their employers secured a precipitous decrease in average wages. Previously, a gang composed entirely of general-purpose butchers might all be paid 35 cents an hour. In the new regime, a few highly specialised butchers would receive 50 cents or more an hour, but the majority of other workers would be paid much less than 35 cents. Highly paid workers were given the only jobs in which costly mistakes could be made – damage to hides or expensive cuts of meat – protecting against mistakes or sabotage from the irregularly employed workers. The packers also believed (sometimes erroneously) that the highly paid workers – popularly known as the “butcher aristocracy” – would be more loyal to management and less willing to cooperate with unionisation attempts.

The overall trend was an incredible intensification of output. Splitters, one of the most skilled positions, provide a good example. The economist John Commons wrote that in 1884, “five splitters in a certain gang would get out 800 cattle in 10 hours, or 16 per hour for each man, the wages being 45 cents. In 1894 the speed had been increased so that four splitters got out 1,200 in 10 hours, or 30 per hour for each man – an increase of nearly 100% in 10 years.” Even as the pace increased, the process of de-skilling ensured that wages were constantly moving downward, forcing employees to work harder for less money.

The fact that meatpacking’s profitability depended on a brutal labour regime meant conflicts between labour and management were ongoing, and at times violent. For workers, strikes during the 1880s and 90s were largely unsuccessful. This was the result of state support for management, a willing pool of replacement workers and extreme hostility to any attempts to organise. At the first sign of unrest, Chicago packers would recruit replacement workers from across the US and threaten to permanently fire and blacklist anyone associated with labour organisers. But state support mattered most of all; during an 1886 fight, for instance, authorities “garrisoned over 1,000 men … to preserve order and protect property”. Even when these troops did not clash with strikers, their presence had a chilling effect on attempts to organise. Ultimately, packinghouse workers could not organise effectively until the very end of the 19th century.

The genius of the disassembly line was not merely in creating productivity gains through the division of labour; it was also that it simplified labour enough that the Big Four could benefit from a growing surplus of workers and a business-friendly legal regime. If the meatpackers needed purely skilled labour, they could not exploit desperate throngs outside their gates. If a new worker could be trained in hours and government was willing to break strikes and limit injury liability, workers became disposable. This enabled the dangerous – and profitable – increases in production speed that maimed Vincentz Rutkowski.

Centralisation of cattle slaughter in Chicago promised high profits. Chicago’s stockyards had started as a clearinghouse for cattle – a point from which animals were shipped live to cities around the country. But when an animal is shipped live, almost 40% of the travelling weight is blood, bones, hide and other inedible parts. The small slaughterhouses and butchers that bought live animals in New York or Boston could sell some of these by-products to tanners or fertiliser manufacturers, but their ability to do so was limited. If the animals could be slaughtered in Chicago, the large packinghouses could realise massive economies of scale on the by-products. In fact, these firms could undersell local slaughterhouses on the actual meat and make their profits on the by-products.

This model only became possible with refinements in refrigerated shipping technology, starting in the 1870s. Yet simply because technology created a possibility did not make its adoption inevitable. Refrigeration sparked a nearly decade-long conflict between the meatpackers and the railroads. American railroads had invested heavily in railcars and other equipment for shipping live cattle, and fought dressed-beef shipment tonne by tonne, charging a different price for moving a given weight of dressed beef than for a similar weight of live cattle. They justified this difference by claiming their goal was to provide the same final cost for beef to consumers – what the railroads called a “principle of neutrality”.

Since beef from animals slaughtered locally was more expensive than Chicago dressed beef, the railroads would charge the Chicago packers more to even things out. This would protect railroad investments by eliminating the packers’ edge, and it could all be justified as “neutral”. Though this succeeded for a time, the packers would defeat this strategy by taking a circuitous route along Canada’s Grand Trunk Railway, a line that was happy to accept dressed-beef business it had no chance of securing otherwise.

Eventually, American railroads abandoned their differential pricing as they saw the collapse of live cattle shipping and became greedy for a piece of the burgeoning dressed-beef trade. But even this was not enough to secure the dominance of the Chicago houses. They also had to contend with local butchers.

In 1889 Henry Barber entered Ramsey County, Minnesota, with 100lb of contraband: fresh beef from an animal slaughtered in Chicago. Barber was no fly-by-night butcher, and was well aware of an 1889 law requiring all meat sold in Minnesota to be inspected locally prior to slaughter. Shortly after arriving, he was arrested, convicted and sentenced to 30 days in jail. But with the support of his employer, Armour & Co, Barber aggressively challenged the local inspection measure.

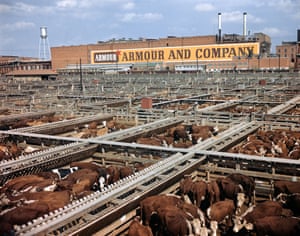

A cattle stockyard in Texas in the 1960s. Photograph: ClassicStock/Alamy

Barber’s arrest was part of a plan to provoke a fight over the Minnesota law, which Armour & Co had lobbied against since it was first drawn up. In federal court, Barber’s lawyers alleged that the statute under which he was convicted violated federal authority over interstate commerce, as well as the US constitution’s privileges and immunities clause. The case would eventually reach the supreme court.

At trial, the state argued that without local, on-the-hoof inspection it was impossible to know if meat had come from a diseased animal. Local inspection was therefore a reasonable part of the state’s police power. Of course, if this argument was upheld, the Chicago houses would no longer be able to ship their goods to any unfriendly state. In response, Barber’s counsel argued that the Minnesota law was a protectionist measure that discriminated against out-of-state butchers. There was no reason meat could not be adequately inspected in Chicago before being sold elsewhere. In Minnesota v Barber (1890), the supreme court ruled the statute unconstitutional and ordered Barber’s release. Armour & Co would go on to dominate the local market.

The Barber ruling was a pivotal moment in a longer fight on the part of the Big Four to secure national distribution. The Minnesota law, and others like it across the country, were fronts in a war waged by local butchers to protect their trade against the encroachment of the “dressed-beef men”. The rise of the Chicago meatpackers was not a gradual process of newer practices displacing old, but a wrenching process of big packers strong-arming and bankrupting smaller competitors. The Barber decision made these fights possible, but it did not make victory inevitable. It was on the back of hundreds of small victories – in rural and urban communities across the US – that the packers built their enormous profits.

Armour and the other big packers did not want to deal directly with customers. That required knowledge of local markets and represented a considerable amount of risk. Instead, they hoped to replace wholesalers, who slaughtered cattle for sale to retail butchers. The Chicago houses wanted local butchers to focus exclusively on selling meat; the packers would handle the rest.

When the packers first entered an area, they wooed a respected butcher. If the butcher would agree to buy from the Chicago houses, he could secure extremely generous rates. But if the local butcher refused these advances, the packers declared war. For example, when the Chicago houses entered Pittsburgh, they approached the veteran butcher William Peters. When he refused to work with Armour & Co, Peters later explained, the Chicago firm’s agent told him: “Mr Peters, if you butchers don’t take hold of it [dressed beef], we are going to open shops throughout the city.” Still, Peters resisted and Armour went on to open its own shops, underselling Pittsburgh’s butchers. Peters told investigators that he and his colleagues “are working for glory now. We do not work for any profit … we have been working for glory for the past three or four years, ever since those fellows came into our town”. Meanwhile, Armour’s share of the Pittsburgh market continued to grow.

Facing these kinds of tactics in cities around the country, local butchers formed protective associations to fight the Chicago houses. Though many associations were local, the Butchers’ National Protective Association of the United States of America aspired to “unite in one brotherhood all butchers and persons engaged in dealing in butchers’ stock”. Organised in 1887, the association pledged to “protect their common interests and those of the general public” through a focus on sanitary conditions. Health concerns were an issue on which traditional butchers could oppose the Chicago houses while appealing to consumers’ collective good. They argued that the Big Four “disregard the public good and endanger the health of the people by selling, for human food, diseased, tainted and other unwholesome meat”. The association further promised to oppose price manipulation of a “staple and indispensable article of human food”.

These associations pushed what amounted to a protectionist agenda using food contamination as a justification. On the state and local level, associations demanded local inspection before slaughter, as was the case with the Minnesota law that Henry Barber challenged. Decentralising slaughter would make wholesale butchering again dependent on local knowledge that the packers could not acquire from Chicago.

But again the packers successfully challenged these measures in the courts. Though the specifics varied by case, judges generally affirmed the argument that local, on-the-hoof inspection violated the constitution’s interstate commerce clause, and often accepted that inspection did not need to be local to ensure safe food. Animals could be inspected in Chicago before slaughter and then the meat itself could be inspected locally. This approach would address public fears about sanitary meat, but without a corresponding benefit to local butchers. Lacking legal recourse and finding little support from consumers excited about low-cost beef, local wholesalers lost more and more ground to the Chicago houses until they disappeared almost entirely.

Upton Sinclair’s The Jungle would become the most famous protest novel of the 20th century. By revealing brutal labour exploitation and stomach-turning slaughterhouse filth, the novel helped spur the passage of the Federal Meat Inspection Act and the Pure Food and Drug Act in 1906. But The Jungle’s heart-wrenching critique of industrial capitalism was lost on readers more worried about the rat faeces that, according to Sinclair, contaminated their sausage. Sinclair later observed: “I aimed at the public’s heart, and by accident I hit it in the stomach.” He hoped for socialist revolution, but had to settle for accurate food labelling.

The industry’s defence against striking workers, angry butchers and bankrupt ranchers – namely, that the new system of industrial production served a higher good – resonated with the public. Abstractly, Americans were worried about the plight of slaughterhouse workers, but they were also wary of those same workers marching in the streets. Similarly, they cared about the struggles of ranchers and local butchers, but also had to worry about their wallets. If packers could provide low prices and reassure the public that their meat was safe, consumers would be happy.

The Big Four meatpacking firms came to control the majority of the US’s beef within a fairly brief period – about 15 years – as a set of relationships that once appeared unnatural began to appear inevitable. Intense de-skilling in slaughterhouse labour only became accepted once organised labour was thwarted, leaving packinghouse labour largely invisible to this day. The slaughter of meat in one place for consumption and sale elsewhere only ceased to appear “artificial and abnormal” once butchers’ protective associations disbanded, and once lawmakers and the public accepted that this centralised industrial system was necessary to provide cheap beef to the people.

These developments are taken for granted now, but they were the product of struggles that could have resulted in radically different standards of production. The beef industry that was established in this period would shape food production throughout the 20th century. There were more major shifts – ranging from trucking-driven decentralisation to the rise of fast food – but the broad strokes would remain the same. Much of the environmental and economic risk of food production would be displaced on to struggling ranchers and farmers, while processors and packers would make money in good times and bad. Benefit to an abstract consumer good would continue to justify the industry’s high environmental and social costs.


Upton Sinclair’s The Jungle would become the most famous protest novel of the 20th century. By revealing brutal labour exploitation and stomach-turning slaughterhouse filth, the novel helped spur the passage of the Federal Meat Inspection Act and the Pure Food and Drug Act in 1906. But The Jungle’s heart-wrenching critique of industrial capitalism was lost on readers more worried about the rat faeces that, according to Sinclair, contaminated their sausage. Sinclair later observed: “I aimed at the public’s heart, and by accident I hit it in the stomach.” He hoped for socialist revolution, but had to settle for accurate food labelling.

The industry’s defence against striking workers, angry butchers and bankrupt ranchers – namely, that the new system of industrial production served a higher good – resonated with the public. Abstractly, Americans were worried about the plight of slaughterhouse workers, but they were also wary of those same workers marching in the streets. Similarly, they cared about the struggles of ranchers and local butchers, but also had to worry about their wallets. If packers could provide low prices and reassure the public that their meat was safe, consumers would be happy.

The Big Four meatpacking firms came to control the majority of the US’s beef within a fairly brief period – about 15 years – as a set of relationships that once appeared unnatural began to appear inevitable. Intense de-skilling in slaughterhouse labour only became accepted once organised labour was thwarted, leaving packinghouse labour largely invisible to this day. The slaughter of meat in one place for consumption and sale elsewhere only ceased to appear “artificial and abnormal” once butchers’ protective associations disbanded, and once lawmakers and the public accepted that this centralised industrial system was necessary to provide cheap beef to the people.

These developments are taken for granted now, but they were the product of struggles that could have resulted in radically different standards of production. The beef industry that was established in this period would shape food production throughout the 20th century. There were more major shifts – ranging from trucking-driven decentralisation to the rise of fast food – but the broad strokes would remain the same. Much of the environmental and economic risk of food production would be displaced on to struggling ranchers and farmers, while processors and packers would make money in good times and bad. Benefit to an abstract consumer good would continue to justify the industry’s high environmental and social costs.

Today, most local butchers have gone bankrupt and marginal ranchers have had little choice but to accept their marginality. In the US, an increasingly punitive immigration regime makes slaughterhouse work ever more precarious, and “ag-gag” laws that define animal-rights activism as terrorism keep slaughterhouses out of the public eye. The result is that our means of producing our food can seem inevitable, whatever creeping sense of unease consumers might feel. But the history of the beef industry reminds us that this method of producing food is a question of politics and political economy, rather than technology and demographics. Alternate possibilities remain hazy, but if we understand this story as one of political economy, we might be able to fulfil Armour & Company’s old credo – “We feed the world” – using a more equitable system.

Saturday, 4 May 2019

Najam Sethi on Pakistan Military's Truths

Najam Sethi in The Friday Times

The world according to Al Bakistan

In a wide-ranging and far-reaching “briefing”, Maj-Gen Asif Ghafoor, DG-ISPR, has laid down the grundnorm of state realism. But consider.

He says there is no organized terrorist infrastructure in Pakistan. True, the military has knocked out Al Qaeda/Tehrik-e-Taliban Pakistan and degraded the Lashkar-e-Jhangvi. But a question mark still hangs over the fate of our “freedom fighter” jihadi organisations which are deemed to be “terrorist” by the international community. That is why Pakistan is struggling to remain off the FATF black list. The Maj-Gen says Pakistan has paid a huge price in the martyrdom of 81,000 citizens in the war against terror. True, but the world couldn’t care less: these homegrown terrorists were the outcome of our own misguided policies. He says that “radicalization” took root in Pakistan due to the Afghan jihad. True, but we were more than willing partners in that project. He says that terrorism came to Pakistan after the international community intervened in Afghanistan. True, but we provided safe haven to the Taliban for nearly twenty years and allowed them to germinate in our womb. He says it was decided last January to “mainstream” proscribed organisations. True, but what took us so long to tackle a troubling problem for twenty years when we were not busy in “kinetic operations”?

Maj-Gen Ghafoor says madrassahs will be mainstreamed under the Education Ministry. A noble thought. However, far from being mainstreamed, the madrassahs have so far refused to even get themselves properly registered as per the National Action Plan. Now the Punjab government and religious parties have refused to comply. Indeed, the Khyber-Pakhtunkhwa government is actively funding some big ones which have provided the backbone of the terrorists.

But it is Maj-Gen Ghafoor’s briefing on the Pashtun Tahaffuz Movement (PTM) that has generated the most controversy. He says the military has responded positively to its demand to de-mine FATA and reduce check posts but is constrained by lack of civil administration in the area and resurfacing of terrorists from across the border. Fair enough. But most of the “disappeared” are still “disappeared” and extra-judicial killings, like that of Naqeebullah Mehsud, are not being investigated. He wants to know why the PTM asked the Afghan government not to hand over the body of Dawar to the Pakistan government. He has accused PTM of receiving funds from hostile intel agencies. If that is proven, it would be a damning indictment of PTM.

The PTM has responded by accusing the military of being unaccountable and repressive, a view that is echoed by many rights groups, media and political parties across the country.

In response, Major General Ghafoor has threatened: “Time is up”. Presumably, the military wants to detain and charge some PTM leaders as “traitors”. That would be most inadvisable. It will only serve to swell the PTM ranks. It may even precipitate an armed resistance, given the propensity of foreign intel agencies to fish in troubled waters. We also know how the various “traitors” in Pakistani history have ended up acquiring heroic proportions while “state realism” dictated otherwise. The list is long and impressive: Fatima Jinnah, Hussein Shaheed Suharwardi, Mujeebur Rahman, G.M. Syed, Khan Abdul Wali Khan, Khair Bux Marri, Ataullah Mengal, Akbar Bugti, etc. etc. We also know the fate of “banned” organisations – they simply reappear under another name.

The PTM has arisen because of the trials and tribulations of the tribal areas in the last decade of terrorism. The Pashtun populace has been caught in the crossfire of insurgency and counter insurgency. The insurgents were once state assets with whom the populace was expected to cooperate. Those who didn’t suffered at the hands of both. But when these “assets” became “liabilities”, those who didn’t cooperate with the one were targeted by the other. In consequence, from racial profiling to disappearances, a whole generation of tribal Pashtuns is scarred by state policies. The PTM is voicing that protest. If neighbouring foreign intel agencies are exploiting their sentiments, it is to be expected as a “realistic” quid pro quo for what Pakistani intel agencies have been serving its neighbours in the past.

If the Pakistani Miltablishment has been compelled by the force of new circumstances to undo its own old misguided policies, it should at least recognize the legitimate grievances of those who have paid the price of its miscalculations and apply balm to their wounds. Every other household in FATA is adversely affected one way or the other by the “war against terrorism”. The PTM is their voice. It needs to be heard. The media should be allowed to cross-examine it. In turn, the PTM must be wary of being tainted by the “foreign hand” and stop abusing the army.

The civilian government and opposition in parliament should sit down with the leaders of the PTM and find an honourable and equitable way to address mutually legitimate and “realistic” concerns. The military’s self-righteous, authoritarian tone must give way to a caring and sympathetic approach. Time’s not up. It has just arrived.
