'People will forgive you for being wrong, but they will never forgive you for being right - especially if events prove you right while proving them wrong.' Thomas Sowell

Showing posts with label order. Show all posts

Thursday, 12 May 2022

Thursday, 29 April 2021

Monday, 26 April 2021

CamKerala3 starts 2021 cricket pre-season with wins

by Girish Menon

The pre-season games played by CamKerala 3 (CK3) on 25 April and 17 April resulted in three wins: a close 2-run defeat of CK2 and two bigger wins over CK1 and Reach CC’s Sunday 11.

The games on 17 April saw CK3 usurp the title of CamKerala champions after beating CK2 in a qualifier before defeating CK1 in the finals. The prevalent hierarchy in CamKerala was shattered and left the defeated teams thirsting for an urgent rematch to restore what they believe is a 'natural' pecking order.

Yesterday saw a rather one-sided game in the fields of Reach, a village 12 miles east of Cambridge. This hitherto 'open toilet' ground now has its own eco-toilet (not usable though!), hence CK3 players continued to discharge water in the open.

CK3 won the toss and scored over 230 runs in a 35-over game. CK3’s cricketers with tennis ball training led the way with a flurry of 4s and 6s. Captain Saheer retired after preventing what could have been a collapse, and the tail wagged so much that Martin could not get a bat. This led to the revival of the contentious issue of whether batsmen should retire after facing a certain number of deliveries.

When CK3 bowled, Saheer set an umbrella field previously seen only when Lillee and Thomson used to bowl way back in the 1970s. There were nearly 3 slips and a gully to Jithin’s good pace and bounce, and this writer dropped two chances behind the wicket. When Martin bowled, his field was short point, short cover, silly mid off… and Sharad caught two batters at short point off Martin’s ‘moonballs’. Then there was a procession of batters and the match ended 15 overs before time.

The pitch had high bounce and deviation on most deliveries whilst some deliveries crept along the ground giving this writer tremendous difficulty behind the sticks. The match ended with one CK3 player locked out of his car and three team mates with fuel running on reserve wondering whether they would make it to the nearest petrol station without trouble.

There was a lot of laughter and merriment while CK3 bowled which I hope will continue for the rest of the season.

Sunday, 17 March 2019

Why we should be honest about failure

Disappointment is the natural order of life. Most people achieve less than they would like, writes JANAN GANESH in The FT

On a long-haul flight, Can You Ever Forgive Me? becomes the first film I have ever watched twice in immediate succession. Released last month in Britain, it recounts the (true) story of Lee Israel, a once-admired, now-marginal writer who resorts to literary forgery to make the rent on her fetid New York hovel. Her one friend is himself a washout who, as per the English tradition, passes off his insolvency as bohemia. Lee pleads with her agent to answer her calls and, in the rawest scene, confesses her crime with a wistful pang for the success it brought her.

There are serviceable jokes (including the profane farewell between the two friends) but the film is ultimately about failure: social, financial, romantic, professional. Put it down to the lachrymose effects of air travel — a phenomenon that has no definitive explanation — but I found the film unusually affecting. Or perhaps it was the shock of seeing failure addressed so unsentimentally, and from so many angles.

Failure — not spectacular failure, but failure as gnawing disappointment — is the natural order of life. Most people will achieve at least a little bit less than they would have liked in their careers. Most marriages wind down from intense passion to a kind of elevated friendship, and even this does not count the roughly four in 10 that collapse entirely. Most businesses fail. Most books fail. Most films fail.

You would hope that something so endemic to the human experience would be constantly discussed and actively prepared for. Instead, what we hear about is failure as a great “teacher”, or as a staging post before eventual success. There are management books about “failing forward”. There are educational methods that teach children the uses of failure. Consult an anthology of quotations about the subject, and it is not just the Paulo Coelho types who sugar-coat it. Churchill, Edison, Capote, at least one Roosevelt: people who should know better almost deny the existence of failure as anything other than a character-building phase.

There are good intentions behind all this. There is also a lot of naivety and squeamishness. For many people, failure will be just that, not a nourishing experience or a bridge to something else. It will be a lasting condition, and it will sting a fair bit.

Our seeming inability to look this fact in the eye is not just unbecoming in and of itself, it also inadvertently makes the experience of failure more harrowing than it needs to be. By reimagining it as just a holding pen before ultimate triumph, those who find themselves stuck there must feel like aberrations, when their experience could not be more banal.

I have known lots of Lee Israels: sensations at 25, under-achievers at 40. Sometimes, there was an identifiable wrong turn — a duff career move, say, or the pram in the hallway. But in most cases, it was just the law of numbers doing its impersonal work.

In almost all professions, there are too few places at the top for too many hopefuls. Lots of blameless people will miss out. Whether at school or through those excruciating management guides, a wiser culture would not romanticise failure as a means to success. It would normalise it as an end.

Look again at that list of names who have minted smarmy epigrams about the utility of failure. It is, you realise, a kind of winner’s wisdom. Those who overcome setbacks to achieve epic feats tend to universalise their atypical experience. Amazingly bad givers of advice, they encourage people to proceed with ambitions that are best sat on, and despise “quitters” when quitting is often the purest common sense.

At the end of Can You Ever Forgive Me?, Lee is an unambiguous failure. There is (and you will excuse the spoilers) no rapprochement with an ex-lover she is plainly not over. There is no conquest of her drink habit. The film could dwell on the real-life Lee’s successful memoir, on which it is based, but only mentions it in text as the screen goes dark. She loses her solitary friend to illness. Even the cat croaks. Why, then, is the film so moreish as to demand an instant repeat over the Atlantic? It is, I think, the honest ventilation of a universal human subject. It is the novelty of being treated as a grown-up.

Sunday, 4 December 2016

Are women evil? - Google, democracy and the truth about internet search

Carole Cadwalladr in The Observer

Here’s what you don’t want to do late on a Sunday night. You do not want to type seven letters into Google. That’s all I did. I typed: “a-r-e”. And then “j-e-w-s”. Since 2008, Google has attempted to predict what question you might be asking and offers you a choice. And this is what it did. It offered me a choice of potential questions it thought I might want to ask: “are jews a race?”, “are jews white?”, “are jews christians?”, and finally, “are jews evil?”

Are Jews evil? It’s not a question I’ve ever thought of asking. I hadn’t gone looking for it. But there it was. I press enter. A page of results appears. This was Google’s question. And this was Google’s answer: Jews are evil. Because there, on my screen, was the proof: an entire page of results, nine out of 10 of which “confirm” this. The top result, from a site called Listovative, has the headline: “Top 10 Major Reasons Why People Hate Jews.” I click on it: “Jews today have taken over marketing, militia, medicinal, technological, media, industrial, cinema challenges etc and continue to face the worlds [sic] envy through unexplained success stories given their inglorious past and vermin like repression all over Europe.”

Google is search. It’s the verb, to Google. It’s what we all do, all the time, whenever we want to know anything. We Google it. The site handles at least 63,000 searches a second, 5.5bn a day. Its mission as a company, the one-line overview that has informed the company since its foundation and is still the banner headline on its corporate website today, is to “organise the world’s information and make it universally accessible and useful”. It strives to give you the best, most relevant results. And in this instance the third-best, most relevant result to the search query “are Jews… ” is a link to an article from stormfront.org, a neo-Nazi website. The fifth is a YouTube video: “Why the Jews are Evil. Why we are against them.”

The sixth is from Yahoo Answers: “Why are Jews so evil?” The seventh result is: “Jews are demonic souls from a different world.” And the 10th is from jesus-is-saviour.com: “Judaism is Satanic!”

There’s one result in the 10 that offers a different point of view. It’s a link to a rather dense, scholarly book review from thetabletmag.com, a Jewish magazine, with the unfortunately misleading headline: “Why Literally Everybody In the World Hates Jews.”

I feel like I’ve fallen down a wormhole, entered some parallel universe where black is white, and good is bad. Though later, I think that perhaps what I’ve actually done is scraped the topsoil off the surface of 2016 and found one of the underground springs that has been quietly nurturing it. It’s been there all the time, of course. Just a few keystrokes away… on our laptops, our tablets, our phones. This isn’t a secret Nazi cell lurking in the shadows. It’s hiding in plain sight.

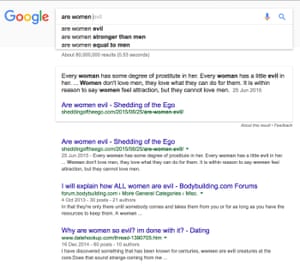

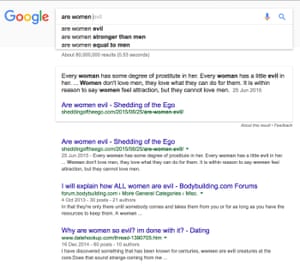

Are women… Google’s search results.

Stories about fake news on Facebook have dominated certain sections of the press for weeks following the American presidential election, but arguably this is even more powerful, more insidious. Frank Pasquale, professor of law at the University of Maryland, and one of the leading academic figures calling for tech companies to be more open and transparent, calls the results “very profound, very troubling”.

He came across a similar instance in 2006 when, “If you typed ‘Jew’ in Google, the first result was jewwatch.org. It was ‘look out for these awful Jews who are ruining your life’. And the Anti-Defamation League went after them and so they put an asterisk next to it which said: ‘These search results may be disturbing but this is an automated process.’ But what you’re showing – and I’m very glad you are documenting it and screenshotting it – is that despite the fact they have vastly researched this problem, it has gotten vastly worse.”

And ordering of search results does influence people, says Martin Moore, director of the Centre for the Study of Media, Communication and Power at King’s College, London, who has written at length on the impact of the big tech companies on our civic and political spheres. “There’s large-scale, statistically significant research into the impact of search results on political views. And the way in which you see the results and the types of results you see on the page necessarily has an impact on your perspective.” Fake news, he says, has simply “revealed a much bigger problem. These companies are so powerful and so committed to disruption. They thought they were disrupting politics but in a positive way. They hadn’t thought about the downsides. These tools offer remarkable empowerment, but there’s a dark side to it. It enables people to do very cynical, damaging things.”

Google is knowledge. It’s where you go to find things out. And evil Jews are just the start of it. There are also evil women. I didn’t go looking for them either. This is what I type: “a-r-e w-o-m-e-n”. And Google offers me just two choices, the first of which is: “Are women evil?” I press return. Yes, they are. Every one of the 10 results “confirms” that they are, including the top one, from a site called sheddingoftheego.com, which is boxed out and highlighted: “Every woman has some degree of prostitute in her. Every woman has a little evil in her… Women don’t love men, they love what they can do for them. It is within reason to say women feel attraction but they cannot love men.”

Next I type: “a-r-e m-u-s-l-i-m-s”. And Google suggests I should ask: “Are Muslims bad?” And here’s what I find out: yes, they are. That’s what the top result says and six of the others. Without typing anything else, simply putting the cursor in the search box, Google offers me two new searches and I go for the first, “Islam is bad for society”. In the next list of suggestions, I’m offered: “Islam must be destroyed.”

Jews are evil. Muslims need to be eradicated. And Hitler? Do you want to know about Hitler? Let’s Google it. “Was Hitler bad?” I type. And here’s Google’s top result: “10 Reasons Why Hitler Was One Of The Good Guys” I click on the link: “He never wanted to kill any Jews”; “he cared about conditions for Jews in the work camps”; “he implemented social and cultural reform.” Eight out of the other 10 search results agree: Hitler really wasn’t that bad.

A few days later, I talk to Danny Sullivan, the founding editor of SearchEngineLand.com. He’s been recommended to me by several academics as one of the most knowledgeable experts on search. Am I just being naive, I ask him? Should I have known this was out there? “No, you’re not being naive,” he says. “This is awful. It’s horrible. It’s the equivalent of going into a library and asking a librarian about Judaism and being handed 10 books of hate. Google is doing a horrible, horrible job of delivering answers here. It can and should do better.”

He’s surprised too. “I thought they stopped offering autocomplete suggestions for religions in 2011.” And then he types “are women” into his own computer. “Good lord! That answer at the top. It’s a featured result. It’s called a “direct answer”. This is supposed to be indisputable. It’s Google’s highest endorsement.” That every woman has some degree of prostitute in her? “Yes. This is Google’s algorithm going terribly wrong.”

I contacted Google about its seemingly malfunctioning autocomplete suggestions and received the following response: “Our search results are a reflection of the content across the web. This means that sometimes unpleasant portrayals of sensitive subject matter online can affect what search results appear for a given query. These results don’t reflect Google’s own opinions or beliefs – as a company, we strongly value a diversity of perspectives, ideas and cultures.”

Google isn’t just a search engine, of course. Search was the foundation of the company but that was just the beginning. Alphabet, Google’s parent company, now has the greatest concentration of artificial intelligence experts in the world. It is expanding into healthcare, transportation, energy. It’s able to attract the world’s top computer scientists, physicists and engineers. It’s bought hundreds of start-ups, including Calico, whose stated mission is to “cure death” and DeepMind, which aims to “solve intelligence”.

Google co-founders Larry Page and Sergey Brin in 2002. Photograph: Michael Grecco/Getty Images

And 20 years ago it didn’t even exist. When Tony Blair became prime minister, it wasn’t possible to Google him: the search engine had yet to be invented. The company was only founded in 1998 and Facebook didn’t appear until 2004. Google’s founders Sergey Brin and Larry Page are still only 43. Mark Zuckerberg of Facebook is 32. Everything they’ve done, the world they’ve remade, has been done in the blink of an eye.

But it seems the implications about the power and reach of these companies are only now seeping into the public consciousness. I ask Rebecca MacKinnon, director of the Ranking Digital Rights project at the New America Foundation, whether it was the recent furore over fake news that woke people up to the danger of ceding our rights as citizens to corporations. “It’s kind of weird right now,” she says, “because people are finally saying, ‘Gee, Facebook and Google really have a lot of power’ like it’s this big revelation. And it’s like, ‘D’oh.’”

MacKinnon has a particular expertise in how authoritarian governments adapt to the internet and bend it to their purposes. “China and Russia are a cautionary tale for us. I think what happens is that it goes back and forth. So during the Arab spring, it seemed like the good guys were further ahead. And now it seems like the bad guys are. Pro-democracy activists are using the internet more than ever but at the same time, the adversary has gotten so much more skilled.”

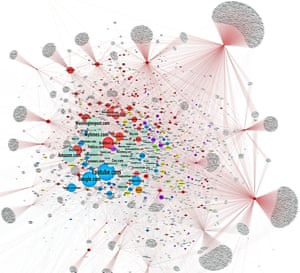

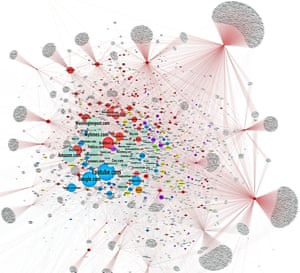

Last week Jonathan Albright, an assistant professor of communications at Elon University in North Carolina, published the first detailed research on how rightwing websites had spread their message. “I took a list of these fake news sites that was circulating, I had an initial list of 306 of them and I used a tool – like the one Google uses – to scrape them for links and then I mapped them. So I looked at where the links went – into YouTube and Facebook, and between each other, millions of them… and I just couldn’t believe what I was seeing.

“They have created a web that is bleeding through on to our web. This isn’t a conspiracy. There isn’t one person who’s created this. It’s a vast system of hundreds of different sites that are using all the same tricks that all websites use. They’re sending out thousands of links to other sites and together this has created a vast satellite system of rightwing news and propaganda that has completely surrounded the mainstream media system.”

He found 23,000 pages and 1.3m hyperlinks. “And Facebook is just the amplification device. When you look at it in 3D, it actually looks like a virus. And Facebook was just one of the hosts for the virus that helps it spread faster. You can see the New York Times in there and the Washington Post and then you can see how there’s a vast, vast network surrounding them. The best way of describing it is as an ecosystem. This really goes way beyond individual sites or individual stories. What this map shows is the distribution network and you can see that it’s surrounding and actually choking the mainstream news ecosystem.”

Like a cancer? “Like an organism that is growing and getting stronger all the time.”

Charlie Beckett, a professor in the school of media and communications at LSE, tells me: “We’ve been arguing for some time now that plurality of news media is good. Diversity is good. Critiquing the mainstream media is good. But now… it’s gone wildly out of control. What Jonathan Albright’s research has shown is that this isn’t a byproduct of the internet. And it’s not even being done for commercial reasons. It’s motivated by ideology, by people who are quite deliberately trying to destabilise the internet.”

A spatial map of the rightwing fake news ecosystem. Jonathan Albright, assistant professor of communications at Elon University, North Carolina, “scraped” 300 fake news sites (the dark shapes on this map) to reveal the 1.3m hyperlinks that connect them together and link them into the mainstream news ecosystem. Here, Albright shows it is a “vast satellite system of rightwing news and propaganda that has completely surrounded the mainstream media system”. Photograph: Jonathan Albright

Albright’s map also provides a clue to understanding the Google search results I found. What these rightwing news sites have done, he explains, is what most commercial websites try to do. They try to find the tricks that will move them up Google’s PageRank system. They try and “game” the algorithm. And what his map shows is how well they’re doing that.

That’s what my searches are showing too. That the right has colonised the digital space around these subjects – Muslims, women, Jews, the Holocaust, black people – far more effectively than the liberal left.

“It’s an information war,” says Albright. “That’s what I keep coming back to.”

But it’s where it goes from here that’s truly frightening. I ask him how it can be stopped. “I don’t know. I’m not sure it can be. It’s a network. It’s far more powerful than any one actor.”

So, it’s almost got a life of its own? “Yes, and it’s learning. Every day, it’s getting stronger.”

The more people who search for information about Jews, the more people will see links to hate sites, and the more they click on those links (very few people click on to the second page of results) the more traffic the sites will get, the more links they will accrue and the more authoritative they will appear. This is an entirely circular knowledge economy that has only one outcome: an amplification of the message. Jews are evil. Women are evil. Islam must be destroyed. Hitler was one of the good guys.
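The circular loop described above can be sketched as a toy simulation. This is an illustrative model only, not Google's ranking algorithm: the site names, starting scores, click weights and the rule that each click adds directly to a site's score are all assumptions made for the example.

```python
def simulate_feedback(scores, rounds=20, page_size=3, clicks_per_round=100):
    """Toy rich-get-richer loop: rank sites by score, hand clicks to the
    first page of results only, then add those clicks back to the score."""
    scores = dict(scores)  # work on a copy so the caller's data is untouched
    # Assumed position weights: the top result draws the most clicks,
    # the second result half as many, the third a third as many, and so on.
    weights = [1.0 / (pos + 1) for pos in range(page_size)]
    total = sum(weights)
    for _ in range(rounds):
        ranked = sorted(scores, key=scores.get, reverse=True)
        # Sites beyond the first page receive no clicks at all.
        for pos, site in enumerate(ranked[:page_size]):
            scores[site] += clicks_per_round * weights[pos] / total
    return scores


# Hypothetical starting scores: the five sites begin almost level.
start = {"site_a": 12.0, "site_b": 11.0, "site_c": 10.0,
         "site_d": 9.5, "site_e": 9.0}
end = simulate_feedback(start)

gap_before = start["site_a"] - start["site_e"]
gap_after = end["site_a"] - end["site_e"]
print(f"gap between top and bottom site: {gap_before:.1f} -> {gap_after:.1f}")
```

In this sketch a small initial lead is enough: the sites that start on the first page pull further ahead every round, while those on the second page never gain anything.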

And the constellation of websites that Albright found – a sort of shadow internet – has another function. More than just spreading rightwing ideology, they are being used to track and monitor and influence anyone who comes across their content. “I scraped the trackers on these sites and I was absolutely dumbfounded. Every time someone likes one of these posts on Facebook or visits one of these websites, the scripts are then following you around the web. And this enables data-mining and influencing companies like Cambridge Analytica to precisely target individuals, to follow them around the web, and to send them highly personalised political messages. This is a propaganda machine. It’s targeting people individually to recruit them to an idea. It’s a level of social engineering that I’ve never seen before. They’re capturing people and then keeping them on an emotional leash and never letting them go.”

Cambridge Analytica, an American-owned company based in London, was employed by both the Vote Leave campaign and the Trump campaign. Dominic Cummings, the campaign director of Vote Leave, has made few public announcements since the Brexit referendum but he did say this: “If you want to make big improvements in communication, my advice is – hire physicists.”

Steve Bannon, founder of Breitbart News and the newly appointed chief strategist to Trump, is on Cambridge Analytica’s board and it has emerged that the company is in talks to undertake political messaging work for the Trump administration. It claims to have built psychological profiles using 5,000 separate pieces of data on 220 million American voters. It knows their quirks and nuances and daily habits and can target them individually.

“They were using 40-50,000 different variants of ad every day that were continuously measuring responses and then adapting and evolving based on that response,” says Martin Moore of King’s College. Because they have so much data on individuals and they use such phenomenally powerful distribution networks, they allow campaigns to bypass a lot of existing laws.

“It’s all done completely opaquely and they can spend as much money as they like on particular locations because you can focus on a five-mile radius or even a single demographic. Fake news is important but it’s only one part of it. These companies have found a way of transgressing 150 years of legislation that we’ve developed to make elections fair and open.”

Did such micro-targeted propaganda – currently legal – swing the Brexit vote? We have no way of knowing. Did the same methods used by Cambridge Analytica help Trump to victory? Again, we have no way of knowing. This is all happening in complete darkness. We have no way of knowing how our personal data is being mined and used to influence us. We don’t realise that the Facebook page we are looking at, the Google page, the ads that we are seeing, the search results we are using, are all being personalised to us. We don’t see it because we have nothing to compare it to. And it is not being monitored or recorded. It is not being regulated. We are inside a machine and we simply have no way of seeing the controls. Most of the time, we don’t even realise that there are controls.

Rebecca MacKinnon says that most of us consider the internet to be like “the air that we breathe and the water that we drink”. It surrounds us. We use it. And we don’t question it. “But this is not a natural landscape. Programmers and executives and editors and designers, they make this landscape. They are human beings and they all make choices.”

But we don’t know what choices they are making. Neither Google nor Facebook makes its algorithms public. Why did my Google search return nine out of 10 search results that claim Jews are evil? We don’t know and we have no way of knowing. Their systems are what Frank Pasquale describes as “black boxes”. He calls Google and Facebook “a terrifying duopoly of power” and has been leading a growing movement of academics who are calling for “algorithmic accountability”. “We need to have regular audits of these systems,” he says. “We need people in these companies to be accountable. In the US, under the Digital Millennium Copyright Act, every company has to have a spokesman you can reach. And this is what needs to happen. They need to respond to complaints about hate speech, about bias.”

Is bias built into the system? Does it affect the kind of results that I was seeing? “There’s all sorts of bias about what counts as a legitimate source of information and how that’s weighted. There’s enormous commercial bias. And when you look at the personnel, they are young, white and perhaps Asian, but not black or Hispanic and they are overwhelmingly men. The worldview of young wealthy white men informs all these judgments.”

Later, I speak to Robert Epstein, a research psychologist at the American Institute for Behavioural Research and Technology, and the author of the study that Martin Moore told me about (and that Google has publicly criticised), showing how search-rank results affect voting patterns. On the other end of the phone, he repeats one of the searches I did. He types “do blacks…” into Google.

“Look at that. I haven’t even hit a button and it’s automatically populated the page with answers to the query: ‘Do blacks commit more crimes?’ And look, I could have been going to ask all sorts of questions. ‘Do blacks excel at sports’, or anything. And it’s only given me two choices and these aren’t simply search-based or the most searched terms right now. Google used to use that but now they use an algorithm that looks at other things. Now, let me look at Bing and Yahoo. I’m on Yahoo and I have 10 suggestions, not one of which is ‘Do black people commit more crime?’

“And people don’t question this. Google isn’t just offering a suggestion. This is a negative suggestion and we know that negative suggestions depending on lots of things can draw between five and 15 more clicks. And this is all programmed. And it could be programmed differently.”

What Epstein’s work has shown is that the contents of a page of search results can influence people’s views and opinions. The type and order of search rankings were shown to influence voters in India in double-blind trials. There were similar results relating to the search suggestions you are offered.

“The general public are completely in the dark about very fundamental issues regarding online search and influence. We are talking about the most powerful mind-control machine ever invented in the history of the human race. And people don’t even notice it.”

Damian Tambini, an associate professor at the London School of Economics, who focuses on media regulation, says that we lack any sort of framework to deal with the potential impact of these companies on the democratic process. “We have structures that deal with powerful media corporations. We have competition laws. But these companies are not being held responsible. There are no powers to get Google or Facebook to disclose anything. There’s an editorial function to Google and Facebook but it’s being done by sophisticated algorithms. They say it’s machines not editors. But that’s simply a mechanised editorial function.”

And the companies, says John Naughton, the Observer columnist and a senior research fellow at Cambridge University, are terrified of acquiring editorial responsibilities they don’t want. “Though they can and regularly do tweak the results in all sorts of ways.”

Certainly the results about Google on Google don’t seem entirely neutral. Google “Is Google racist?” and the featured result – the Google answer boxed out at the top of the page – is quite clear: no. It is not.

But the enormity and complexity of having two global companies of a kind we have never seen before influencing so many areas of our lives is such, says Naughton, that “we don’t even have the mental apparatus to even know what the problems are”.

And this is especially true of the future. Google and Facebook are at the forefront of AI. They are going to own the future. And the rest of us can barely start to frame the sorts of questions we ought to be asking. “Politicians don’t think long term. And corporations don’t think long term because they’re focused on the next quarterly results and that’s what makes Google and Facebook interesting and different. They are absolutely thinking long term. They have the resources, the money, and the ambition to do whatever they want.

“They want to digitise every book in the world: they do it. They want to build a self-driving car: they do it. The fact that people are reading about these fake news stories and realising that this could have an effect on politics and elections, it’s like, ‘Which planet have you been living on?’ For Christ’s sake, this is obvious.”

“The internet is among the few things that humans have built that they don’t understand.” It is “the largest experiment involving anarchy in history. Hundreds of millions of people are, each minute, creating and consuming an untold amount of digital content in an online world that is not truly bound by terrestrial laws.” The internet as a lawless anarchic state? A massive human experiment with no checks and balances and untold potential consequences? What kind of digital doom-monger would say such a thing? Step forward, Eric Schmidt – Google’s chairman. They are the first lines of the book, The New Digital Age, that he wrote with Jared Cohen.

We don’t understand it. It is not bound by terrestrial laws. And it’s in the hands of two massive, all-powerful corporations. It’s their experiment, not ours. The technology that was supposed to set us free may well have helped Trump to power, or covertly helped swing votes for Brexit. It has created a vast network of propaganda that has encroached like a cancer across the entire internet. This is a technology that has enabled the likes of Cambridge Analytica to create political messages uniquely tailored to you. They understand your emotional responses and how to trigger them. They know your likes, dislikes, where you live, what you eat, what makes you laugh, what makes you cry.

And what next? Rebecca MacKinnon’s research has shown how authoritarian regimes reshape the internet for their own purposes. Is that what’s going to happen with Silicon Valley and Trump? As Martin Moore points out, the president-elect claimed that Apple chief executive Tim Cook called to congratulate him soon after his election victory. “And there will undoubtedly be pressure on them to collaborate,” says Moore.

Journalism is failing in the face of such change and is only going to fail further. New platforms have put a bomb under the financial model, advertising. Resources are shrinking, traffic is increasingly dependent on those platforms, and publishers have no access, no insight at all, into what these platforms are doing in their headquarters, their labs. And now they are moving beyond the digital world into the physical. The next frontiers are healthcare, transportation, energy. And just as Google is a near-monopoly for search, its ambition to own and control the physical infrastructure of our lives is what’s coming next. It already owns our data and with it our identity. What will it mean when it moves into all the other areas of our lives?

Facebook founder Mark Zuckerberg: still only 32 years of age. Photograph: Mariana Bazo/Reuters

“At the moment, there’s a distance when you Google ‘Jews are’ and get ‘Jews are evil’,” says Julia Powles, a researcher at Cambridge on technology and law. “But when you move into the physical realm, and these concepts become part of the tools being deployed when you navigate around your city or influence how people are employed, I think that has really pernicious consequences.”

Powles is shortly to publish a paper looking at DeepMind’s relationship with the NHS. “A year ago, 2 million Londoners’ NHS health records were handed over to DeepMind. And there was complete silence from politicians, from regulators, from anyone in a position of power. This is a company without any healthcare experience being given unprecedented access into the NHS and it took seven months to even know that they had the data. And that took investigative journalism to find it out.”

The headline was that DeepMind was going to work with the NHS to develop an app that would provide early warning for sufferers of kidney disease. And it is, but DeepMind’s ambitions – “to solve intelligence” – go way beyond that. The entire history of 2 million NHS patients is, for artificial intelligence researchers, a treasure trove. And their entry into the NHS – providing useful services in exchange for our personal data – is another massive step in their power and influence in every part of our lives.

Because the stage beyond search is prediction. Google wants to know what you want before you know yourself. “That’s the next stage,” says Martin Moore. “We talk about the omniscience of these tech giants, but that omniscience takes a huge step forward again if they are able to predict. And that’s where they want to go. To predict diseases in health. It’s really, really problematic.”

For the nearly 20 years that Google has been in existence, our view of the company has been inflected by the youth and liberal outlook of its founders. Ditto Facebook, whose mission, Zuckerberg said, was not to be “a company. It was built to accomplish a social mission to make the world more open and connected.”

It would be interesting to know how he thinks that’s working out. Donald Trump is connecting through exactly the same technology platforms that supposedly helped fuel the Arab spring; connecting to racists and xenophobes. And Facebook and Google are amplifying and spreading that message. And us too – the mainstream media. Our outrage is just another node on Jonathan Albright’s data map.

“The more we argue with them, the more they know about us,” he says. “It all feeds into a circular system. What we’re seeing here is a new era of network propaganda.”

We are all points on that map. And our complicity, our credulity, being consumers not concerned citizens, is an essential part of that process. And what happens next is down to us. “I would say that everybody has been really naive and we need to reset ourselves to a much more cynical place and proceed on that basis,” is Rebecca MacKinnon’s advice. “There is no doubt that where we are now is a very bad place. But it’s we as a society who have jointly created this problem. And if we want to get to a better place, when it comes to having an information ecosystem that serves human rights and democracy instead of destroying it, we have to share responsibility for that.”

Are Jews evil? How do you want that question answered? This is our internet. Not Google’s. Not Facebook’s. Not rightwing propagandists’. And we’re the only ones who can reclaim it.

Here’s what you don’t want to do late on a Sunday night. You do not want to type seven letters into Google. That’s all I did. I typed: “a-r-e”. And then “j-e-w-s”. Since 2008, Google has attempted to predict what question you might be asking and offers you a choice. And this is what it did. It offered me a choice of potential questions it thought I might want to ask: “are jews a race?”, “are jews white?”, “are jews christians?”, and finally, “are jews evil?”

Are Jews evil? It’s not a question I’ve ever thought of asking. I hadn’t gone looking for it. But there it was. I press enter. A page of results appears. This was Google’s question. And this was Google’s answer: Jews are evil. Because there, on my screen, was the proof: an entire page of results, nine out of 10 of which “confirm” this. The top result, from a site called Listovative, has the headline: “Top 10 Major Reasons Why People Hate Jews.” I click on it: “Jews today have taken over marketing, militia, medicinal, technological, media, industrial, cinema challenges etc and continue to face the worlds [sic] envy through unexplained success stories given their inglorious past and vermin like repression all over Europe.”

Google is search. It’s the verb, to Google. It’s what we all do, all the time, whenever we want to know anything. We Google it. The site handles at least 63,000 searches a second, 5.5bn a day. Its mission as a company, the one-line overview that has informed the company since its foundation and is still the banner headline on its corporate website today, is to “organise the world’s information and make it universally accessible and useful”. It strives to give you the best, most relevant results. And in this instance the third-best, most relevant result to the search query “are Jews… ” is a link to an article from stormfront.org, a neo-Nazi website. The fifth is a YouTube video: “Why the Jews are Evil. Why we are against them.”

The sixth is from Yahoo Answers: “Why are Jews so evil?” The seventh result is: “Jews are demonic souls from a different world.” And the 10th is from jesus-is-saviour.com: “Judaism is Satanic!”

There’s one result in the 10 that offers a different point of view. It’s a link to a rather dense, scholarly book review from thetabletmag.com, a Jewish magazine, with the unfortunately misleading headline: “Why Literally Everybody In the World Hates Jews.”

I feel like I’ve fallen down a wormhole, entered some parallel universe where black is white, and good is bad. Though later, I think that perhaps what I’ve actually done is scraped the topsoil off the surface of 2016 and found one of the underground springs that has been quietly nurturing it. It’s been there all the time, of course. Just a few keystrokes away… on our laptops, our tablets, our phones. This isn’t a secret Nazi cell lurking in the shadows. It’s hiding in plain sight.

Are women… Google’s search results.

Stories about fake news on Facebook have dominated certain sections of the press for weeks following the American presidential election, but arguably this is even more powerful, more insidious. Frank Pasquale, professor of law at the University of Maryland, and one of the leading academic figures calling for tech companies to be more open and transparent, calls the results “very profound, very troubling”.

He came across a similar instance in 2006 when, “If you typed ‘Jew’ in Google, the first result was jewwatch.org. It was ‘look out for these awful Jews who are ruining your life’. And the Anti-Defamation League went after them and so they put an asterisk next to it which said: ‘These search results may be disturbing but this is an automated process.’ But what you’re showing – and I’m very glad you are documenting it and screenshotting it – is that despite the fact they have vastly researched this problem, it has gotten vastly worse.”

And ordering of search results does influence people, says Martin Moore, director of the Centre for the Study of Media, Communication and Power at King’s College, London, who has written at length on the impact of the big tech companies on our civic and political spheres. “There’s large-scale, statistically significant research into the impact of search results on political views. And the way in which you see the results and the types of results you see on the page necessarily has an impact on your perspective.” Fake news, he says, has simply “revealed a much bigger problem. These companies are so powerful and so committed to disruption. They thought they were disrupting politics but in a positive way. They hadn’t thought about the downsides. These tools offer remarkable empowerment, but there’s a dark side to it. It enables people to do very cynical, damaging things.”

Google is knowledge. It’s where you go to find things out. And evil Jews are just the start of it. There are also evil women. I didn’t go looking for them either. This is what I type: “a-r-e w-o-m-e-n”. And Google offers me just two choices, the first of which is: “Are women evil?” I press return. Yes, they are. Every one of the 10 results “confirms” that they are, including the top one, from a site called sheddingoftheego.com, which is boxed out and highlighted: “Every woman has some degree of prostitute in her. Every woman has a little evil in her… Women don’t love men, they love what they can do for them. It is within reason to say women feel attraction but they cannot love men.”

Next I type: “a-r-e m-u-s-l-i-m-s”. And Google suggests I should ask: “Are Muslims bad?” And here’s what I find out: yes, they are. That’s what the top result says and six of the others. Without typing anything else, simply putting the cursor in the search box, Google offers me two new searches and I go for the first, “Islam is bad for society”. In the next list of suggestions, I’m offered: “Islam must be destroyed.”

Jews are evil. Muslims need to be eradicated. And Hitler? Do you want to know about Hitler? Let’s Google it. “Was Hitler bad?” I type. And here’s Google’s top result: “10 Reasons Why Hitler Was One Of The Good Guys”. I click on the link: “He never wanted to kill any Jews”; “he cared about conditions for Jews in the work camps”; “he implemented social and cultural reform.” Eight out of the other 10 search results agree: Hitler really wasn’t that bad.

A few days later, I talk to Danny Sullivan, the founding editor of SearchEngineLand.com. He’s been recommended to me by several academics as one of the most knowledgeable experts on search. Am I just being naive, I ask him? Should I have known this was out there? “No, you’re not being naive,” he says. “This is awful. It’s horrible. It’s the equivalent of going into a library and asking a librarian about Judaism and being handed 10 books of hate. Google is doing a horrible, horrible job of delivering answers here. It can and should do better.”

He’s surprised too. “I thought they stopped offering autocomplete suggestions for religions in 2011.” And then he types “are women” into his own computer. “Good lord! That answer at the top. It’s a featured result. It’s called a ‘direct answer’. This is supposed to be indisputable. It’s Google’s highest endorsement.” That every woman has some degree of prostitute in her? “Yes. This is Google’s algorithm going terribly wrong.”

I contacted Google about its seemingly malfunctioning autocomplete suggestions and received the following response: “Our search results are a reflection of the content across the web. This means that sometimes unpleasant portrayals of sensitive subject matter online can affect what search results appear for a given query. These results don’t reflect Google’s own opinions or beliefs – as a company, we strongly value a diversity of perspectives, ideas and cultures.”

Google isn’t just a search engine, of course. Search was the foundation of the company but that was just the beginning. Alphabet, Google’s parent company, now has the greatest concentration of artificial intelligence experts in the world. It is expanding into healthcare, transportation, energy. It’s able to attract the world’s top computer scientists, physicists and engineers. It’s bought hundreds of start-ups, including Calico, whose stated mission is to “cure death” and DeepMind, which aims to “solve intelligence”.

Google co-founders Larry Page and Sergey Brin in 2002. Photograph: Michael Grecco/Getty Images

And 20 years ago it didn’t even exist. When Tony Blair became prime minister, it wasn’t possible to Google him: the search engine had yet to be invented. The company was only founded in 1998 and Facebook didn’t appear until 2004. Google’s founders Sergey Brin and Larry Page are still only 43. Mark Zuckerberg of Facebook is 32. Everything they’ve done, the world they’ve remade, has been done in the blink of an eye.

But it seems the implications of the power and reach of these companies are only now seeping into the public consciousness. I ask Rebecca MacKinnon, director of the Ranking Digital Rights project at the New America Foundation, whether it was the recent furore over fake news that woke people up to the danger of ceding our rights as citizens to corporations. “It’s kind of weird right now,” she says, “because people are finally saying, ‘Gee, Facebook and Google really have a lot of power’ like it’s this big revelation. And it’s like, ‘D’oh.’”

MacKinnon has a particular expertise in how authoritarian governments adapt to the internet and bend it to their purposes. “China and Russia are a cautionary tale for us. I think what happens is that it goes back and forth. So during the Arab spring, it seemed like the good guys were further ahead. And now it seems like the bad guys are. Pro-democracy activists are using the internet more than ever but at the same time, the adversary has gotten so much more skilled.”

Last week Jonathan Albright, an assistant professor of communications at Elon University in North Carolina, published the first detailed research on how rightwing websites had spread their message. “I took a list of these fake news sites that was circulating, I had an initial list of 306 of them and I used a tool – like the one Google uses – to scrape them for links and then I mapped them. So I looked at where the links went – into YouTube and Facebook, and between each other, millions of them… and I just couldn’t believe what I was seeing.

“They have created a web that is bleeding through on to our web. This isn’t a conspiracy. There isn’t one person who’s created this. It’s a vast system of hundreds of different sites that are using all the same tricks that all websites use. They’re sending out thousands of links to other sites and together this has created a vast satellite system of rightwing news and propaganda that has completely surrounded the mainstream media system.”

He found 23,000 pages and 1.3m hyperlinks. “And Facebook is just the amplification device. When you look at it in 3D, it actually looks like a virus. And Facebook was just one of the hosts for the virus that helps it spread faster. You can see the New York Times in there and the Washington Post and then you can see how there’s a vast, vast network surrounding them. The best way of describing it is as an ecosystem. This really goes way beyond individual sites or individual stories. What this map shows is the distribution network and you can see that it’s surrounding and actually choking the mainstream news ecosystem.”

Like a cancer? “Like an organism that is growing and getting stronger all the time.”

Charlie Beckett, a professor in the school of media and communications at LSE, tells me: “We’ve been arguing for some time now that plurality of news media is good. Diversity is good. Critiquing the mainstream media is good. But now… it’s gone wildly out of control. What Jonathan Albright’s research has shown is that this isn’t a byproduct of the internet. And it’s not even being done for commercial reasons. It’s motivated by ideology, by people who are quite deliberately trying to destabilise the internet.”

A spatial map of the rightwing fake news ecosystem. Jonathan Albright, assistant professor of communications at Elon University, North Carolina, “scraped” 300 fake news sites (the dark shapes on this map) to reveal the 1.3m hyperlinks that connect them together and link them into the mainstream news ecosystem. Here, Albright shows it is a “vast satellite system of rightwing news and propaganda that has completely surrounded the mainstream media system”. Photograph: Jonathan Albright

Albright’s map also provides a clue to understanding the Google search results I found. What these rightwing news sites have done, he explains, is what most commercial websites try to do. They try to find the tricks that will move them up Google’s PageRank system. They try and “game” the algorithm. And what his map shows is how well they’re doing that.
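To make the idea of “gaming” a link-based ranking concrete, here is a minimal sketch of the textbook PageRank calculation on a toy web. It is an illustration only: Google’s production ranking is not public and uses many more signals, and the site names, the damping value of 0.85 and the five “satellite” sites are all assumptions invented for this example.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Textbook power-iteration PageRank over {page: [pages it links to]}."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page starts each round with the same small baseline share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its rank on equally to the pages it links to.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                share = damping * rank[page] / n
                for p in pages:
                    new_rank[p] += share
        rank = new_rank
    return rank


# Hypothetical toy web: two established sites link to each other,
# and a newcomer links out but nobody links to it.
organic = {
    "news_a": ["news_b"],
    "news_b": ["news_a"],
    "newcomer": ["news_a"],
}

# The same web plus five hypothetical satellite sites that all link to the newcomer.
gamed = dict(organic)
for i in range(5):
    gamed[f"satellite_{i}"] = ["newcomer"]

before = pagerank(organic)["newcomer"]
after = pagerank(gamed)["newcomer"]
print(f"newcomer's share of rank: {before:.3f} before, {after:.3f} after")
```

In this toy model the newcomer’s share of the total rank rises once the satellites point at it, even though nothing about its own content has changed. That is the basic lever a network of mutually linking sites can pull.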

That’s what my searches are showing too. That the right has colonised the digital space around these subjects – Muslims, women, Jews, the Holocaust, black people – far more effectively than the liberal left.

“It’s an information war,” says Albright. “That’s what I keep coming back to.”

But it’s where it goes from here that’s truly frightening. I ask him how it can be stopped. “I don’t know. I’m not sure it can be. It’s a network. It’s far more powerful than any one actor.”

So, it’s almost got a life of its own? “Yes, and it’s learning. Every day, it’s getting stronger.”

The more people who search for information about Jews, the more people will see links to hate sites, and the more they click on those links (very few people click on to the second page of results) the more traffic the sites will get, the more links they will accrue and the more authoritative they will appear. This is an entirely circular knowledge economy that has only one outcome: an amplification of the message. Jews are evil. Women are evil. Islam must be destroyed. Hitler was one of the good guys.
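The circular dynamic described above can be sketched as a toy simulation. This is not a model of any real search engine: the click shares by position, the starting scores and the rate at which clicks turn into authority are all assumptions chosen purely to show the shape of the loop.

```python
def simulate_click_feedback(scores, rounds=10, searches_per_round=1000, link_gain=0.001):
    """Toy loop: higher-ranked sites get more clicks, and clicks raise future scores."""
    # Assumed share of clicks by result position (the top result gets the most).
    click_share = [0.5, 0.3, 0.2]
    scores = dict(scores)  # work on a copy so the caller's data is untouched
    for _ in range(rounds):
        # Rank sites by their current score, best first.
        ranking = sorted(scores, key=scores.get, reverse=True)
        for position, site in enumerate(ranking[:len(click_share)]):
            clicks = searches_per_round * click_share[position]
            # Assumption: each click converts into a little extra authority.
            scores[site] += clicks * link_gain
    return scores


# Hypothetical sites that start out almost level.
start = {"site_a": 1.05, "site_b": 1.00, "site_c": 0.95}
end = simulate_click_feedback(start)

gap_before = start["site_a"] - start["site_b"]
gap_after = end["site_a"] - end["site_b"]
print(f"lead of the top site: {gap_before:.2f} before, {gap_after:.2f} after")
```

Under these assumptions, a site that starts with a sliver of an advantage ends up far ahead, because every round of searches rewards whoever is already on top.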

And the constellation of websites that Albright found – a sort of shadow internet – has another function. More than just spreading rightwing ideology, they are being used to track and monitor and influence anyone who comes across their content. “I scraped the trackers on these sites and I was absolutely dumbfounded. Every time someone likes one of these posts on Facebook or visits one of these websites, the scripts are then following you around the web. And this enables data-mining and influencing companies like Cambridge Analytica to precisely target individuals, to follow them around the web, and to send them highly personalised political messages. This is a propaganda machine. It’s targeting people individually to recruit them to an idea. It’s a level of social engineering that I’ve never seen before. They’re capturing people and then keeping them on an emotional leash and never letting them go.”

Cambridge Analytica, an American-owned company based in London, was employed by both the Vote Leave campaign and the Trump campaign. Dominic Cummings, the campaign director of Vote Leave, has made few public announcements since the Brexit referendum but he did say this: “If you want to make big improvements in communication, my advice is – hire physicists.”

Steve Bannon, founder of Breitbart News and the newly appointed chief strategist to Trump, is on Cambridge Analytica’s board and it has emerged that the company is in talks to undertake political messaging work for the Trump administration. It claims to have built psychological profiles using 5,000 separate pieces of data on 220 million American voters. It knows their quirks and nuances and daily habits and can target them individually.

“They were using 40-50,000 different variants of ad every day that were continuously measuring responses and then adapting and evolving based on that response,” says Martin Moore of King’s College. “Because they have so much data on individuals and they use such phenomenally powerful distribution networks, they allow campaigns to bypass a lot of existing laws.

“It’s all done completely opaquely and they can spend as much money as they like on particular locations because you can focus on a five-mile radius or even a single demographic. Fake news is important but it’s only one part of it. These companies have found a way of transgressing 150 years of legislation that we’ve developed to make elections fair and open.”

Did such micro-targeted propaganda – currently legal – swing the Brexit vote? We have no way of knowing. Did the same methods used by Cambridge Analytica help Trump to victory? Again, we have no way of knowing. This is all happening in complete darkness. We have no way of knowing how our personal data is being mined and used to influence us. We don’t realise that the Facebook page we are looking at, the Google page, the ads that we are seeing, the search results we are using, are all being personalised to us. We don’t see it because we have nothing to compare it to. And it is not being monitored or recorded. It is not being regulated. We are inside a machine and we simply have no way of seeing the controls. Most of the time, we don’t even realise that there are controls.


Rebecca MacKinnon says that most of us consider the internet to be like “the air that we breathe and the water that we drink”. It surrounds us. We use it. And we don’t question it. “But this is not a natural landscape. Programmers and executives and editors and designers, they make this landscape. They are human beings and they all make choices.”

But we don’t know what choices they are making. Neither Google nor Facebook makes its algorithms public. Why did my Google search return nine out of 10 search results that claim Jews are evil? We don’t know and we have no way of knowing. Their systems are what Frank Pasquale describes as “black boxes”. He calls Google and Facebook “a terrifying duopoly of power” and has been leading a growing movement of academics who are calling for “algorithmic accountability”. “We need to have regular audits of these systems,” he says. “We need people in these companies to be accountable. In the US, under the Digital Millennium Copyright Act, every company has to have a spokesman you can reach. And this is what needs to happen. They need to respond to complaints about hate speech, about bias.”

Is bias built into the system? Does it affect the kind of results that I was seeing? “There’s all sorts of bias about what counts as a legitimate source of information and how that’s weighted. There’s enormous commercial bias. And when you look at the personnel, they are young, white and perhaps Asian, but not black or Hispanic and they are overwhelmingly men. The worldview of young wealthy white men informs all these judgments.”

Later, I speak to Robert Epstein, a research psychologist at the American Institute for Behavioural Research and Technology, and the author of the study that Martin Moore told me about (and that Google has publicly criticised), showing how search-rank results affect voting patterns. On the other end of the phone, he repeats one of the searches I did. He types “do blacks…” into Google.

“Look at that. I haven’t even hit a button and it’s automatically populated the page with answers to the query: ‘Do blacks commit more crimes?’ And look, I could have been going to ask all sorts of questions. ‘Do blacks excel at sports’, or anything. And it’s only given me two choices and these aren’t simply search-based or the most searched terms right now. Google used to use that but now they use an algorithm that looks at other things. Now, let me look at Bing and Yahoo. I’m on Yahoo and I have 10 suggestions, not one of which is ‘Do black people commit more crime?’

“And people don’t question this. Google isn’t just offering a suggestion. This is a negative suggestion and we know that negative suggestions depending on lots of things can draw between five and 15 more clicks. And this is all programmed. And it could be programmed differently.”

What Epstein’s work has shown is that the contents of a page of search results can influence people’s views and opinions. The type and order of search rankings were shown to influence voters in India in double-blind trials. There were similar results relating to the search suggestions you are offered.

“The general public are completely in the dark about very fundamental issues regarding online search and influence. We are talking about the most powerful mind-control machine ever invented in the history of the human race. And people don’t even notice it.”

Damian Tambini, an associate professor at the London School of Economics, who focuses on media regulation, says that we lack any sort of framework to deal with the potential impact of these companies on the democratic process. “We have structures that deal with powerful media corporations. We have competition laws. But these companies are not being held responsible. There are no powers to get Google or Facebook to disclose anything. There’s an editorial function to Google and Facebook but it’s being done by sophisticated algorithms. They say it’s machines not editors. But that’s simply a mechanised editorial function.”

And the companies, says John Naughton, the Observer columnist and a senior research fellow at Cambridge University, are terrified of acquiring editorial responsibilities they don’t want. “Though they can and regularly do tweak the results in all sorts of ways.”

Certainly the results about Google on Google don’t seem entirely neutral. Google “Is Google racist?” and the featured result – the Google answer boxed out at the top of the page – is quite clear: no. It is not.

But the enormity and complexity of having two global companies of a kind we have never seen before influencing so many areas of our lives is such, says Naughton, that “we don’t even have the mental apparatus to even know what the problems are”.

And this is especially true of the future. Google and Facebook are at the forefront of AI. They are going to own the future. And the rest of us can barely start to frame the sorts of questions we ought to be asking. “Politicians don’t think long term. And corporations don’t think long term because they’re focused on the next quarterly results and that’s what makes Google and Facebook interesting and different. They are absolutely thinking long term. They have the resources, the money, and the ambition to do whatever they want.

“They want to digitise every book in the world: they do it. They want to build a self-driving car: they do it. The fact that people are reading about these fake news stories and realising that this could have an effect on politics and elections, it’s like, ‘Which planet have you been living on?’ For Christ’s sake, this is obvious.”

“The internet is among the few things that humans have built that they don’t understand.” It is “the largest experiment involving anarchy in history. Hundreds of millions of people are, each minute, creating and consuming an untold amount of digital content in an online world that is not truly bound by terrestrial laws.” The internet as a lawless anarchic state? A massive human experiment with no checks and balances and untold potential consequences? What kind of digital doom-mongerer would say such a thing? Step forward, Eric Schmidt – Google’s chairman. They are the first lines of the book, The New Digital Age, that he wrote with Jared Cohen.

We don’t understand it. It is not bound by terrestrial laws. And it’s in the hands of two massive, all-powerful corporations. It’s their experiment, not ours. The technology that was supposed to set us free may well have helped Trump to power, or covertly helped swing votes for Brexit. It has created a vast network of propaganda that has encroached like a cancer across the entire internet. This is a technology that has enabled the likes of Cambridge Analytica to create political messages uniquely tailored to you. They understand your emotional responses and how to trigger them. They know your likes, dislikes, where you live, what you eat, what makes you laugh, what makes you cry.

And what next? Rebecca MacKinnon’s research has shown how authoritarian regimes reshape the internet for their own purposes. Is that what’s going to happen with Silicon Valley and Trump? As Martin Moore points out, the president-elect claimed that Apple chief executive Tim Cook called to congratulate him soon after his election victory. “And there will undoubtedly be pressure on them to collaborate,” says Moore.

Journalism is failing in the face of such change and is only going to fail further. New platforms have put a bomb under the financial model – advertising – resources are shrinking, traffic is increasingly dependent on them, and publishers have no access, no insight at all, into what these platforms are doing in their headquarters, their labs. And now they are moving beyond the digital world into the physical. The next frontiers are healthcare, transportation, energy. And just as Google is a near-monopoly for search, its ambition to own and control the physical infrastructure of our lives is what’s coming next. It already owns our data and with it our identity. What will it mean when it moves into all the other areas of our lives?

Facebook founder Mark Zuckerberg: still only 32 years of age. Photograph: Mariana Bazo/Reuters

“At the moment, there’s a distance when you Google ‘Jews are’ and get ‘Jews are evil’,” says Julia Powles, a researcher at Cambridge on technology and law. “But when you move into the physical realm, and these concepts become part of the tools being deployed when you navigate around your city or influence how people are employed, I think that has really pernicious consequences.”

Powles is shortly to publish a paper looking at DeepMind’s relationship with the NHS. “A year ago, 2 million Londoners’ NHS health records were handed over to DeepMind. And there was complete silence from politicians, from regulators, from anyone in a position of power. This is a company without any healthcare experience being given unprecedented access into the NHS and it took seven months to even know that they had the data. And that took investigative journalism to find it out.”

The headline was that DeepMind was going to work with the NHS to develop an app that would provide early warning for sufferers of kidney disease. And it is, but DeepMind’s ambition – “to solve intelligence” – goes way beyond that. The entire history of 2 million NHS patients is, for artificial intelligence researchers, a treasure trove. And their entry into the NHS – providing useful services in exchange for our personal data – is another massive step in their power and influence in every part of our lives.

Because the stage beyond search is prediction. Google wants to know what you want before you know yourself. “That’s the next stage,” says Martin Moore. “We talk about the omniscience of these tech giants, but that omniscience takes a huge step forward again if they are able to predict. And that’s where they want to go. To predict diseases in health. It’s really, really problematic.”

For the nearly 20 years that Google has been in existence, our view of the company has been inflected by the youth and liberal outlook of its founders. Ditto Facebook, whose mission, Zuckerberg said, was not to be “a company. It was built to accomplish a social mission to make the world more open and connected.”

It would be interesting to know how he thinks that’s working out. Donald Trump is connecting through exactly the same technology platforms that supposedly helped fuel the Arab spring; connecting to racists and xenophobes. And Facebook and Google are amplifying and spreading that message. And us too – the mainstream media. Our outrage is just another node on Jonathan Albright’s data map.

“The more we argue with them, the more they know about us,” he says. “It all feeds into a circular system. What we’re seeing here is a new era of network propaganda.”

We are all points on that map. And our complicity, our credulity, being consumers not concerned citizens, is an essential part of that process. And what happens next is down to us. “I would say that everybody has been really naive and we need to reset ourselves to a much more cynical place and proceed on that basis,” is Rebecca MacKinnon’s advice. “There is no doubt that where we are now is a very bad place. But it’s we as a society who have jointly created this problem. And if we want to get to a better place, when it comes to having an information ecosystem that serves human rights and democracy instead of destroying it, we have to share responsibility for that.”

Are Jews evil? How do you want that question answered? This is our internet. Not Google’s. Not Facebook’s. Not rightwing propagandists’. And we’re the only ones who can reclaim it.
